by Michael Cropper | Jan 9, 2023 | IT, Technical |

This is a topic that comes up quite infrequently for many people and, for most, never. It’s also significantly more complex than it should be, and how to do it properly is fairly poorly documented online. Fundamentally this is a basic Copy & Paste exercise at best, but it’s made ridiculously complex by the underlying technical gubbins. So hopefully this blog post can clear up the steps involved and some of the considerations you need to make.

Old and New Disks

Disks come in many different shapes and sizes both conceptually and physically, with varying connectors and different underlying technologies. The nuances of these are beyond the scope of this blog post, but to put a few basics down to help conversation let’s look at a few of these at a high level.

We’ve previously covered off topics on the Performance of SSDs VS HDDs so take a look at that for some handy background info.

Summary being that you essentially have two types of hard drives;

- Mechanical Hard Disk Drive (HDD) – Has moving parts

- Solid State Drives (SSD) – Has no moving parts

See the above blog post for further insights into the differences.

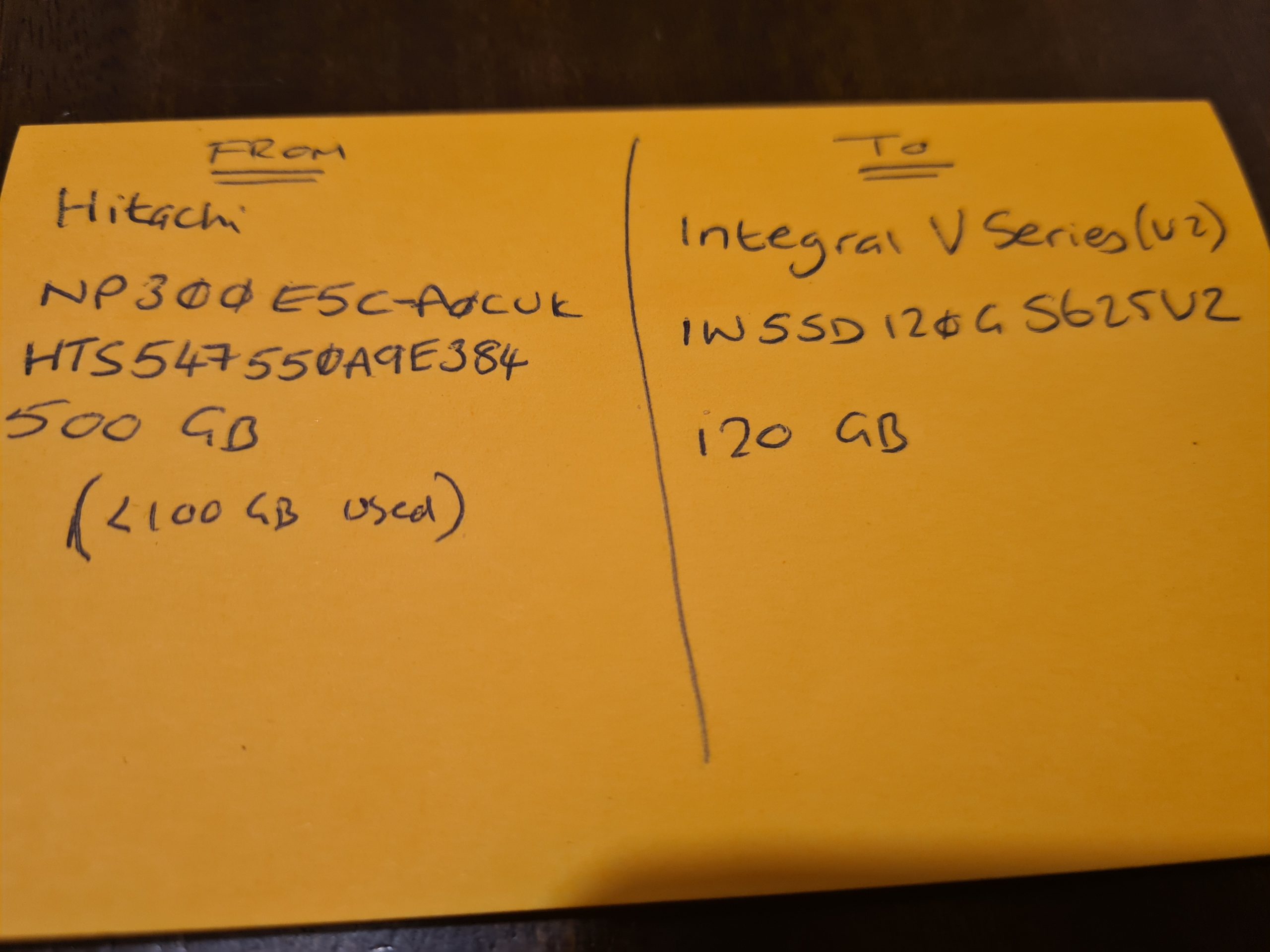

Anyhow, the important point for the purpose of cloning a hard disk is that you need to know the details of what you are going from and what you are moving to. Get this wrong and you can seriously mess things up in a completely unrecoverable way, so please be careful. If you aren’t sure what you are doing, don’t proceed, and instead pass this on to a professional to do for you.

Disk Connectors – IDE VS SATA

For the sake of simplicity, the two core connectors for disk drives fundamentally fall into either IDE (old) or SATA (new). Yes, the techies who are reading this will say that this is garbage, and it is. But, in reality, for those reading this blog post, this is likely going to cover 99% of use cases.

In reality there are many types of disk connectors, from PATA, SATA, SCSI, SSD, HDD, IDE, M.2 NVMe, M.2 SATA, mSATA, RAID, Host Bus Adapters (HBA) and more (and yes, not all of these are technically connectors… but for the sake of simplicity, we don’t care for this blog post). At the time of writing, the majority of people using disk connectors outside of the basics are generally going to be working within corporate enterprises. These tend to operate on a bin and replace mentality from a hardware perspective for basic user computers, while their data centres and server racks are set up with cloud native data storage with high availability and lots of redundancy. For many smaller organisations and/or personal use cases, this is a goal to work towards.

This is why we are covering this topic for the average user: to help you understand the basics of how to clone a hard disk, whether you are upgrading or trying to recover data from a failing disk.

Adapters

Ok, so we’ve covered off the different types of hard disks, it’s time to look at how we connect them to a computer to perform the data migration. Here is where we need the correct connectors to do the job, and this isn’t straightforward.

For simplicity and ease, USB is likely to be the easiest solution for the majority of use cases. Note there is a significant difference in USB 1.0 VS USB 2.0 VS USB 3.0 when it comes to performance and to add to the complexity, there are also different USB Form Factors (aka. different shapes of the connector, but fundamentally doing the same thing) which adds to the confusion.

I work in this field, and I am continually surprised (aka. annoyed…) by the manufacturers who continually make this 1000x more complex than it needs to be. I for one am extremely happy that the European Union (EU) has decided to take a first stance on this topic to help to simplify the needless complexity by standardising on a single port type for charging devices. Personally I have endless converters, adapters, port changers, extender cables and more for the most basic of tasks. It’s a bloody nightmare on a personal level. And at an environmental level, just utterly wasteful.

Anyhow, to keep things simple again, there are a few basic adapters you probably need to help you with cloning a hard disk. These are;

- USB External SATA Disk Drive Connector / Adapter Cable

- USB External SATA, 3.5” IDE, 2.5” IDE Disk Drive Adapter Tool Kit

Connectors, Adapters and Speed

This is a complex topic, and one that quite frankly I don’t have the time to get into the details of, mainly because the manufacturers make this far more difficult than it should be. You see, we have things such as USB 1, 2, 3, SATA 1, 2, 3, IDE 1, 2, 3 etc. and I just don’t have the mental capacity to care too much about the differences between these things. I work with what is available and adapt as needed.

The reality is that each and every connector or adapter has a maximum data transfer rate based on the physical materials and hardware that the device has been manufactured from. Everything has limitations and manufacturers don’t make this info easily accessible and/or understandable to the average joe.
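To make the point about maximum transfer rates a little more concrete, here is a rough sketch in Python. It takes the nominal (headline) speeds of a few common connectors and works out which link in your chain is the bottleneck, plus a best case copy time. The function names are made up for this example, and real-world speeds will be well below these figures, so treat the result as "it can’t possibly be faster than this" rather than a prediction.

```python
# Nominal (theoretical maximum) signalling rates in megabits per second.
# Real-world throughput is noticeably lower because of encoding and protocol
# overhead, and because the disks themselves are often slower than the cable.
NOMINAL_MBIT_PER_S = {
    "USB 1.1": 12,
    "USB 2.0": 480,
    "USB 3.0": 5000,
    "SATA I": 1500,
    "SATA II": 3000,
    "SATA III": 6000,
}


def bottleneck(links):
    """Return the name of the slowest link in the chain."""
    return min(links, key=lambda name: NOMINAL_MBIT_PER_S[name])


def best_case_minutes(data_gb, links):
    """Lower bound on copy time for data_gb gigabytes (1 GB = 1000 MB)."""
    slowest_mbit = NOMINAL_MBIT_PER_S[bottleneck(links)]
    megabytes_per_second = slowest_mbit / 8
    seconds = (data_gb * 1000) / megabytes_per_second
    return seconds / 60


if __name__ == "__main__":
    chain = ["SATA III", "USB 2.0"]  # a SATA disk on a USB 2.0 adapter
    print(bottleneck(chain))                        # USB 2.0
    print(round(best_case_minutes(100, chain), 1))  # 27.8
```

So a fast SATA disk plugged in through a USB 2.0 adapter is limited by the adapter, not the disk. If the numbers look painful, a USB 3.0 adapter and port is usually the cheapest fix.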

Unique IDs of Disk Drives

Right, so now we’re onto the actual hard disk data migration. Now things get fun, and possibly dangerous – so be careful.

Almost every guide I’ve read online skims over this really important point, and it’s probably the most crucial point to take into account – which is to know your IDs, your Unique Hardware Identifier.

A bit of background, as it’s important to understand. Those with a software engineering and/or database background will be very familiar with a Unique Identifier for a ‘thing’. Well, hardware manufacturers do the same thing. Every physical chip that is manufactured generally has a hard coded unique identifier embedded in it, which both helps and hinders in many different ways, but that is a topic for another discussion. For example, the sensors that we use on the GeezerCloud product have a Unique ID for every single sensor that we use.

Anyhow, the most important point for this blog post is that all disk drives have a unique identifier. Thankfully this identifier, typically a model number plus a serial number, is printed on the sticker that is physically attached to the disk you have in front of you.

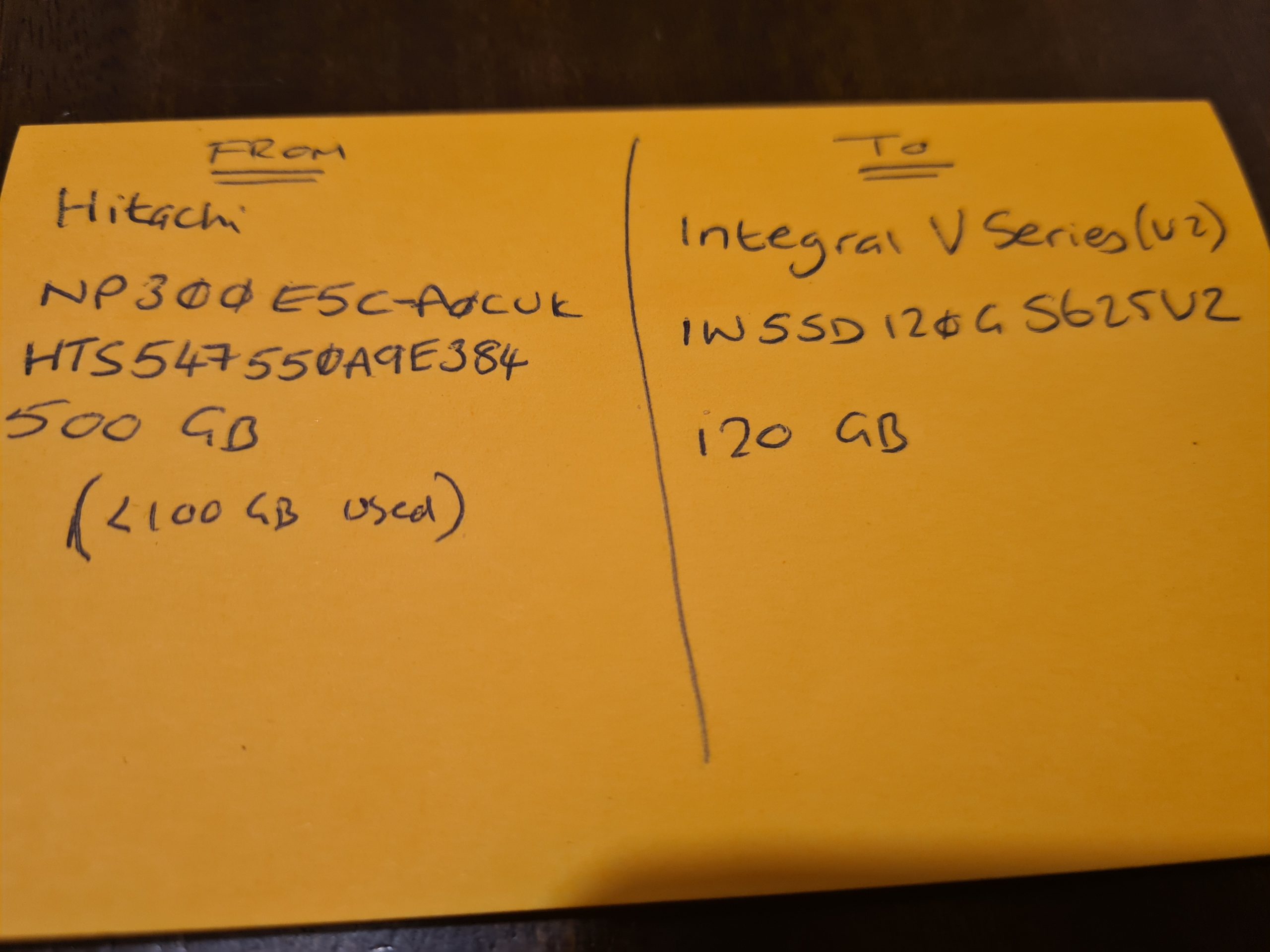

Make a note of the ID of the Disks.

I cannot stress this enough. Make sure you have the IDs of the disks you are working with to transfer data from and to. Make a note of the labels printed on the physical disks so that you can ensure you are transferring data from the right source and to the correct destination.

There is no going back from an incorrect action at this step.

Physical Disks, Partitions and Bootable Disks

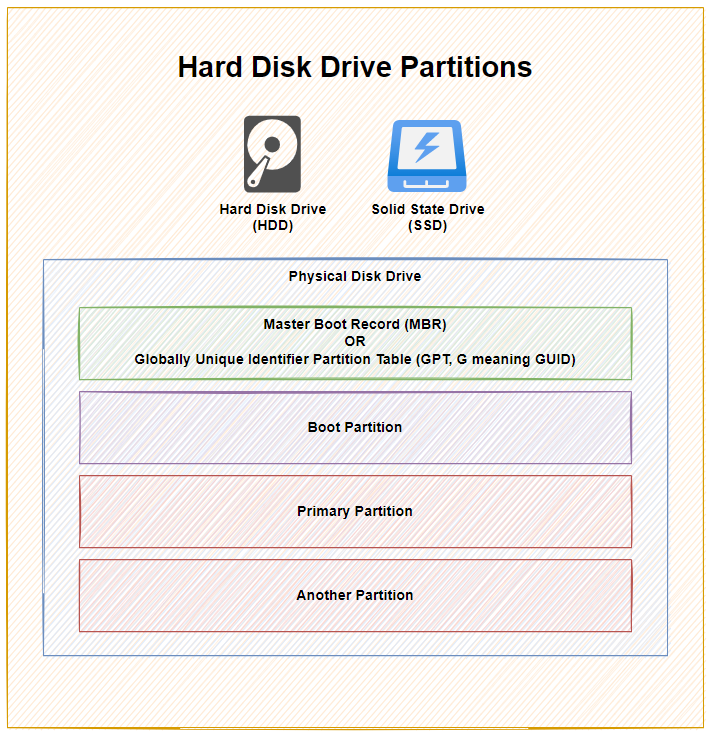

Next, before we actually get on to the migration, it’s important to understand the context. There is a Physical Disk connected to the computer, but then we have Partitions and Boot Partitions to contend with, along with both physical and logical volumes. For the purposes of this blog post, treat Volume as just another word for Partition.

This all depends on your specific use case. For example, if the disk you are cloning is from an external USB hard disk, then this probably doesn’t have a bootable operating system set up, as it is just there to store basic data. Whereas if you are upgrading your primary disk that runs your operating system, then you will have a Boot Partition, which is the part of the disk that holds a piece of software called the Boot Loader, which is responsible for booting the operating system you have installed.

For Example;

As you can see above, with 1x physical disk drive, whether that is a Hard Disk Drive (HDD) or a Solid State Drive (SSD), they ultimately have the same bits under the hood to make the disk work as it should based on your requirements, either as a Bootable Disk or a Non-Bootable Disk.

To explain a few concepts;

- The Master Boot Record (MBR) is the older partition table format and was designed for disks of up to 2 TB. Many disks these days are larger than that, so as a general rule of thumb you are probably best using the GUID Partition Table (GPT, where GUID stands for Globally Unique Identifier) when managing your disks. With the usual 512-byte sectors, MBR can only address about 2 TB, so even if your disk is 10 TB, anything beyond the first 2 TB cannot be used, which soon becomes a pain to manage. Compare this with GPT, which has a maximum capacity of 9.4 ZB, so you’re good for a while using this option.

- Primary Partition, this is where your operating system is installed and your data saved

- Another Partition, this is just an example where some people use multiple partitions on the disk to manage their data. In reality, for basic disks you are likely only using one primary partition for standard computer use. When you get into the world of Servers and Data Management, then you end up having many logical partitions to segment your data on the disk for the virtual machines using that data, but that’s out of scope of this blog post.

I have seen this a few times in practice when computers have come my way to fix after a ‘professional’ had already apparently fixed something and clearly it wasn’t done correctly. One recent example was with a 3 TB disk drive, yet only 2 TB of it was available for use, as it had been configured with a single partition capped at MBR’s 2 TB maximum. Clearly the person setting this up didn’t really look too closely at anything they were doing, particularly as their primary ‘fix’ was to replace a 3 TB disk drive with a 120 GB disk drive. The end person using the machine was then sat wondering why nothing worked any longer and why the only way they could access their files was from an external USB drive. #FacePalm
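If you are wondering where the 2 TB and 9.4 ZB figures come from, it’s just arithmetic. MBR records sector positions in 32-bit fields and GPT uses 64-bit fields. The sketch below assumes the traditional 512-byte sector size (an assumption on my part, some disks present larger sectors), and the function name is made up for the example.

```python
SECTOR_BYTES = 512  # assumption: traditional 512-byte logical sectors

# MBR stores sector addresses and counts in 32-bit fields.
MBR_MAX_BYTES = (2 ** 32) * SECTOR_BYTES
# GPT uses 64-bit fields.
GPT_MAX_BYTES = (2 ** 64) * SECTOR_BYTES


def usable_under_mbr(disk_bytes):
    """Bytes of a disk that an MBR partition table can address."""
    return min(disk_bytes, MBR_MAX_BYTES)


if __name__ == "__main__":
    three_tb = 3 * 10 ** 12
    print(MBR_MAX_BYTES / 10 ** 12)               # MBR limit in TB, roughly 2.2
    print(usable_under_mbr(three_tb) / 10 ** 12)  # usable part of a 3 TB disk
    print(GPT_MAX_BYTES / 10 ** 21)               # GPT limit in ZB, roughly 9.4
```

That is why a 3 TB disk set up with MBR shows roughly 2 TB of usable space, with the rest sat there doing nothing.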

Windows Disk Management

So what does all this look like in practice? Well, thankfully Windows 10 comes with a handy utility called Disk Management. To access this, simply right click on the Windows ‘Start Menu’ Icon and click on ‘Disk Management’.

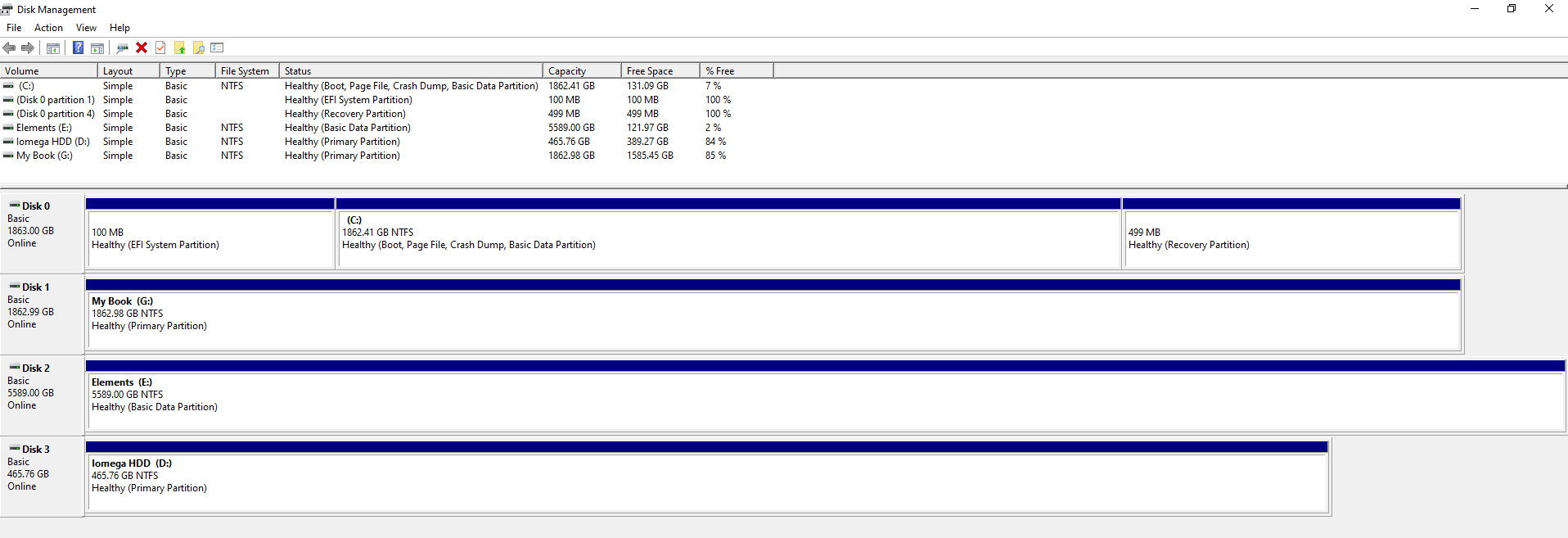

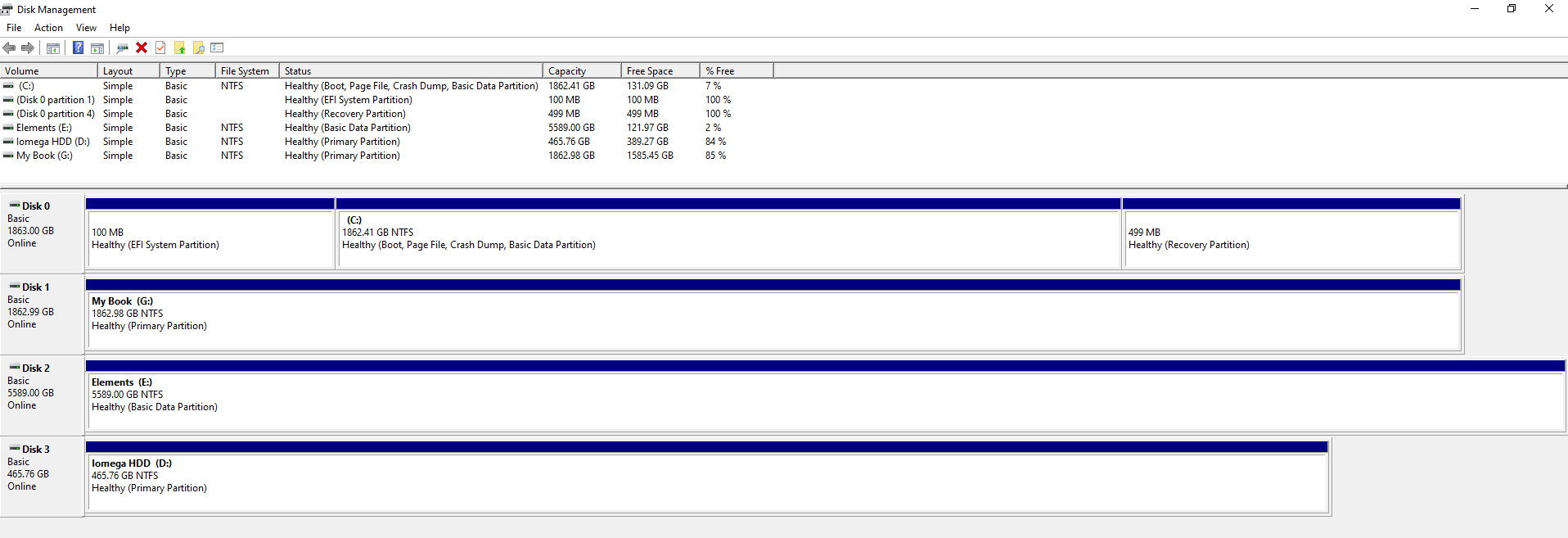

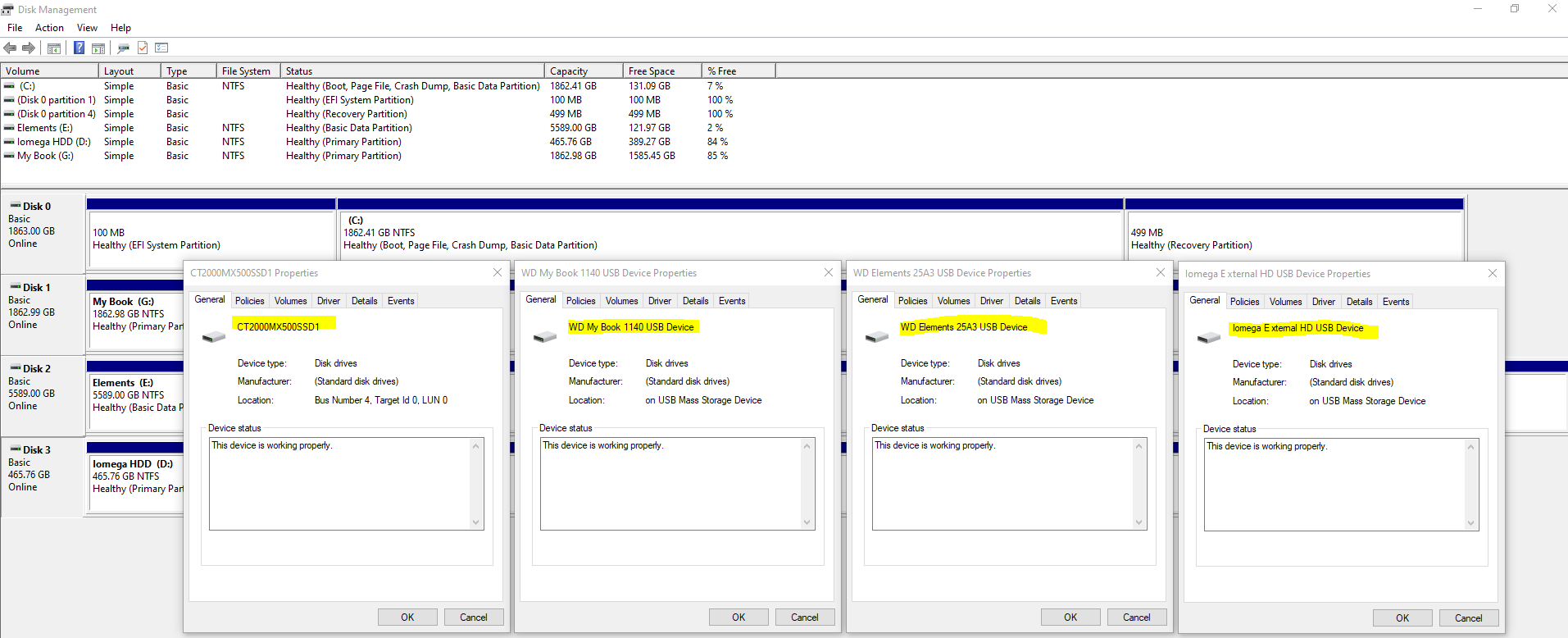

To bring the above conceptual diagram into focus, here is a real example of what this looks like with multiple disks connected to a computer;

In the above example you can see that there are 4x disks connected to the machine. One is the main disk used for the operating system and the other three are external USB hard drives in this example. What is a tad annoying with this user interface though is that it isn’t clear exactly which disk is which, so you have to be extremely careful. To any user Disk 0, 1, 2, 3 doesn’t really mean anything so at best you have to try and align the disk sizes to what you can see within your ‘This PC’ on your Windows machine.

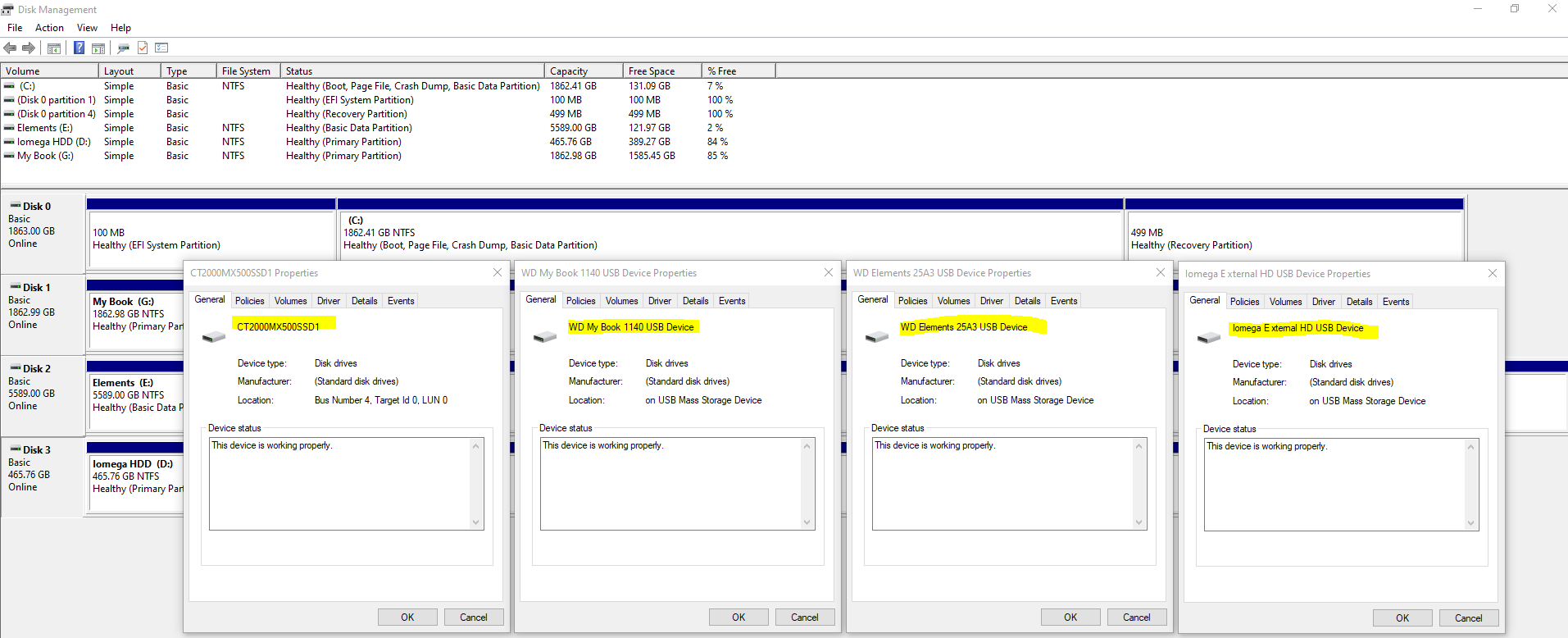

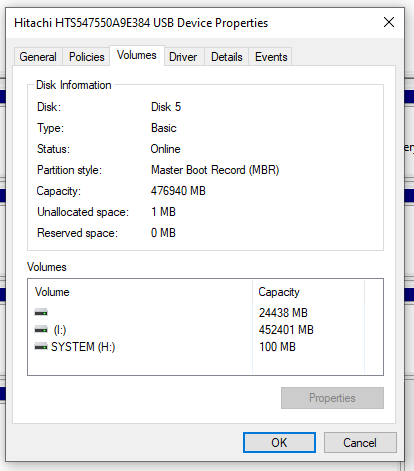

Thankfully when you Right Click on one of the rows and click on Properties, you can see the name of the disk come up as can be seen below;

This info will come in extremely handy when you start plugging in some disk drives that you are going to be working with. It’s essential that you are moving data from the correct disk to the correct disk.

Plugging in Your Disks

Ok, so now we’ve covered off the background topics for how to clone a hard disk, it’s time to jump in and give this a go. You must take this a step at a time to ensure you are 1000% confident that you are doing the right thing. As I have said many times already, if you get this bit wrong, it’s going to be very disruptive, particularly if, like many people, you still don’t have 100% of your data backed up in the cloud.

So, here’s what you’re going to need;

- Old Disk

- New Disk (Contact us if you need us to supply and we can price things up if you aren’t sure what you’re looking for)

- USB External SATA Disk Drive Connector / Adapter Cable

- USB External SATA, 3.5” IDE, 2.5” IDE Disk Drive Adapter Tool Kit

Make a note of the IDs of your disks from the labels on the physical disk drive. You should see these exact names show up in Windows Disk Management Utility Software. It is these IDs that you will need in the next step to make sure you are cloning the data from and to the correct disks.
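If you would rather not rely on eyeballing names and sizes, here is a minimal sketch of a double check in Python. It assumes you have run something like `Get-Disk | Select-Object Number, FriendlyName, SerialNumber, Size | ConvertTo-Json` in PowerShell and saved the output as text. The helper names and the sample data are made up for illustration. It simply matches the serial number you noted from the sticker against what Windows reports, and refuses to guess if there isn’t exactly one match.

```python
import json


def load_disks(get_disk_json):
    """Parse the JSON text produced by Get-Disk ... | ConvertTo-Json."""
    data = json.loads(get_disk_json)
    # ConvertTo-Json emits a single object (not a list) when there is one disk.
    return data if isinstance(data, list) else [data]


def find_disk(disks, serial_from_label):
    """Return the one disk whose serial number matches the printed label."""
    wanted = serial_from_label.strip().upper()
    matches = [
        d for d in disks
        if (d.get("SerialNumber") or "").strip().upper() == wanted
    ]
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one disk with serial {wanted!r}, found {len(matches)}"
        )
    return matches[0]


if __name__ == "__main__":
    # Made-up sample data, shaped like the PowerShell output described above.
    sample = """[
      {"Number": 0, "FriendlyName": "Example SSD 1TB", "SerialNumber": "AAA111", "Size": 1000204886016},
      {"Number": 1, "FriendlyName": "Example HDD 500GB", "SerialNumber": "  BBB222 ", "Size": 500107862016},
      {"Number": 2, "FriendlyName": "Example SSD 120GB", "SerialNumber": "CCC333", "Size": 120034123776}
    ]"""
    disks = load_disks(sample)
    source = find_disk(disks, "BBB222")
    destination = find_disk(disks, "CCC333")
    print("Source:      Disk", source["Number"], source["FriendlyName"])
    print("Destination: Disk", destination["Number"], destination["FriendlyName"])
```

One caveat: some USB adapters may report their own identifier rather than the one on the disk’s sticker, so if the match fails, stop and check by hand rather than pressing on.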

One item to note is that if you are using a brand new disk for your New Disk, then you will need to Initialise the disk via Windows Disk Management Utility Software when prompted once it is plugged in. Use GPT unless the old disk uses MBR (see the section on checking GPT or MBR further down). For disks that you are re-using, this initialisation step usually doesn’t appear. For new disks you will also need to right click on the unallocated area of the disk and select New Simple Volume, give the Volume (aka. Partition) a size and a Drive Letter, then format the new partition so you can use it going forwards. Then the drive is ready for use.

Clone Hard Disk Software

There is a small handful of software available both commercially and open source for cloning disk drives, with significantly varying usability aspects. For simplicity, we’re going to take a look at one of the easier to use pieces of software called Acronis True Image for Crucial.

Acronis is a commercial product, but many disk manufacturers offer a free edition of Acronis with the Clone Disk feature included; the above software, for example, works for Crucial Disk Drives. There are a lot of makes/models of disks on the market, so if in doubt, contact the disk drive manufacturer directly via their support channels and they can advise which software works best with your hardware.

There are also lots of super technical open source options available, but personally I’ve just not had time to play with these. This is fundamentally a basic copy and paste job, so in my opinion it should have a user interface that allows anyone to do this kind of thing.

Here are a few images of the setup I was playing with for the purpose of this blog post;

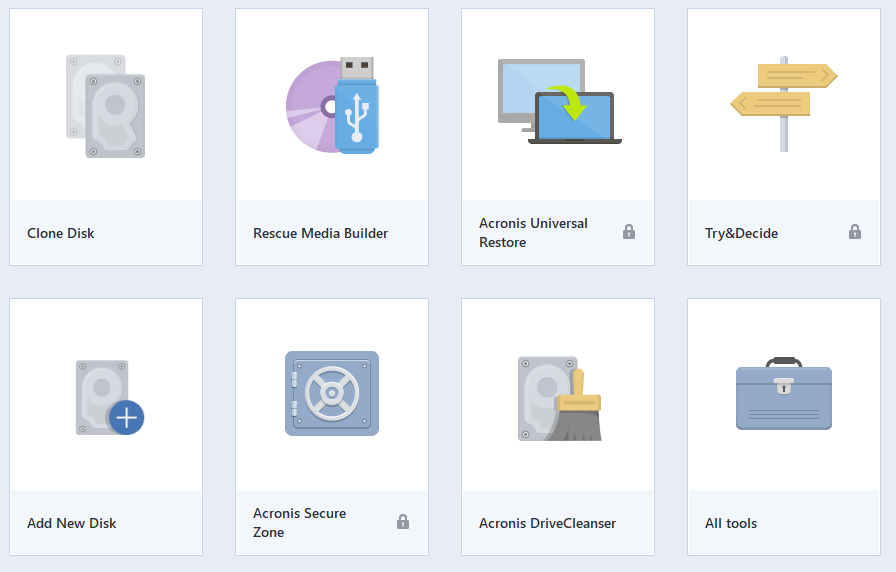

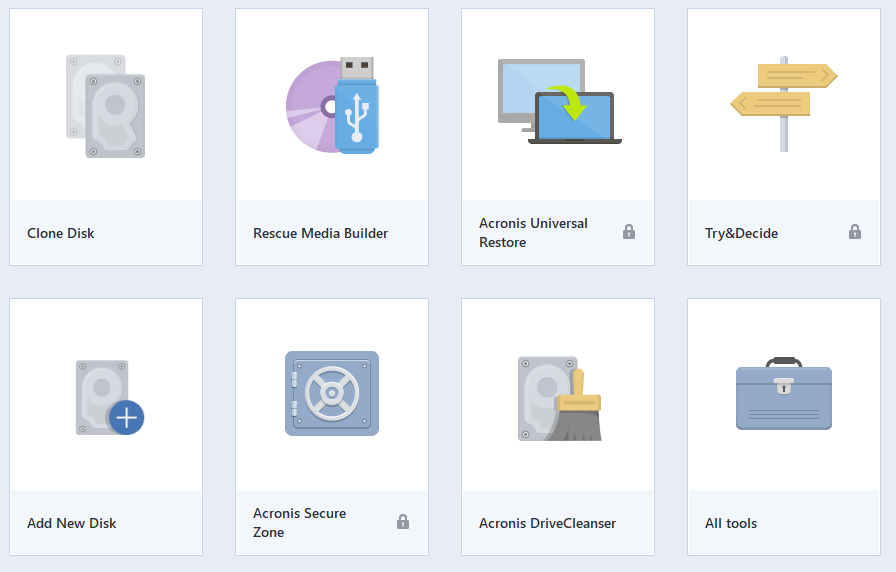

Open Up Clone Disk Tool in Acronis

When you have Acronis open, select the Clone Disk tool. Note, this can take a while to open up, so be patient.

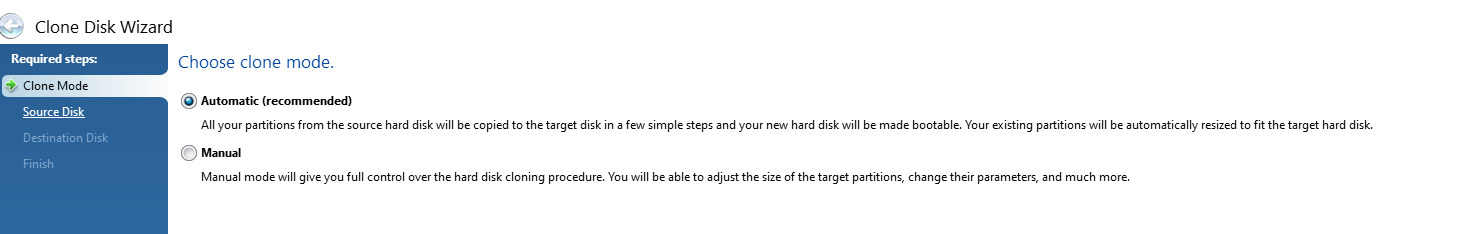

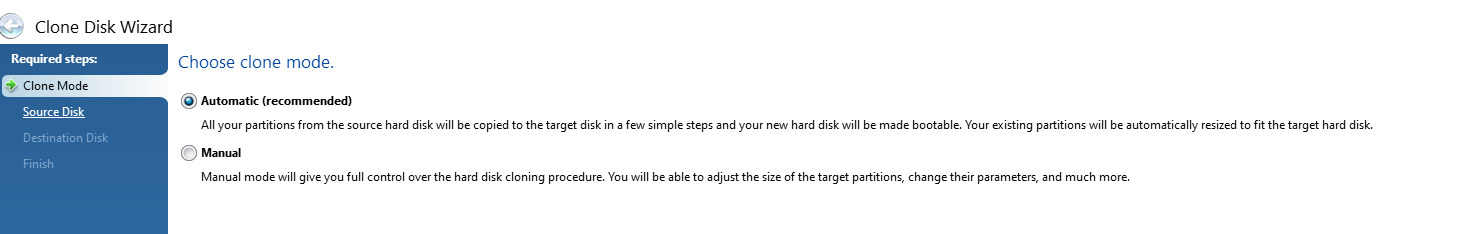

Select Automatic Clone Mode

This is the mode that is most common to use which handles everything in the background for you. The Manual mode gives you much more control but can often be a bit overwhelming if you aren’t too familiar with some of these concepts.

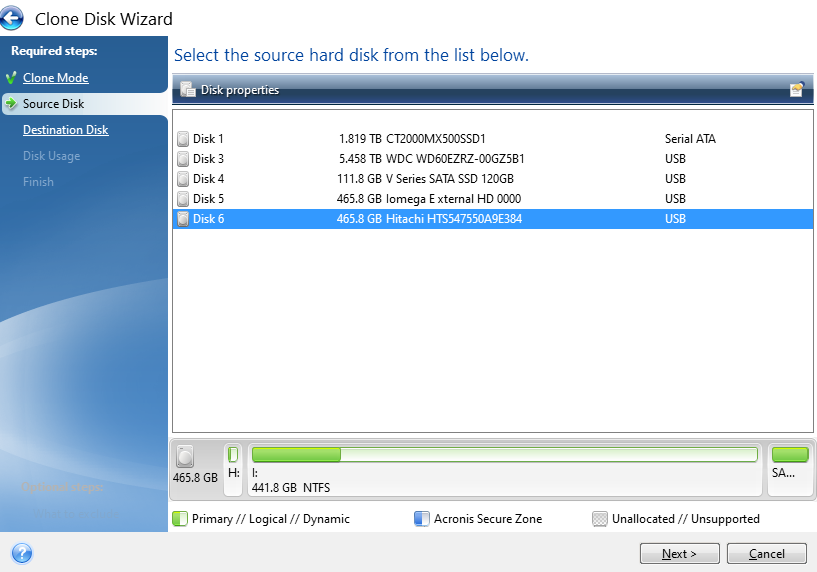

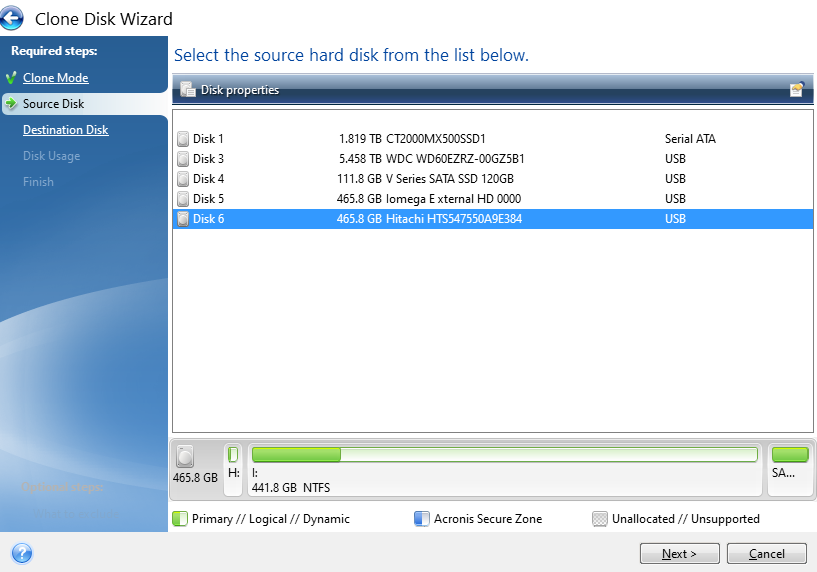

Select Source Disk

This step is particularly important. Make sure you select the correct ID that is printed on the hard drive sticker so you are confident you are moving data from the correct disk drive.

You’ll notice the handy info that Acronis displays at the bottom which shows how the partitions on the drive are currently set up and what is and isn’t being used. This comes in very handy in the next step, particularly as in this case the data is being migrated from a 500 GB HDD to a 120 GB SSD. Your math is correct, that doesn’t fit – but – Acronis is smart enough to only transfer the data that is being used which means that in this scenario the data will fit.
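The "does it fit" question is worth checking yourself before you start rather than trusting the software. Here is a minimal sketch; the function name, the 10% headroom and the example figures are all assumptions for illustration, not anything Acronis does internally.

```python
def clone_fits(used_bytes, destination_bytes, headroom=0.10):
    """True if the used data fits on the destination with some spare room.

    headroom is an arbitrary safety margin chosen for this sketch.
    """
    return used_bytes <= destination_bytes * (1 - headroom)


if __name__ == "__main__":
    GB = 10 ** 9
    # Hypothetical figures: a 500 GB source disk with 80 GB in use,
    # going to a 120 GB destination.
    print(clone_fits(80 * GB, 120 * GB))   # True
    print(clone_fits(115 * GB, 120 * GB))  # False
```

If the source disk is mounted with a drive letter, Python’s `shutil.disk_usage("D:\\").used` is one way to get the used figure to feed in.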

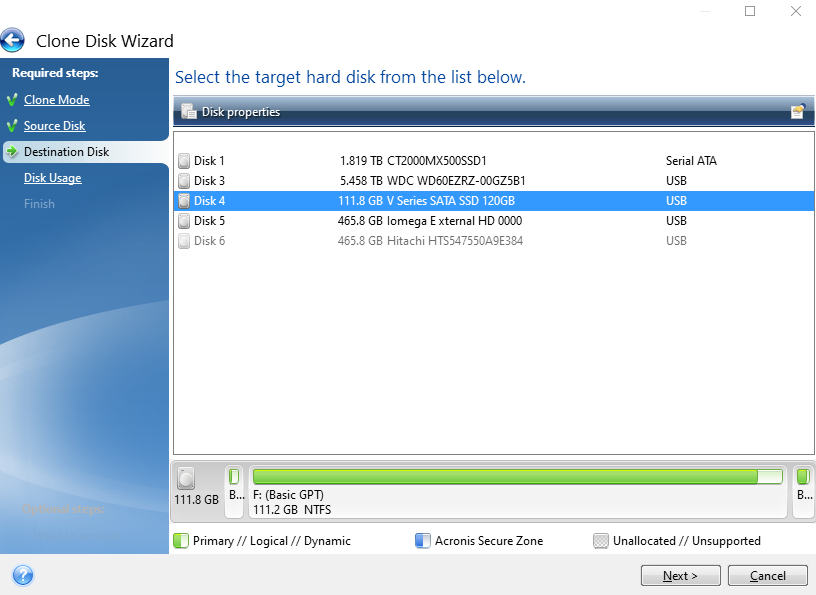

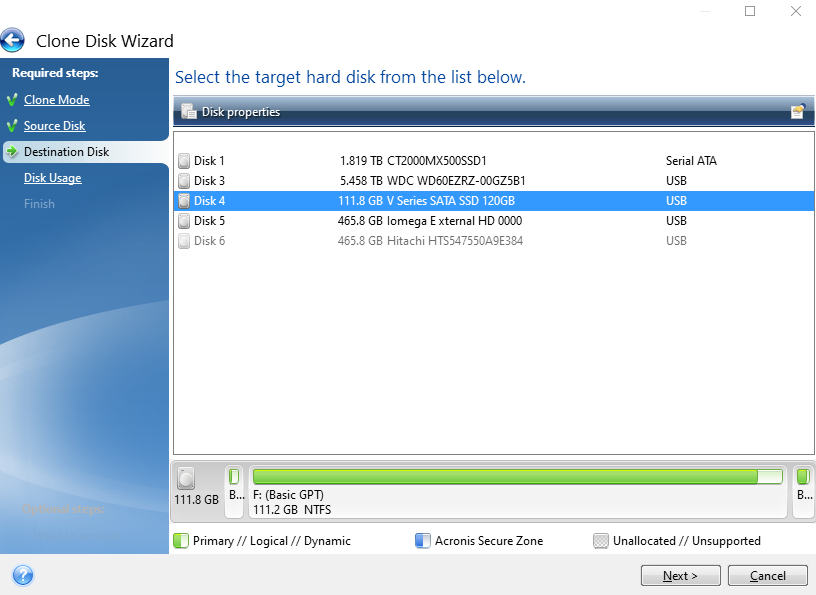

Select Destination Disk

Same as the previous step, make sure you are selecting the correct disk based on the ID that is printed on your physical disk.

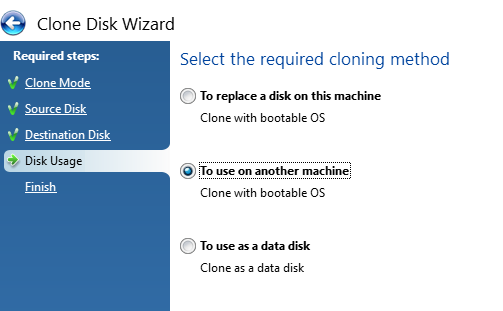

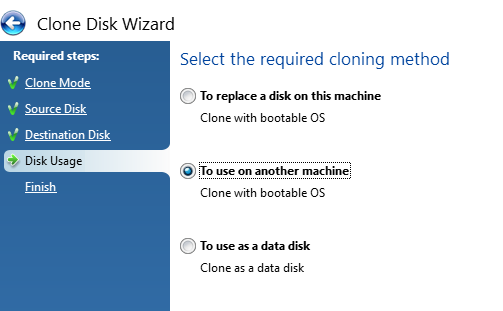

Select the Cloning Method

Next, select the cloning method you are doing. In my case both the old and new disks are connected via USB and are going to be used on another machine, not the machine that Acronis is installed and being run from. Generally speaking, when disk drives start failing, the machine they live in also becomes fairly unresponsive and/or just extremely sluggish. So it’s often easier to whip out the old disk drives and get them plugged into a decent computer that can do the grunt work.

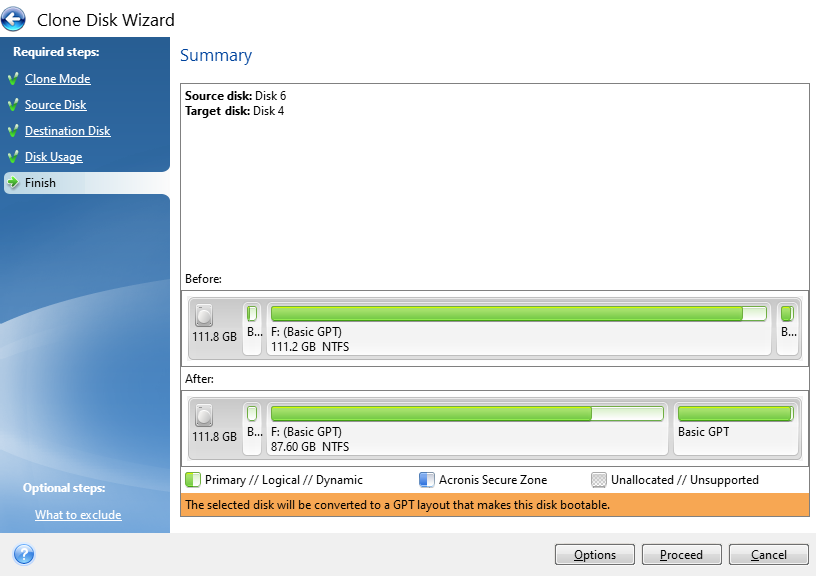

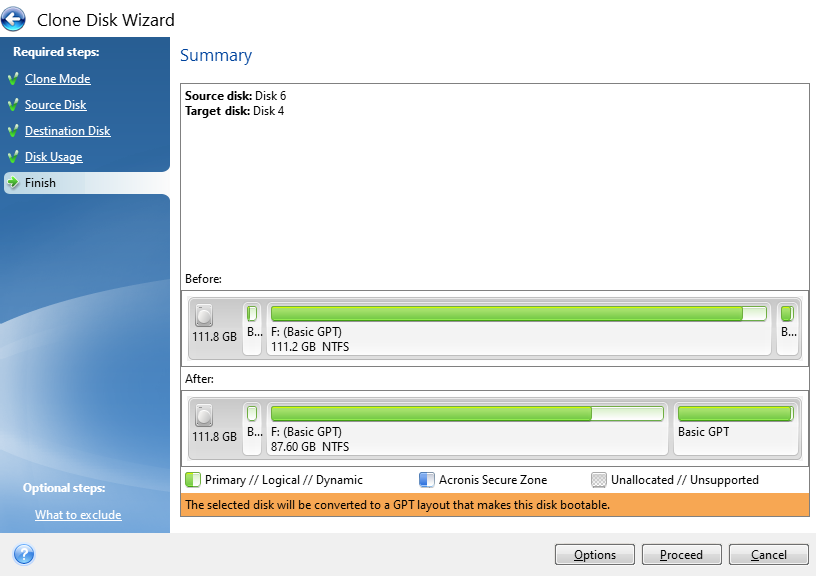

Confirm Settings and Start the Cloning Process

The final step is just confirming what your new disk will look like both at present and after the conversion process. In this example, this is an existing disk that is being flattened and re-purposed which is why the before info shows that the disk is full. If you are using a brand new disk, this will show up mainly empty as there will be nothing on it.

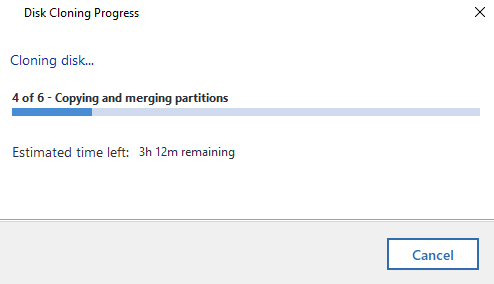

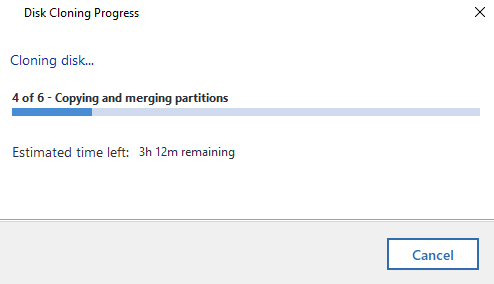

Now it’s just a case of sitting back and waiting. I’ve mentioned already Acronis is a slow piece of software for whatever reason. Just getting to this point probably took around 45 minutes believe it or not. The cloning process takes even longer. So make sure everything has plenty of juice to keep the power on throughout the process or you’ll end up losing a lot of time going through this process again.

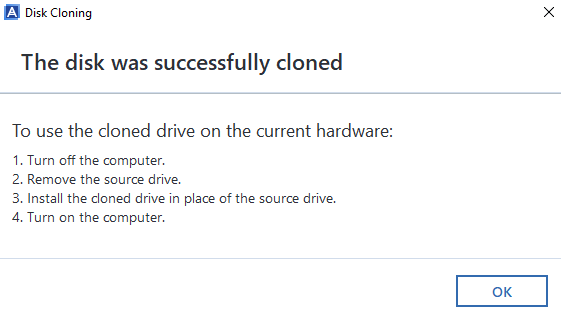

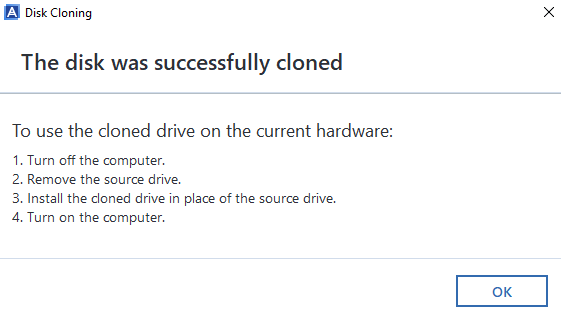

Disk Clone Successful

Woo! Finally, the cloning process is complete. Now it’s just a case of plugging the new disk drive back into the computer you took the old one out of and everything should be back to normal, working fast again etc. If you do get any problems at this point, then generally the clone will have failed, even though Acronis says that it has worked, e.g. a missing boot sector or some other form of corruption that is going to be near impossible to get to the bottom of.

Backups, Cloud, Redundancy Etc.

Ok, so we’ve run through the process of cloning hard disks either from HDD to HDD, HDD to SSD or SSD to SSD. Whatever your situation has been. What we haven’t covered off on this blog post yet is around backups, cloud and data redundancy etc. So let’s keep this topic really simple… your hard drives will fail at some point, so plan for it.

Use cloud service providers for storing your data. They have endless backups in place that are handled for you automatically without you ever thinking about it. If you only have your data on your main hard disk in your computer, there is a chance that when your disk fails, you will permanently lose your data. Do not go backing up important data to external hard drives; this is manual, error prone and still likely to result in some data loss when one or more of your hard drives fail.

This is a topic that I could go into for a long time, but will avoid doing so within this blog post. Instead, let’s just keep things simple and ensure your data is backed up to the cloud. And make sure you can easily recover from a failed hard disk and be back up and running within hours, not weeks.

Notes on Failing Disks

It’s important to note that if you are working with a failing disk, then you can pretty much throw all of the above out of the window. Give it a go, but it’ll probably fail. You are probably best off getting a new disk drive and installing Windows 10 from scratch, then you can copy over the files that you need (and back them up to the cloud!). It’s a bit painful doing this, but often it’s the only route when the disk drive has gone past the point of no return and is intermittently failing and doing random things. I’ve seen random things ranging from monitors flashing on/off, with the Windows desktop going blank then back again on repeat, through to disk recovery software failing when it tries to read one single bit of data on the disk, usually about 95% into the process. It’s always best not to get to this point. Another nuance I’ve seen is the BIOS not detecting the disk after an apparently successful clone, yet I could see the drive in Windows Device Manager when plugged into another machine, but it wasn’t showing up in Windows Disk Management. All very odd.

When things get to this point, it’s time to just give up on the old disk, get Windows installed on a brand new one and salvage what you can. Learn your lesson and don’t make the same mistake twice. There are advanced (and costly) recovery options available to do deep dive recovery of data, which on failing disks can still be a bit hit and miss, so you could be throwing good money after bad trying to recover this data. It all depends on how important that data is to you.

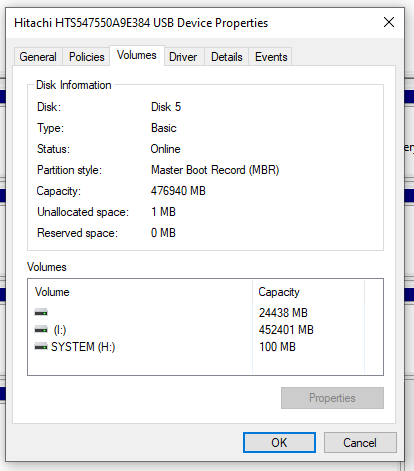

Check What Your Old Disk is Using – GPT or MBR

Something we haven’t gone into in much detail so far, but which is important to mention, is GPT VS MBR. Make sure you check what the old disk is configured as, or you’ll be repeating the process again, or be forced to use a commercial bit of software to change GPT to MBR or the other way round. To do this, within Windows Disk Management, simply Right Click on the old drive and select Properties, then click on the Volumes tab where this info will be displayed. In this case we can see that the old drive is using MBR, so it’s best to configure the new disk drive to use MBR as well, because the computer this came from could (and likely will) have certain limitations at the BIOS layer around whether MBR or GPT is supported (aka. UEFI Mode either Enabled or Disabled).

Note, Acronis is a pretty dumb and opinionated piece of software. It assumes that the Destination Disk Partition Mode (MBR VS GPT) is determined purely based on the computer that Acronis is running on. This is dumb, and quite frankly a fundamental flaw in the software in my opinion. In the vast majority of use cases in my experience, the Source Disk and Destination Disk are going to be plugged into an independent computer that is merely there to perform the copy and paste job.

MBR VS GPT is a Legacy VS Modern topic that is beyond the scope of this blog post. But what is important to note beyond the disk drive is that this comes down to the Motherboard’s BIOS Settings in relation to UEFI, which is either Enabled or Disabled. Even then, there can be many compatibility issues in this space.

Sometimes, though, it’s just more effort than it is worth trying to upgrade a computer. If the Old HDD is old, then all the other components are old and slow too. Sometimes it’s just more economic to throw away (recycle) the old and get a brand new computer and/or start with a fresh installation of Windows and go from there.

There are many bits of software that can help with cloning disks, including Clonezilla, Macrium Reflect Free, DriveImage XML, SuperDuper and many more. Many come with free basics and trial periods, but generally if you want to do something in full with an easy user experience, then you’re going to be using the commercial offerings.

After personally getting rather frustrated with Acronis, I decided to have a little rant on the Acronis Support Forums. Summary being “Unfortunately this is very unlikely to change for all users of Acronis True Image! This is because Acronis no longer support or develop this product.” And “The MVP community have been asking for this for some years but without any success.”. Not a very positive message, but at least an honest one from a senior member of the community given the lack of engagement from Acronis directly.

Summary

Hopefully this has been a helpful and detailed blog post for how to clone a hard disk drive (HDD) or solid state drive (SSD) and how you can handle this process for either failing disks or just upgrading disks to newer, faster and larger models.

Please take care when performing these actions and if you aren’t sure what you are doing, then leave this to the professionals. There are a lot of nuances with these types of actions which can be extremely destructive if you get this wrong. Be careful.

by Michael Cropper | May 2, 2021 | Amazon Web Services AWS, Client Friendly, Developer, Technical |

Getting Let’s Encrypt set up on Amazon Linux (aka. Amazon Linux 1) was straightforward; it was a breeze and the documentation wasn’t too bad. I don’t know why Let’s Encrypt support for Amazon Linux 2 just isn’t where it needs to be, given the size and scale of Amazon Linux 2 and the fact that Amazon Linux is now an unsupported operating system. It’s likely because Amazon would prefer you to use their AWS Certificate Manager instead, but what if you just want a Let’s Encrypt certificate set up with ease? Let’s take a look at how you get Let’s Encrypt set up on an AWS EC2 instance that is running Amazon Linux 2 as the operating system/AMI.

Assumptions

We’re assuming you’ve got Apache / Apache2 installed and set up already with at least one domain name. If you are using Nginx or other as your Web Server software then you’ll need to tweak the commands slightly.

How to Install Let’s Encrypt on Amazon Linux 2

Firstly, we need to get the Let’s Encrypt software, which is called Certbot, installed on your Amazon Linux 2 machine. For those of you looking for the quick answer, here’s how you install Let’s Encrypt on Amazon Linux 2 along with the dependencies;

yum search certbot

sudo amazon-linux-extras install epel

sudo yum install python2-certbot-apache

sudo yum install certbot-apache

sudo yum install mod_ssl python-certbot-apache

sudo certbot --apache -d yum-info.contradodigital.com

For those of you looking for a bit more information. There are a few fairly undocumented dependencies to get this working. So to get started you’ll want to install the dependencies for Let’s Encrypt on Amazon Linux 2 including;

- Epel, aka. The Extra Packages for Enterprise Linux, from the Amazon Linux Extras repository

- Python2 Certbot Apache using Yum

- Certbot Apache using Yum

- Mod_SSL, Python Certbot Apache using Yum

As it was a bit of a pain to get this configured, I’m fairly sure one of the above isn’t required, I just can’t recall which one that was.

How to Configure Let’s Encrypt on Amazon Linux 2 for a Domain

So now you’ve got Let’s Encrypt installed on Amazon Linux 2, it’s time to generate an SSL certificate for your domain that is hosted. For the purpose of simplicity we’re going to assume you’re running a very basic setup such as www.example.com/HelloWorld.html. There are other nuances you need to consider when you have a more complex setup that are outside of the scope of this blog post.

sudo certbot --apache -d yum-info.contradodigital.com

What you’ll notice in the above is that we’re using Certbot and telling it that we’ve got an Apache Web Server behind the scenes and that we want to generate an SSL certificate for the Domain (-d flag) yum-info.contradodigital.com.

Simply run that command and everything should magically work for you. Just follow the steps throughout.
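Once Certbot has finished, it’s worth confirming that the site is actually serving the new certificate and seeing how long it has left. Let’s Encrypt certificates are short-lived (90 days at the time of writing), so this is handy for a periodic check too. The snippet below is a minimal sketch using only the Python standard library; the function names are made up for this example and the hostname in the comment is a placeholder.

```python
import socket
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Days left before a certificate's notAfter timestamp.

    not_after uses the format returned by getpeercert(),
    for example "Jan 10 12:00:00 2030 GMT".
    """
    now = time.time() if now is None else now
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def fetch_not_after(hostname, port=443):
    """Connect to the site and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


# Example usage (needs network access; replace the placeholder hostname):
#   print(round(days_until_expiry(fetch_not_after("www.example.com"))), "days left")
```

Because `create_default_context()` verifies the certificate chain and hostname, the connection itself will fail with an SSL error if the certificate is not valid for your domain, which is a useful check in its own right.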

Summary

The above steps should help you get set up using Let’s Encrypt on Amazon Linux 2 without much fuss. Amazon Linux 2 really does feel like it has taken a step back in places; Amazon Linux 1 had more up to date software in places, and was easier to work with for things like Let’s Encrypt. But hey. We can only work with the tools we’ve got on the AWS platform. Please leave any comments for how you’ve got along with installing Let’s Encrypt and getting it all set up on Amazon Linux 2, the good, the bad and the ugly.

by Michael Cropper | Apr 29, 2021 | Amazon Web Services AWS, Client Friendly, Developer, Technical |

AWS. With great power comes great responsibility. AWS doesn’t make any assumptions about how you want to back up your resources for disaster recovery purposes. They even make it easy for you to accidentally delete everything when you have zero backups in place, if you haven’t configured your resources with termination protection. So, let’s think about backups and disaster recovery from the start and plan what is an acceptable level of risk for your own setup.

Risk Appetite Organisationally and Application-ally

OK, that’s a made up word, but you get the gist. You need to assess your appetite for risk, and only you can do this. You have to ask yourself questions and play out role plays from “What would happen if a single bit of important data got corrupted and couldn’t be recovered on the Live system?” all the way through to “What would happen if the infrastructure running the Live system got hit by a meteorite?”. Then add a twist into these scenarios, “What would happen if I noticed this issue within 10 minutes?” through to “What would happen if I only noticed this issue after 4 days?”.

All of these types of questions help you to assess what your risk appetite is and ultimately what this means for backing up your AWS infrastructure resources such as EC2 and RDS. We are talking specifically about backups and disaster recovery here, not highly available infrastructures to protect against failure. The two are important aspects, but not the same.

As you start to craft your backup strategy across the applications in your corporate environment, and tailor the backup plans to different categories of applications and systems such as Business Critical, Medium Risk, Low Risk etc., you can determine what this looks like in numbers: defaults for frequency of backups, backup retention policies and such like.

How to Backup EC2 and RDS Instances on AWS Using AWS Backup

To start with the more common services on AWS, let’s take a look at how we back these up and what types of configurations we have available to align our backup strategy with the risk appetite for the organisation and the application itself. The specific service we’re interested in for backing up EC2 and RDS instances on AWS is creatively called….. AWS Backup.

AWS Backup allows you to create Backup Plans which enable you to configure the backup schedule, the backup retention rules and the lifecycle rules for your backups. In addition, AWS Backup also has a restore feature allowing you to create a new AWS resource from a backup so that you can get the data back that you need and/or re-point things to the newly restored instance. Pretty cool really.

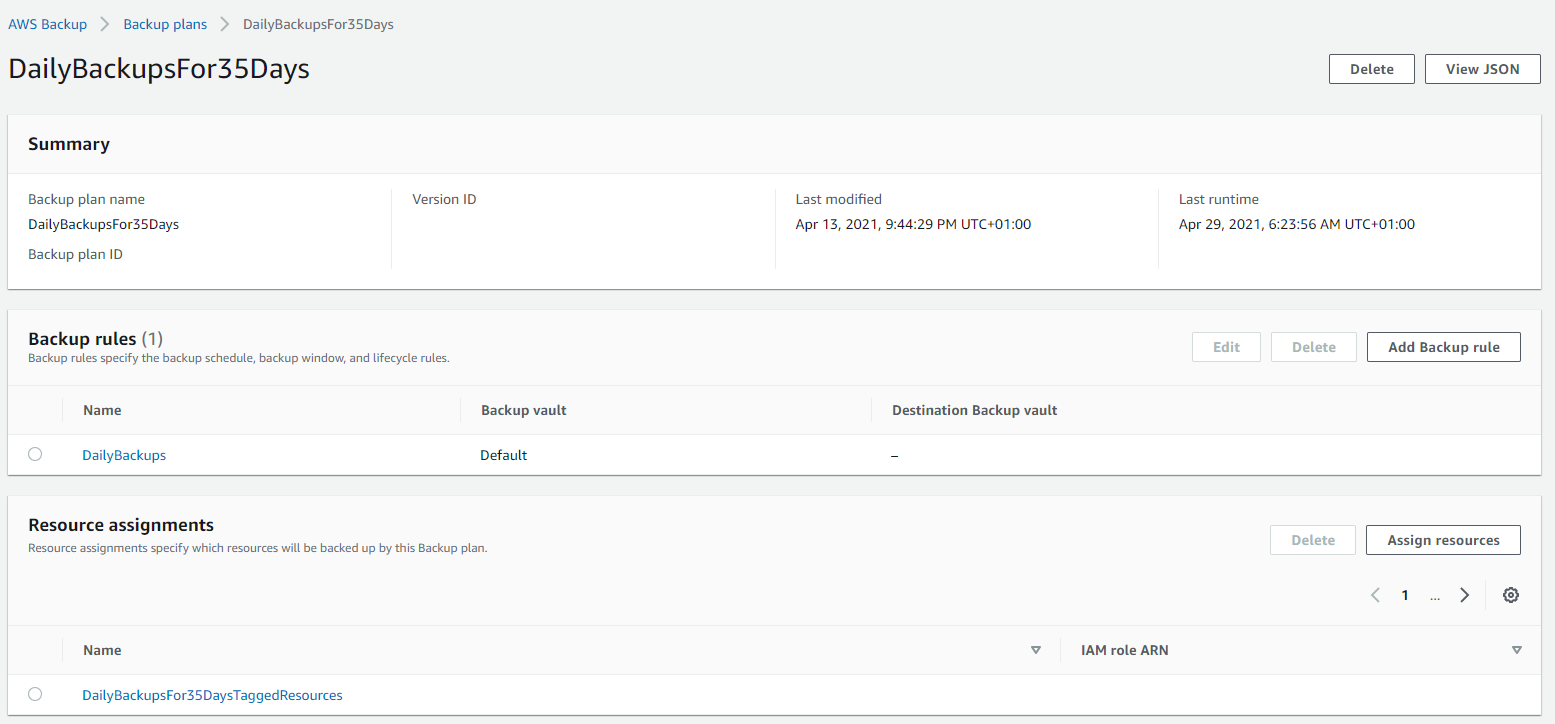

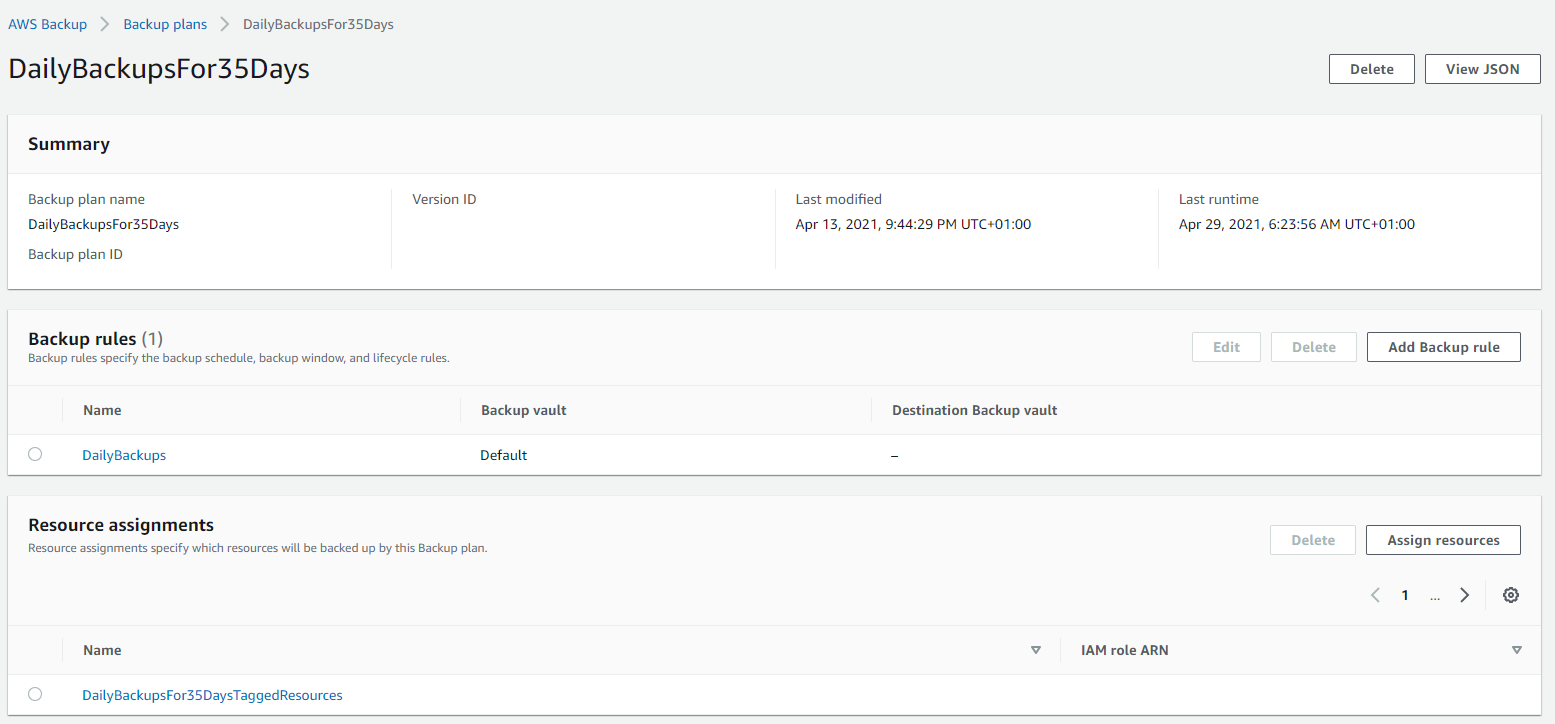

The first thing you want to do to get started is to create a Backup Plan. Within the creation process of your Backup Plan, you can configure all the items mentioned previously. Usually we’d walk through the step by step to do this, but really you just need to walk through the settings and select the options that suit your specific needs and risk appetite.

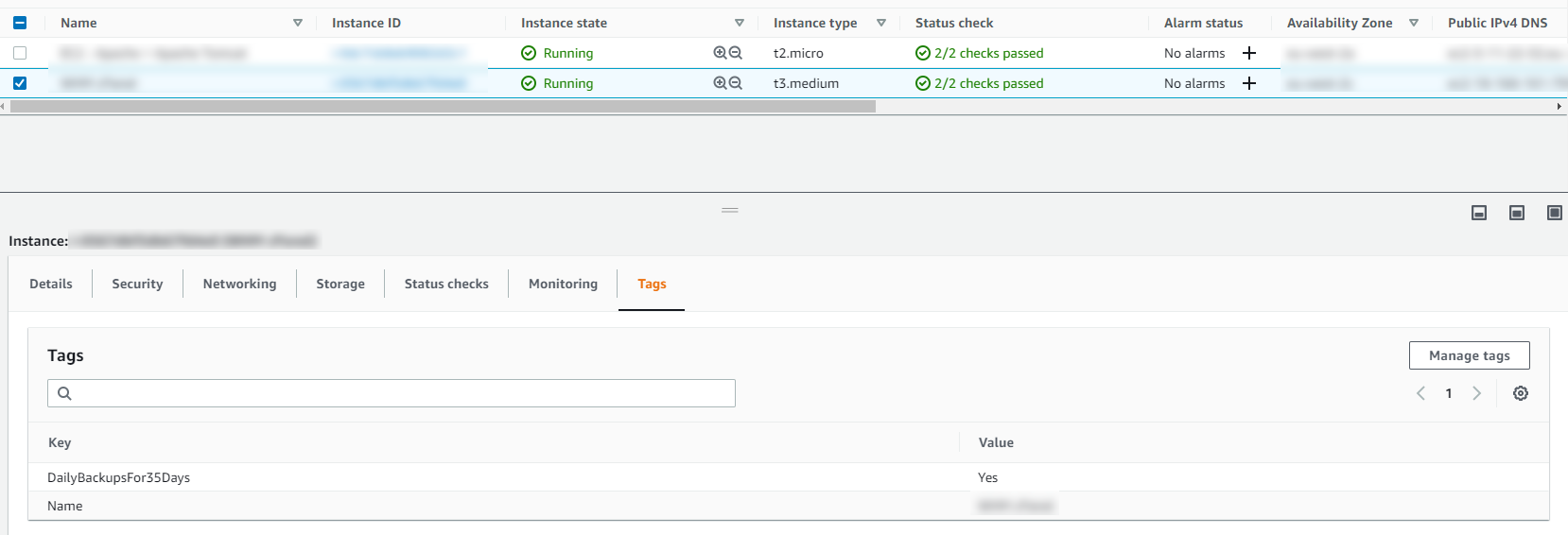

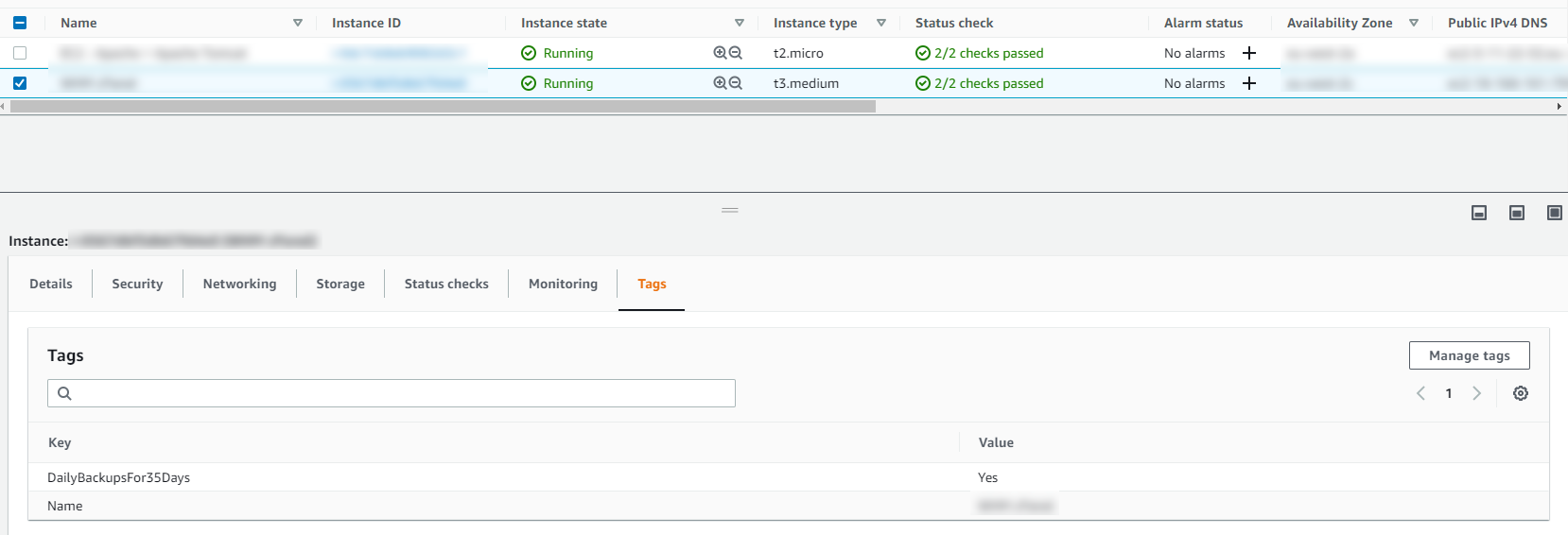

Below is a basic Backup Plan that is designed to run daily backups with a retention policy of 35 days, meaning we have 35 restoration points. You’ll also notice that instead of doing this for specific named resources, this is backing up all resources that have been tagged with a specific name.

Tagged Resources;

The tagged resource strategy using AWS Backup is an extremely handy way of managing backups as you can easily add and remove resources to a Backup Plan without ever touching the Backup Plan itself. Naturally you need a proper process in place to ensure things are being done in a standardised way so that you aren’t constantly hunting around trying to figure out what has been configured within AWS.
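If you prefer to keep this kind of configuration in code rather than clicking through the console, the same daily plan with 35 days retention and a tag based selection can be sketched with boto3, the AWS SDK for Python. Treat this as a rough sketch under assumptions: the plan name, vault name, tag key/value, role ARN and the 05:00 UTC schedule are all made up for illustration, and the final function needs boto3 installed plus valid AWS credentials.

```python
def build_backup_plan(name, vault, retention_days=35):
    """Request body for a daily backup rule with a fixed retention period."""
    return {
        "BackupPlanName": name,
        "Rules": [
            {
                "RuleName": "daily",
                "TargetBackupVaultName": vault,
                # AWS cron syntax; 05:00 UTC every day is an arbitrary choice.
                "ScheduleExpression": "cron(0 5 ? * * *)",
                "Lifecycle": {"DeleteAfterDays": retention_days},
            }
        ],
    }


def build_tag_selection(role_arn, tag_key, tag_value):
    """Request body selecting every resource that carries the given tag."""
    return {
        "SelectionName": f"tagged-{tag_key}-{tag_value}",
        "IamRoleArn": role_arn,
        "ListOfTags": [
            {
                "ConditionType": "STRINGEQUALS",
                "ConditionKey": tag_key,
                "ConditionValue": tag_value,
            }
        ],
    }


def create_plan_with_tag_selection(plan, selection):
    """Send both requests to AWS Backup (needs boto3 and AWS credentials)."""
    import boto3  # imported here so the builders above work without it

    client = boto3.client("backup")
    plan_id = client.create_backup_plan(BackupPlan=plan)["BackupPlanId"]
    client.create_backup_selection(BackupPlanId=plan_id, BackupSelection=selection)
    return plan_id


if __name__ == "__main__":
    # Hypothetical names; nothing is sent to AWS when this file is run directly.
    plan = build_backup_plan("daily-35-days", "Default")
    selection = build_tag_selection(
        "arn:aws:iam::123456789012:role/example-backup-role", "Backup", "Daily35"
    )
    print(plan["Rules"][0]["Lifecycle"], selection["ListOfTags"][0])
```

The nice part of the tag approach shows up here too: the plan and selection are created once, and from then on adding a resource to the backups is just a case of giving it the agreed tag.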

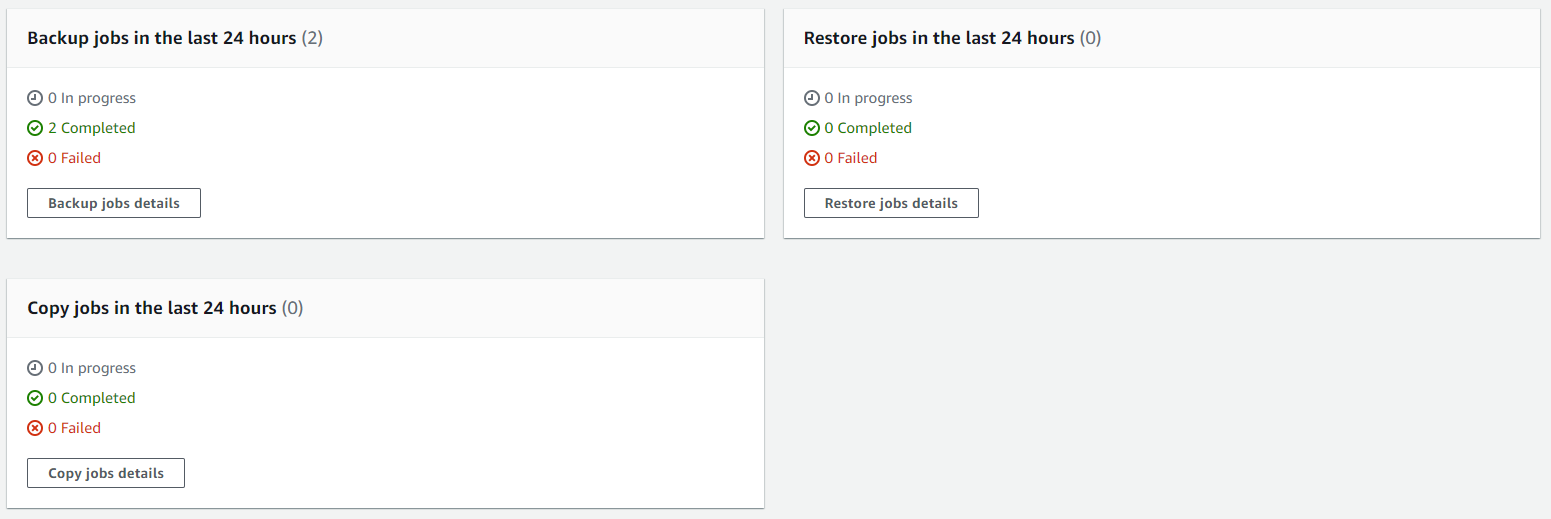

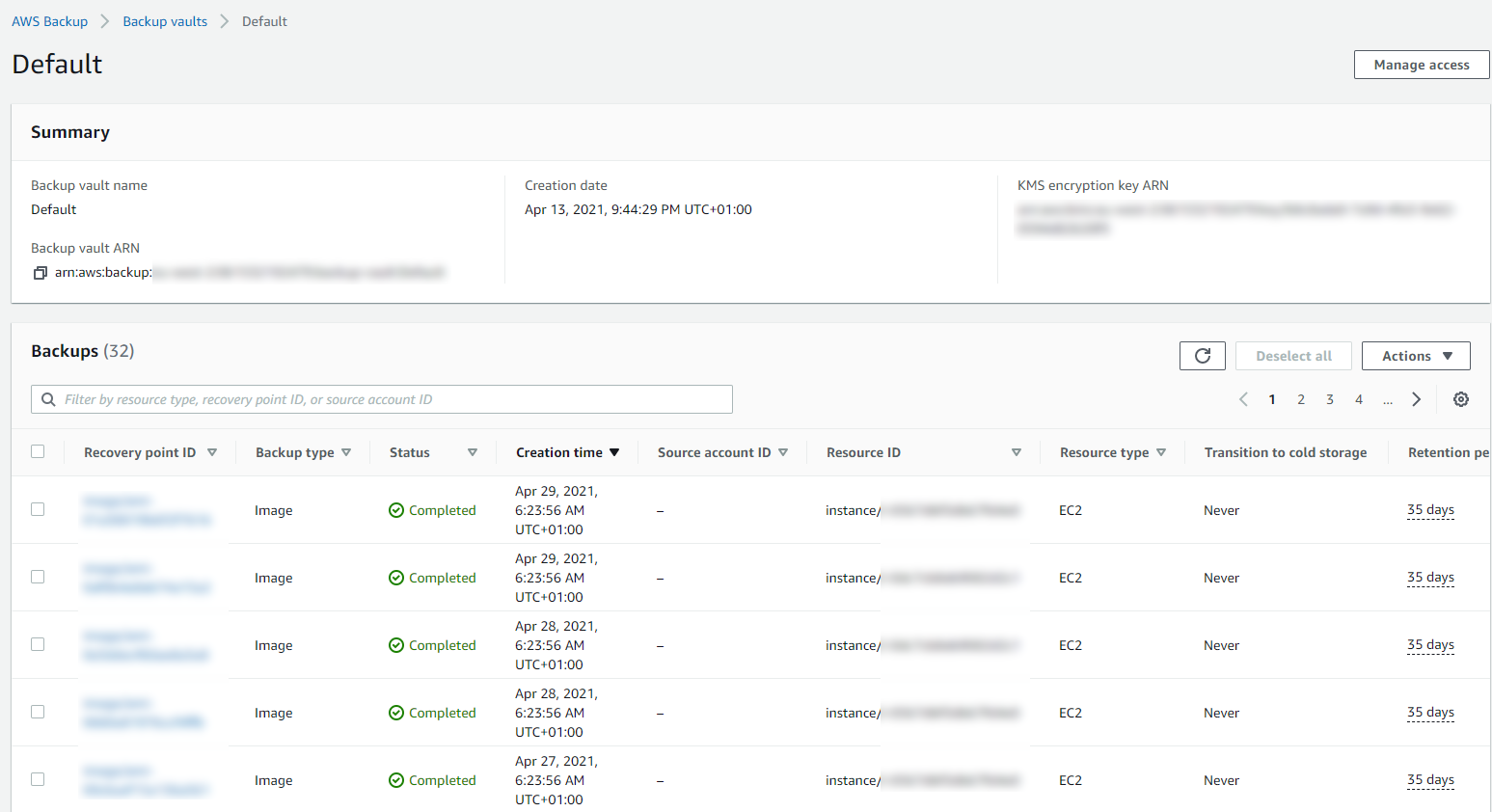

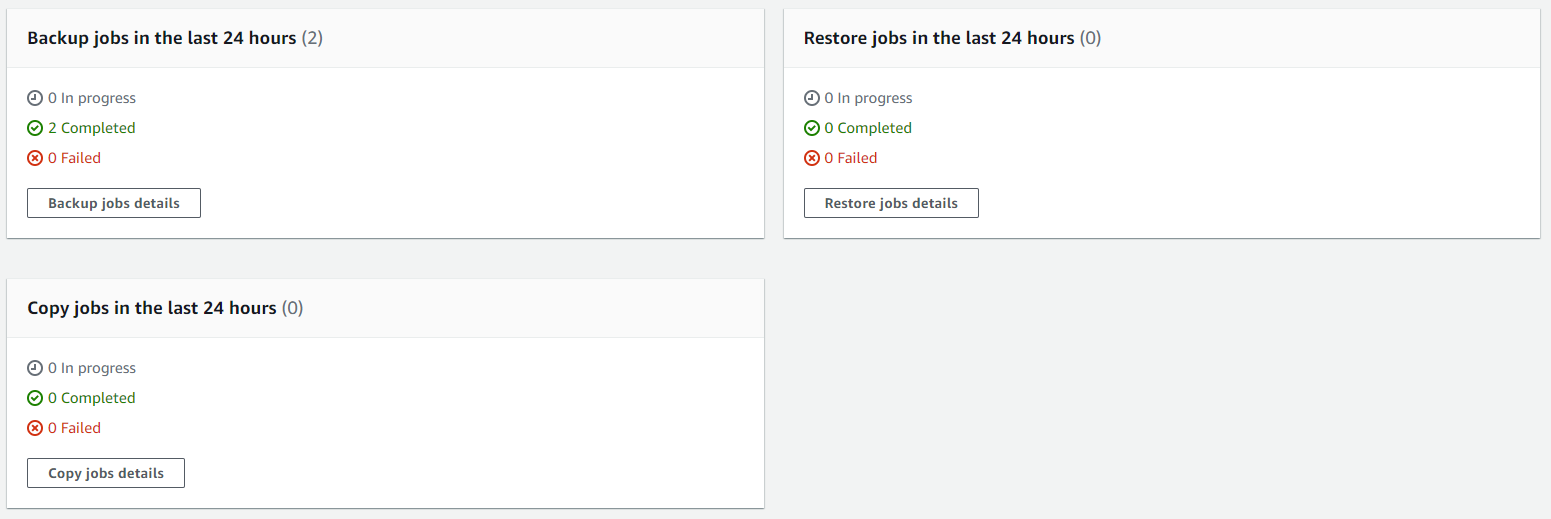

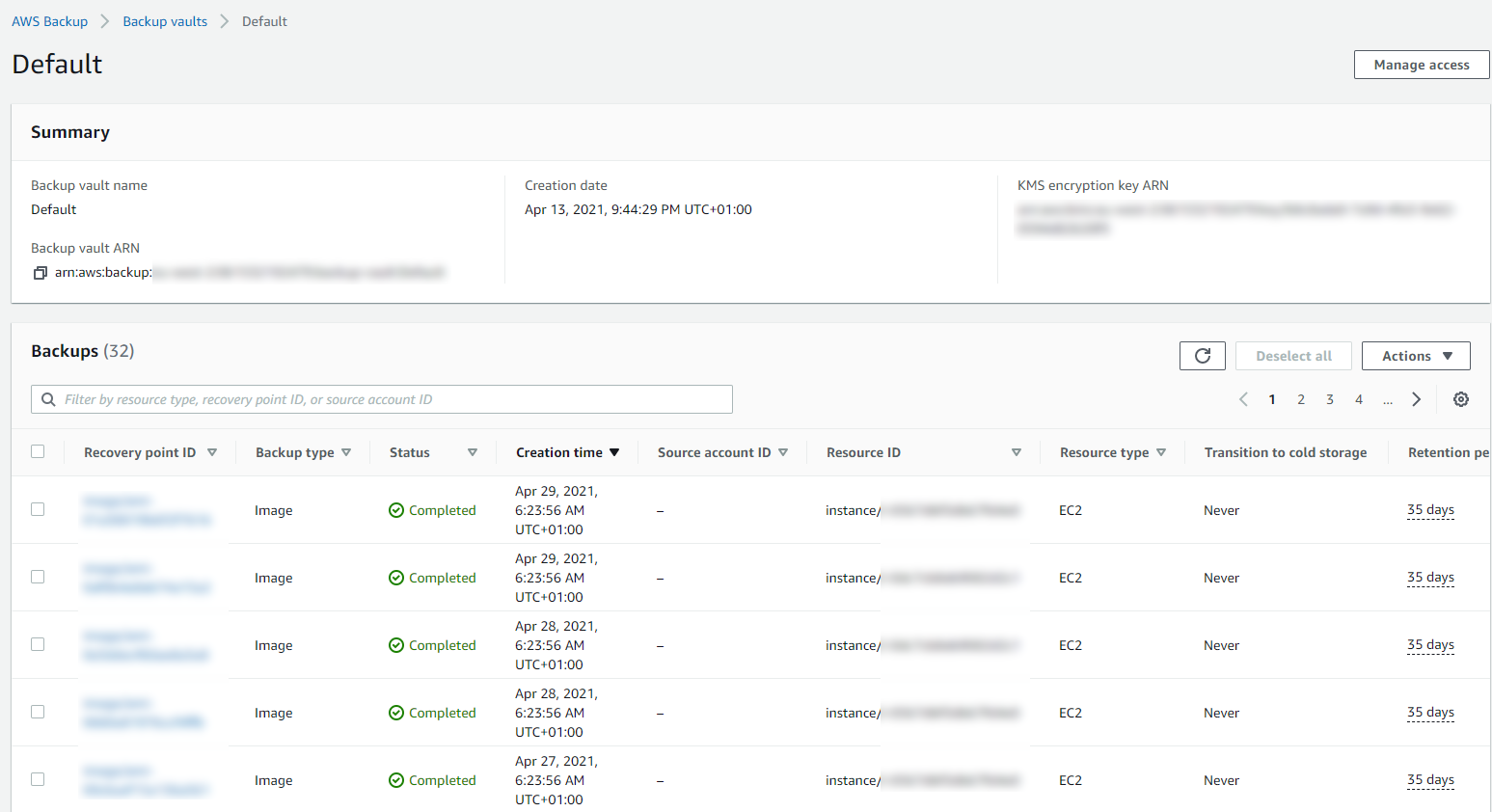

Running Backups

Once you have your Backup Plans in place, you can then start to see easily the backups that have been running, and most importantly if they have been successful or if they have failed.

Then you can drill into the details and see all of your restoration points within your Backup Vault and ultimately this is where you would restore your backups from if you ever need to do that;

Summary

Hopefully that’s been a useful whistle stop tour of how to back up your AWS infrastructure resources such as EC2 and RDS on AWS using AWS Backup. The best advice I can give when you are implementing this in the real world is that you need to truly understand your IT landscape and create a backup strategy that is going to work for your business. Once you have this understood, clicking the right buttons within AWS Backup becomes a breeze.

Don’t do it the other way round, just creating random backups that don’t align with the business goals and risk appetites. You will end up in a world of pain. No-one wants to go reporting to the CEO….. IT: “Oh we only have backups for 7 days.” ….. CEO: “What?!?!?! We are legally required to keep records for 6 years! WTF!”. You get the gist.

This can be quite an enormous topic to cover, so here’s some further reading if you want to know more;

by Michael Cropper | Apr 27, 2021 | Client Friendly, Developer, Technical |

So this isn’t quite as straightforward as it probably should be, and the documentation from AWS is, as usual, not great. So let’s cut through the nonsense and take a look at what you need to do so that you can quickly and easily get your DNS Zone Files and DNS Records migrated.

Assess Your Current DNS Provider, Zone Files, Domains and Nameserver Configurations

The first thing you want to do before you start any kind of migration of your DNS over to AWS Route53 is to plan. Plan, plan and plan some more. Some of the nuances I came across with a recent DNS migration piece of work from DNS Provider X to AWS Route53 included niggles such as vanity nameservers. The old DNS provider had things configured to ns1.example.com and ns2.example.com, then domain1.com and domain2.com pointed their nameservers to ns1.example.com and ns2.example.com, which was quite a nice touch. This doesn’t quite work on AWS Route53 and I’ll explain that in a bit more detail in a moment. Another niggle that we came across, and one you need to plan for properly, is making sure you have absolutely everything documented, and documented correctly. This needs to include, for every domain at an absolute minimum, things such as;

- Domain name

- Sub-Domains

- Registrar (inc. login details, and any Two Factor Authentication 2FA steps required)

- Accurate Zone File

The vast majority of people just have a Live version of their DNS Zone Files, which in itself is risky because if you had an issue with the DNS Provider X and you had no backup of the files, you could be in for a whole world of pain trying to re-build things manually in the event of a critical failure.

How AWS Route53 Manages Hosted Zones

So back to the point I mentioned earlier around vanity nameservers and why this doesn’t quite work in the way the old DNS Provider X worked. When you create a new Hosted Zone within AWS Route53, Amazon automatically assigns 4x random nameservers of which you can see an example below;

- ns-63.awsdns-07.com

- ns-1037.awsdns-01.org

- ns-1779.awsdns-30.co.uk

- ns-726.awsdns-26.net

What you will instantly notice here is that there are a lot of numbers in those hostnames, which should give you an idea of the complexity of the nameserver infrastructure behind the scenes on the Route53 service. What this also means is that because these nameservers are automatically generated, you can’t by default configure two Hosted Zones to use the exact same nameservers to get a vanity nameserver setup similar to the one explained earlier.

The reality is that while this approach is fairly common for complex setups, it isn’t an issue for the majority of standard setups. If you want to get vanity nameservers set up on AWS for a single domain, i.e. ns1.domain1.com and ns1.domain2.com etc. then you can do this if you wish.

Export Zone Files from your Old DNS Provider

The first step of this process is to export your Zone Files from your old DNS provider. What you will find from this process is that every provider will export these slightly differently, and the export most likely won’t be in the format that Route53 needs when you import the Zone Files.

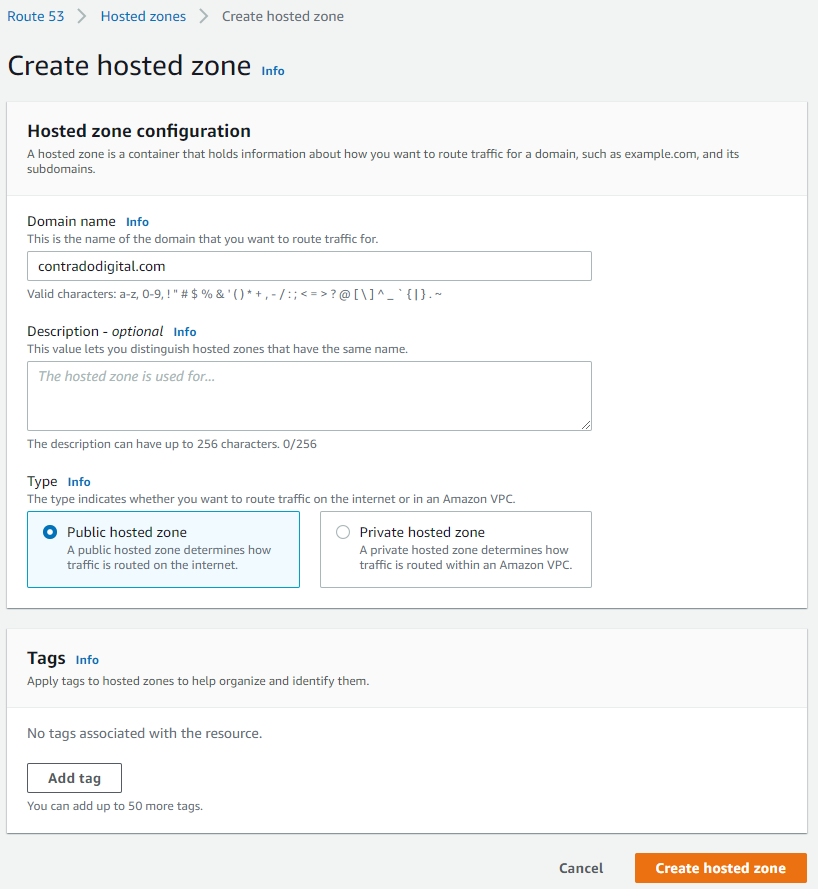

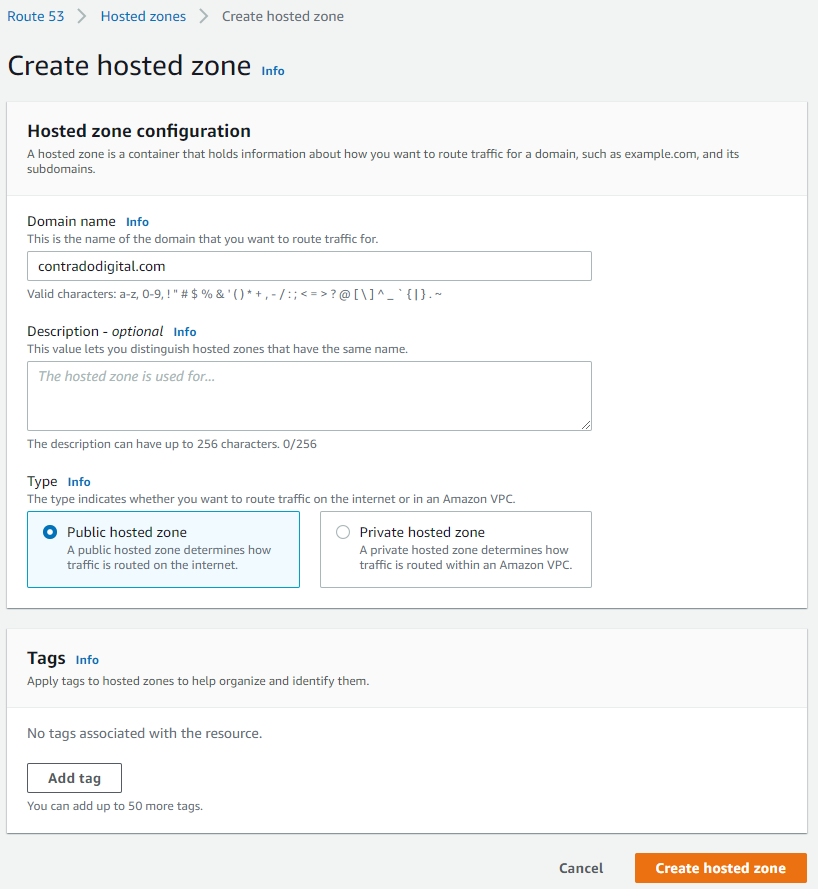

Create a Hosted Zone in Route53

This step is straightforward, just click the button.

Importing Zone Files to your Hosted Zone

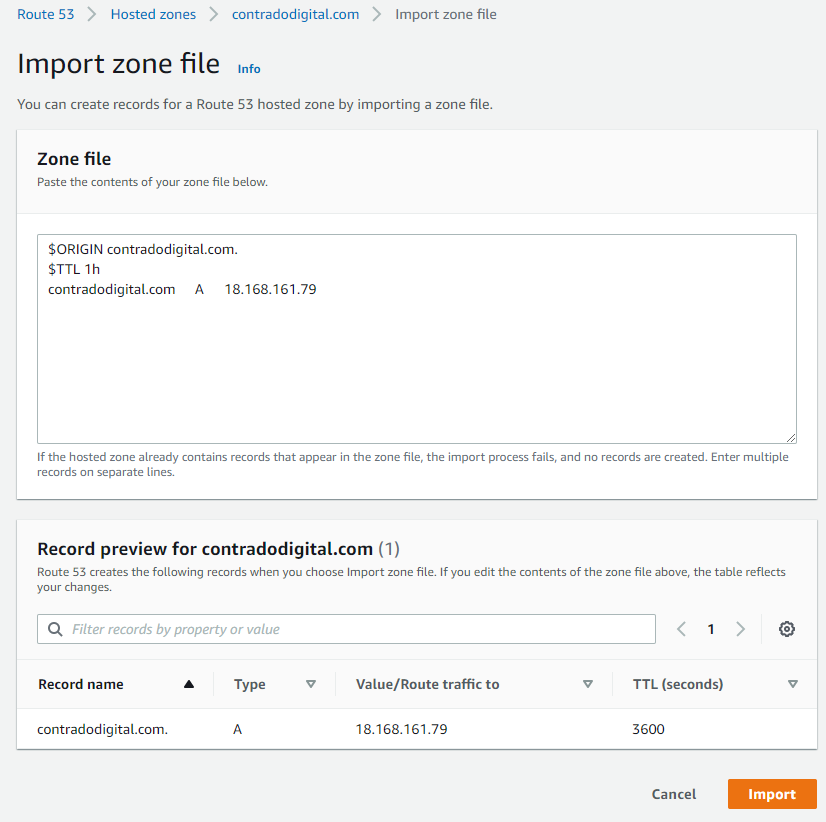

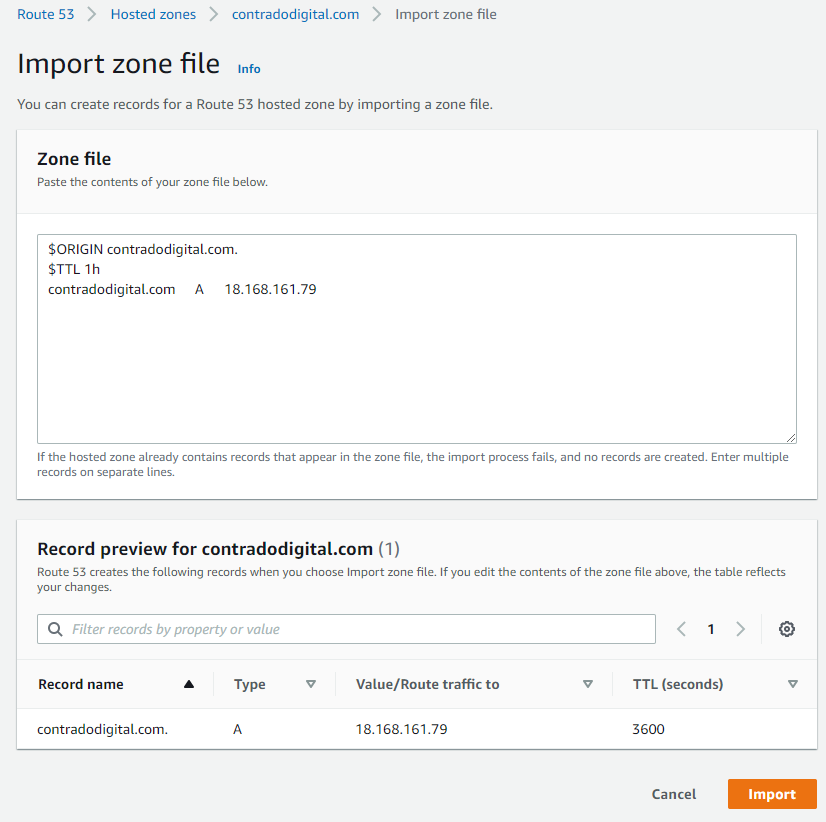

As such, it’s time to prepare your Zone Files to be able to be imported into Route53 successfully. The format you need for your zone file import is as follows;

$ORIGIN contradodigital.com.

$TTL 1h

contradodigital.com A 18.168.161.79

Notice the couple of additional lines at the top ($ORIGIN and $TTL) that you need to add in, which likely won’t be included in the export from your old provider. The above is just a very basic set of DNS entries. The reality is you will likely have 10 – 50+ DNS entries per domain depending on the complexity of your setup. One thing to keep an eye out for is that you may find certain record types don’t quite import seamlessly. Just a few niggles that I came across doing this included;

- MX records required a priority value (10 in our case) to be included, i.e. contradodigital.com MX 10 contradodigital-com.mail.protection.outlook.com

- DKIM (TXT) and SPF (TXT) records had to be re-generated and imported manually as the format just didn’t quite work for the automatic import for some reason.

And I’m sure you’ll come across a few issues along the way that I haven’t mentioned here.
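If you have a lot of records to prepare, it can help to generate the import text rather than hand-editing it. Below is a minimal sketch in plain Java that mirrors the format shown above, with the $ORIGIN and $TTL lines first and the MX priority included. The class and method names are made up for this example, and it only assembles text; I haven’t run its output through the Route53 importer, so treat it as a starting point rather than a validated tool.

```java
import java.util.Arrays;
import java.util.List;

public class ZoneFileSketch {

    /** Formats a simple record line: name, type, value. */
    static String record(String name, String type, String value) {
        return name + " " + type + " " + value;
    }

    /** MX records carry a numeric priority before the mail server hostname. */
    static String mxRecord(String name, int priority, String mailHost) {
        return name + " MX " + priority + " " + mailHost;
    }

    /** Builds the import text: $ORIGIN and $TTL first, then one record per line. */
    static String build(String origin, String ttl, List<String> records) {
        StringBuilder sb = new StringBuilder();
        sb.append("$ORIGIN ").append(origin).append('\n');
        sb.append("$TTL ").append(ttl).append('\n');
        for (String line : records) {
            sb.append(line).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Values taken from the examples in this post.
        List<String> records = Arrays.asList(
                record("contradodigital.com", "A", "18.168.161.79"),
                mxRecord("contradodigital.com", 10,
                        "contradodigital-com.mail.protection.outlook.com"));

        System.out.print(build("contradodigital.com.", "1h", records));
    }
}
```

Record types such as the DKIM and SPF TXT records mentioned above are deliberately left out, as those were the ones that needed manual attention.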

Summary

Hopefully this guide on how to import Zone Files into AWS Route53 helps to clarify some of the niggles around using the Zone File Import feature. To reiterate around this process when you are doing this in a real situation, make sure you plan this properly, have clear checklists and processes that you can methodically work through to ensure things are working as you do them. These types of changes can have a significant disruption to live systems if you don’t implement these things correctly.

by Michael Cropper | Apr 27, 2021 | Client Friendly, Developer, Technical |

We’ve got a lot of complex topics to cover here, so for the sake of simplicity we’re only going to touch on the really high level basics of these concepts to help you understand how all these different pieces of the puzzle are connected together. When you’re first getting started in the world of IT, it’s often a bit of a puzzle how all these things are plugged together under the hood which can cause a lot of confusion. By knowing how things are plugged together, i.e. how the internet works, you will have a significantly better chance of working with existing setups, debugging problems fast, and most importantly building new solutions to bring your creative ideas to life.

Firstly, let’s get some basic terminology understood;

- Registrar = This is where you purchased your domain name from, i.e. example.com

- Nameservers = This is where the authority starts for your domain, i.e. it’s the equivalent of “tell me who I need to talk to who can point me in the right direction to get to where I want to go”. It’s the authority on the subject whose opinion on the matter is #1.

- DNS = This is the gate keeper to determine how traffic into your domain flows to where it needs to go. Think of your DNS like Heimdall from the Thor movies. You configure your nameservers to act in a way that you want, i.e. requests from www.example.com, should ultimately route to server IP address, 1.2.3.4, so that you don’t need to go remembering a bunch of IP addresses like a robot – or in the Heimdall world, “Heimdall…” as Thor screams in the movies, and he is magically transported from Earth to his home world of Asgard. Likewise, if Loki wants to visit a different planet, he just asks Heimdall to send him there and the magic happens. DNS can appear like magic at times, but it’s actually really simple once you understand it. DNS is a hard concept to explain simply, we’ll do another blog post on this topic in more detail another time. Hopefully this basic comparison helps you to at least grasp the topic at a high level.

- Servers = This is where things get fairly messy. This could be a physical piece of hardware that you can touch and feel, or could be a virtualised system, or a virtualised system within a virtualised system. There are multiple layers of virtualisation when you get down to this level, although that’s not that important for the purpose of this blog post. Ultimately, all we care about is that the traffic from www.example.com, or something-else.example.com, gets to where it needs to when someone requests this in their web browser.

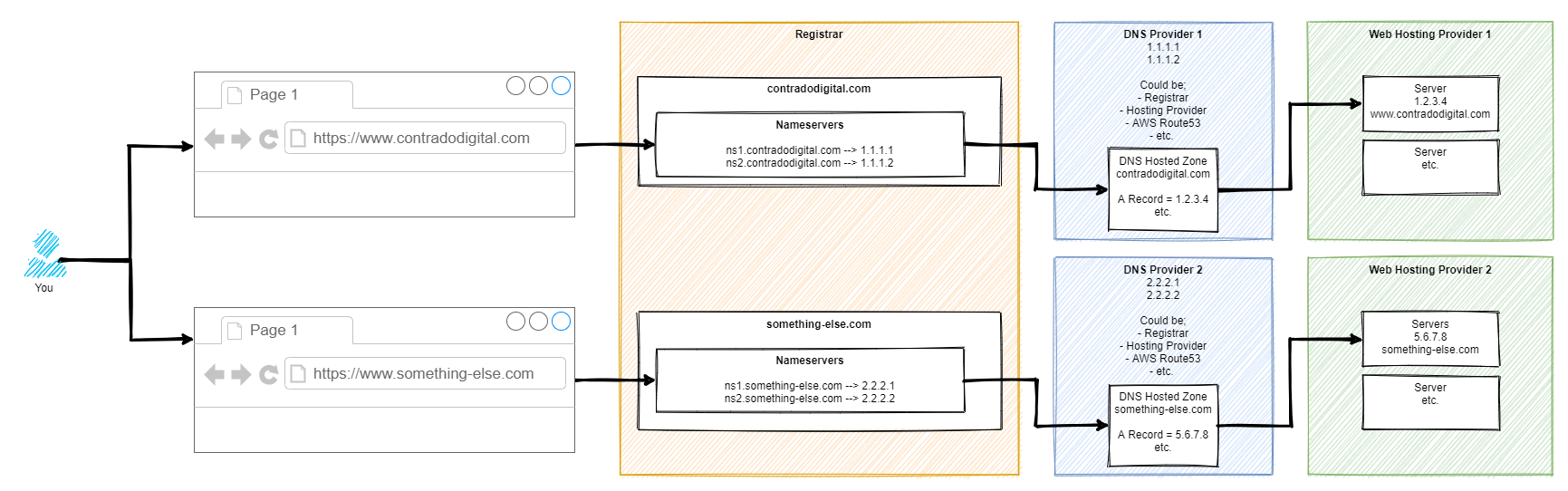

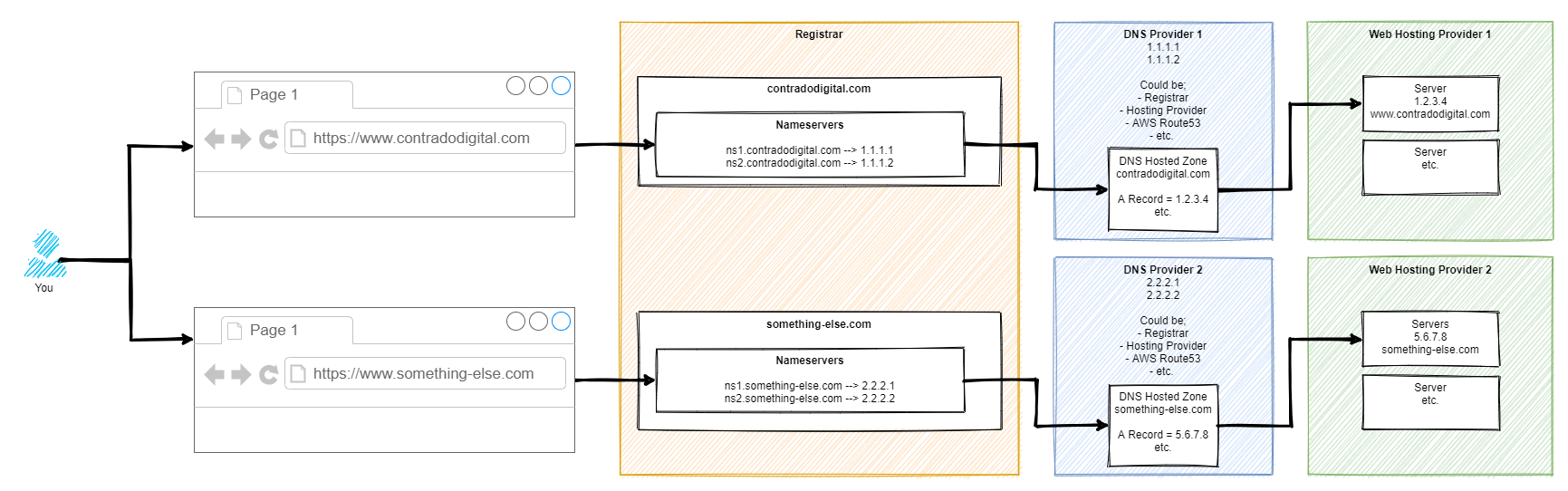

As mentioned, this is a difficult concept to explain simply in a blog post as there are so many different considerations that need to be made. But hey, let’s give it a go, with a basic diagram. There are elements of this diagram that have been simplified to help you understand how the different bits fit together.

So here’s how it works step by step. For those of you who are more technical than this blog post is aimed at, yes there are a few steps in between things that have been cut out for simplicity.

Step 1 – Type Website Address into Web Browser

This step is fairly basic so we’ll skip over this one.

Step 2 – Web Browser Asks for Authoritative Nameserver for Website

This part is very complex in the background, so we’re not going to delve into these details. For the purpose of simplicity, ultimately your web browser says “Give me the name servers for contradodigital.com”, and ‘the internet’ responds with, “Hey, sure, this is what you’re looking for – ns1.contradodigital.com and ns2.contradodigital.com”.

As with all hostnames, they ultimately have an IP address behind them, so this is what then forwards the request onto the next step.

Step 3 – DNS Provider with Hosted Zones

A Hosted Zone is simply something such as contradodigital.com, or something-else.com. Within a Hosted Zone, you have different types of DNS Records such as A, AAAA, CNAME, MX, TXT, etc. (that last one isn’t an actual record, just to confirm 🙂 ). Each of these record types does different things and is required for different reasons. We’re not going to be covering this today, so for simplicity, the A Record is designed to forward the request to an IP Address.

So your DNS Provider translates your request for www.contradodigital.com into an IP address where you are then forwarded.
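If it helps to think of this in code, a Hosted Zone’s A Records behave a bit like a lookup table from hostname to IP address. The sketch below is a toy model in plain Java with made-up class and method names. It is nothing like a real DNS server (no caching, no TTLs, no other record types), it just illustrates the translation step.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

public class HostedZoneLookup {

    // Hostname -> IP address, i.e. a very simplified view of A Records.
    private final Map<String, String> aRecords = new HashMap<>();

    /** Adds an A Record to this toy Hosted Zone. */
    void addARecord(String hostname, String ipAddress) {
        aRecords.put(normalise(hostname), ipAddress);
    }

    /** Translates a hostname into an IP address, if an A Record exists for it. */
    Optional<String> resolve(String hostname) {
        return Optional.ofNullable(aRecords.get(normalise(hostname)));
    }

    /** Hostnames are case-insensitive and may be written with a trailing dot. */
    private static String normalise(String hostname) {
        String h = hostname.trim().toLowerCase(Locale.ROOT);
        return h.endsWith(".") ? h.substring(0, h.length() - 1) : h;
    }

    public static void main(String[] args) {
        HostedZoneLookup zone = new HostedZoneLookup();
        zone.addARecord("www.example.com", "1.2.3.4");

        System.out.println(zone.resolve("www.example.com").orElse("no A Record found"));
        System.out.println(zone.resolve("missing.example.com").orElse("no A Record found"));
    }
}
```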

Step 4 – Web Hosting Provider Serves Content

Finally, once your web browser has got to where it needs to get to, it starts to download all the content you’ve asked for from the server on your web hosting provider to your web browser so you can visualise things.

This part of the diagram is so overly simplified, but it is fine for what we are discussing. The reality of this section is that this could quite easily be 10-20 layers deep of ‘things’ when you start to get into the low level detail. But that’s for another time.

If you want to get a feel for how complex just part of this area can be, we did a blog post recently explaining how Your Container Bone is Connected to Your Type 2 Hypervisor Bone.

Summary

Hopefully this blog post has given you a good understanding of how your Registrar, Nameservers, DNS and Servers are connected together under the hood. When you truly understand this simple approach, play around with a system that isn’t going to break any live environment, so you can start to test different types of configurations along the way to see how they behave. If you don’t know what you are doing, do not play around with these things on a Live system as you can do some real damage if you get things wrong which can result in your services being offline for a significant period of time.

by Michael Cropper | Feb 25, 2021 | Client Friendly, Developer, Software Development, Technical |

In this guide we’re going to look at how to set up Selenium using Java and Apache NetBeans as an end to end guide so you can be up and running in no time. If you’ve not set this up before, there are a few nuances throughout the end to end process and other areas where the official documentation isn’t the best at times. So hopefully this guide can clear up some of the questions you likely have so you can start working on using Selenium to run automated browser based testing for your web applications.

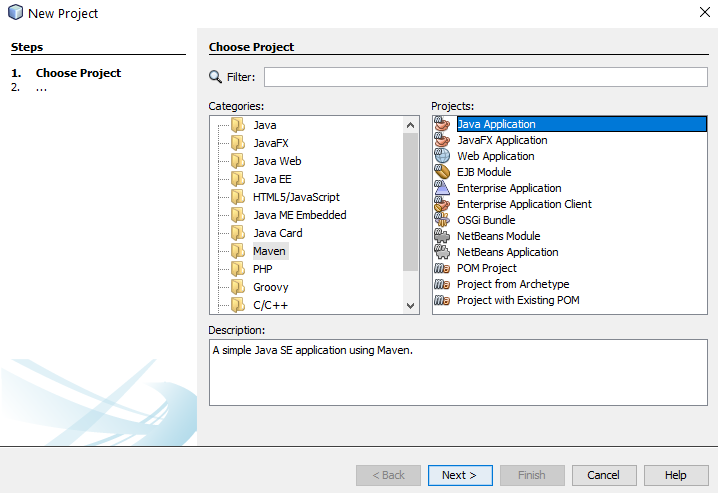

Create a New Maven Java Application Project

Firstly we’re going to use Maven to simplify the installation process. If you aren’t familiar with Maven, it is essentially a package manager that allows you to easily import your project dependencies without having to manually download JAR files and add them to your libraries. You can manually install the JAR files if you like, it’s just a bit more time consuming to find all of the dependencies that you are going to need.

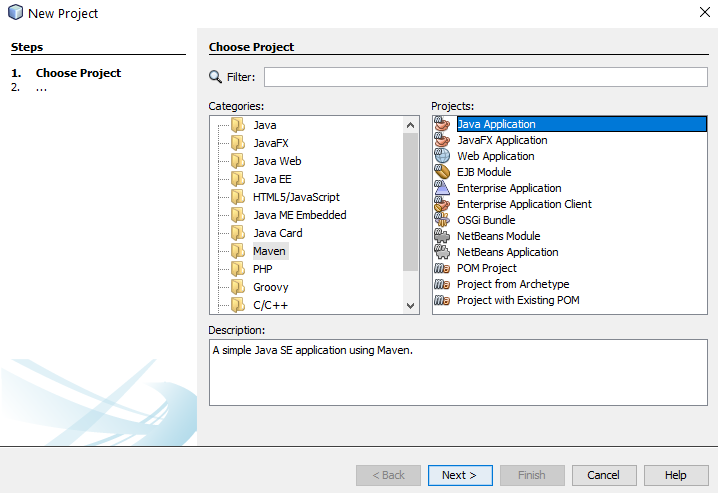

To create a new Maven project, click File > New Project and select Maven then Java Application;

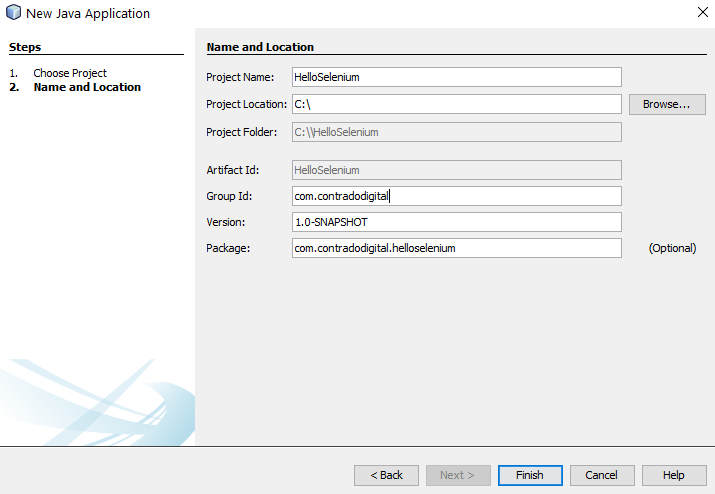

Configure Maven Project and Location

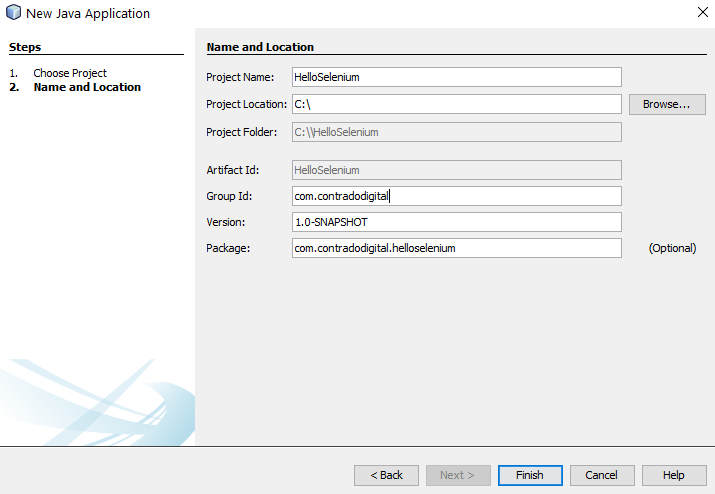

Next you need to configure some details for your project. For the purpose of simplicity we’re going to call this project HelloSelenium. For the Group ID field, enter the canonical name of your package, which you generally want to set to your primary domain name in reverse, i.e. com.contradodigital. This will then automatically populate the Package name at the bottom to be com.contradodigital.helloselenium. This is industry best practice for naming your packages so that they have a unique reference.
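The reverse domain convention is easy enough to do in your head, but here it is as a tiny Java sketch for clarity. The class and method names are invented for this example, and it deliberately ignores edge cases such as hyphens in domain names, which aren’t valid in Java package names.

```java
import java.util.Locale;

public class PackageNaming {

    /** Reverses the labels of a domain name, e.g. contradodigital.com -> com.contradodigital */
    static String groupIdFromDomain(String domain) {
        String[] labels = domain.toLowerCase(Locale.ROOT).split("\\.");
        StringBuilder sb = new StringBuilder();
        for (int i = labels.length - 1; i >= 0; i--) {
            sb.append(labels[i]);
            if (i > 0) {
                sb.append('.');
            }
        }
        return sb.toString();
    }

    /** Appends the lower-cased project name to the Group ID to form the package name. */
    static String packageName(String domain, String projectName) {
        return groupIdFromDomain(domain) + "." + projectName.toLowerCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        System.out.println(groupIdFromDomain("contradodigital.com"));            // com.contradodigital
        System.out.println(packageName("contradodigital.com", "HelloSelenium")); // com.contradodigital.helloselenium
    }
}
```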

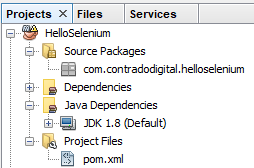

Open Your Pom.xml File

Next we need to configure your pom.xml file which is used for Maven projects to manage your dependencies. Out of the box within NetBeans, when you create a Maven project, a very basic pom.xml file is created for you. Which sounds like it would be handy, but it doesn’t contain a great deal of information so can be more confusing than helpful for those less familiar with Maven.

When you open up the default pom.xml file, it will look similar to the following;

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.contradodigital</groupId>

<artifactId>HelloSelenium</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>jar</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<name>HelloSelenium</name>

</project>

This looks like a good starting point, but it is a long way from letting you simply add in the relevant dependencies and get this working. So let’s look next at what your pom.xml file needs to look like to get you up and running with Maven.

Configure Your Pom.xml File

Before we jump into what your pom.xml file needs to look like, let’s first take a look to see what the required primary libraries are that we need to get Selenium up and running. There are a fairly small number, but behind the scenes there are quite a few dependencies too which aren’t always obvious.

Just don’t ask me why you need all these and what the differences are. The Selenium documentation isn’t that great and it just seems that these are needed to get things working. If you fancy having a play with the combinations of the above to see what the absolute minimum set of libraries are, then please do comment below with your findings.

So now we know this, there are a few bits that we need to configure in your pom.xml file which include;

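- Plugin Repositories – None are declared in the generated pom.xml. Maven already falls back to the Maven Central Repository by default, but we’re going to declare it explicitly so it’s clear where plugins are coming from

- Repositories – As above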

- Dependencies – We need to add the 6x project dependencies so that they can be imported directly into your NetBeans environment

So to do all the above, your pom.xml file needs to look like the following;

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.contradodigital</groupId>

<artifactId>HelloSelenium</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>jar</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

</properties>

<pluginRepositories>

<pluginRepository>

<id>central</id>

<name>Central Repository</name>

<url>https://repo.maven.apache.org/maven2</url>

<layout>default</layout>

<snapshots>

<enabled>false</enabled>

</snapshots>

<releases>

<updatePolicy>never</updatePolicy>

</releases>

</pluginRepository>

</pluginRepositories>

<repositories>

<repository>

<id>central</id>

<name>Central Repository</name>

<url>https://repo.maven.apache.org/maven2</url>

<layout>default</layout>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<dependencies>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-java -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-java</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-api -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-api</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-server -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-server</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-chrome-driver -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-chrome-driver</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.seleniumhq.selenium/selenium-remote-driver -->

<dependency>

<groupId>org.seleniumhq.selenium</groupId>

<artifactId>selenium-remote-driver</artifactId>

<version>3.141.59</version>

</dependency>

<!-- https://mvnrepository.com/artifact/junit/junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13.2</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>

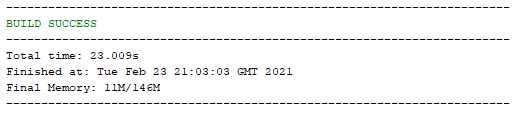

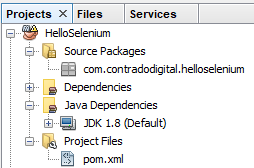

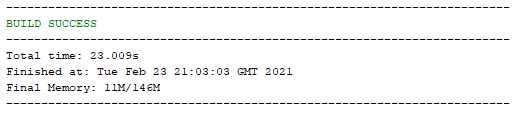

Once you’ve done this, save. Then Right Click on your project name and select ‘Build with Dependencies’ which will pull all of the dependencies into your NetBeans project. You should see a successful build message here;

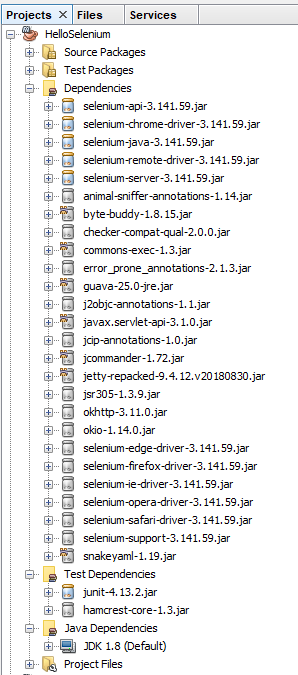

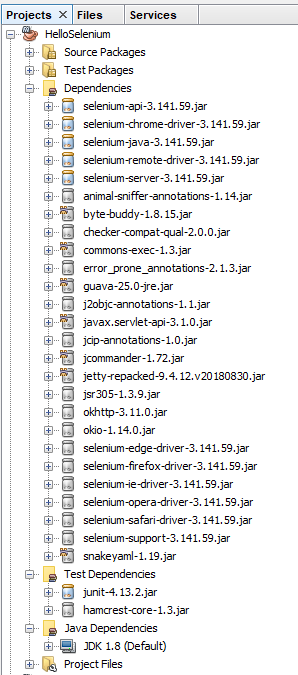

And you should also notice that within your NetBeans Project the total number of Dependencies and Test Dependencies has now grown to significantly more than the 6 JAR files we declared. This is one of the huge benefits of using a package dependency management system such as Maven as it just helps you get things working with ease. Can you imagine having to find all of the different libraries that have now been imported manually and keeping everything in sync? Here is what has now been imported for you automatically;

Note, if this is the first time you are getting Maven set up on your machine, you may find a few issues along the way. One of the common issues relates to an error that NetBeans throws which states;

“Cannot run program “cmd”, Malformed argument has embedded quote”

Thankfully to fix this you simply need to edit the file, C:\Program Files\NetBeans 8.2\etc\netbeans.conf and append some text to the line that contains netbeans_default_options;

-J-Djdk.lang.Process.allowAmbiguousCommands=true

So that the full line now reads;

netbeans_default_options="-J-client -J-Xss2m -J-Xms32m -J-Dapple.laf.useScreenMenuBar=true -J-Dapple.awt.graphics.UseQuartz=true -J-Dsun.java2d.noddraw=true -J-Dsun.java2d.dpiaware=true -J-Dsun.zip.disableMemoryMapping=true -J-Djdk.lang.Process.allowAmbiguousCommands=true"

If you’re interested in why this is required, this release note outlines the issue in more detail.

You will find there will be the odd nuance like this depending on the version of NetBeans / Java / JDK / Maven etc. that you are running. Rarely do things seamlessly line up. So if you encounter any slightly different issues within your setup, then please do leave a comment below once you’ve found a solution to help others in the future.

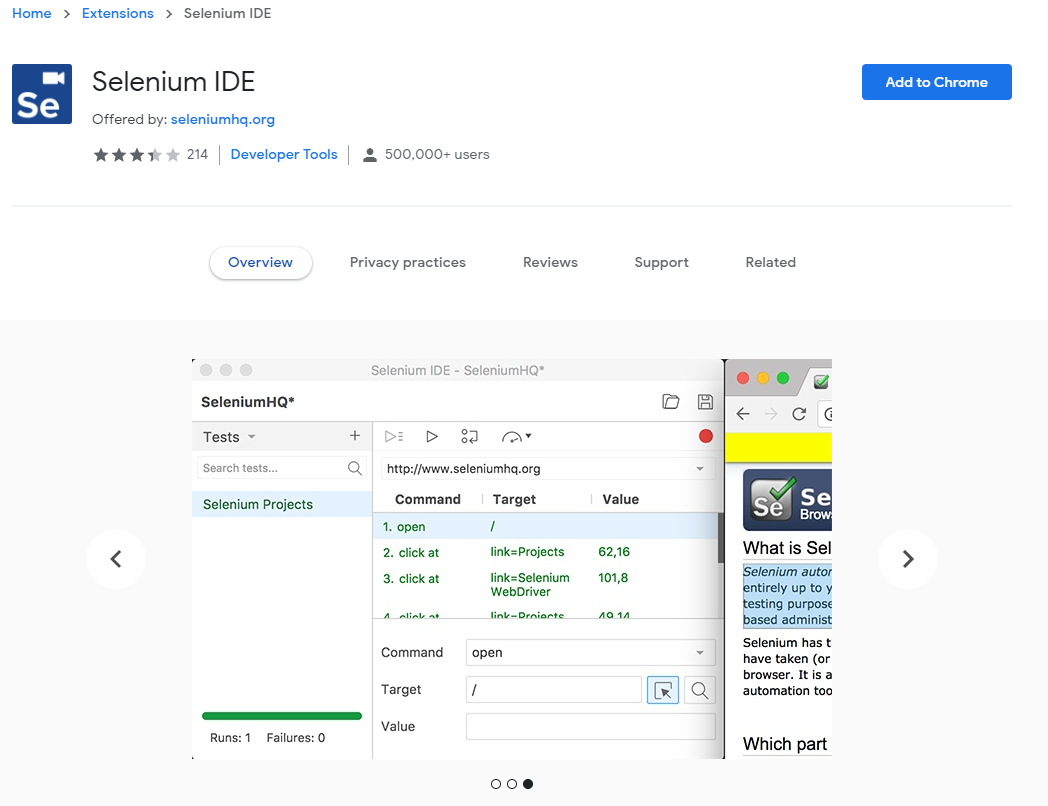

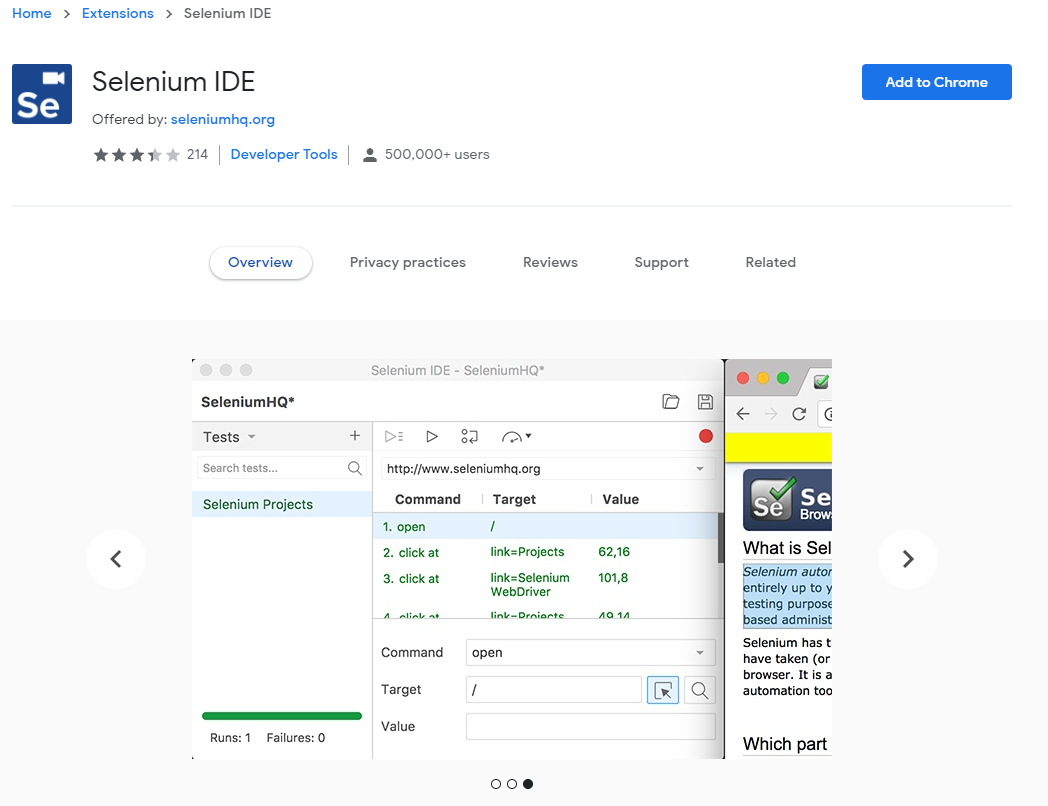

Install Selenium IDE

Ok, so now we’ve got our NetBeans environment up and running. It’s time to make life as easy as possible. I’m assuming you don’t want to be writing everything manually for your web browser test scripts? I mean, if you do, enjoy yourself, but personally I prefer to make life as easy as possible by using the available tools at hand. This is where the Selenium IDE comes into play.

The Selenium IDE is a Google Chrome Extension that you can easily download and install at the click of a button;

Once you’ve done this you will notice that the Extension has added a button at the top right of your Chrome browser that you can click on to open the Selenium IDE. If you’re from a tech heavy software development background, you’re probably expecting an installed desktop application whenever you hear the word IDE mentioned, but in this case it is nothing more than a Chrome Extension.

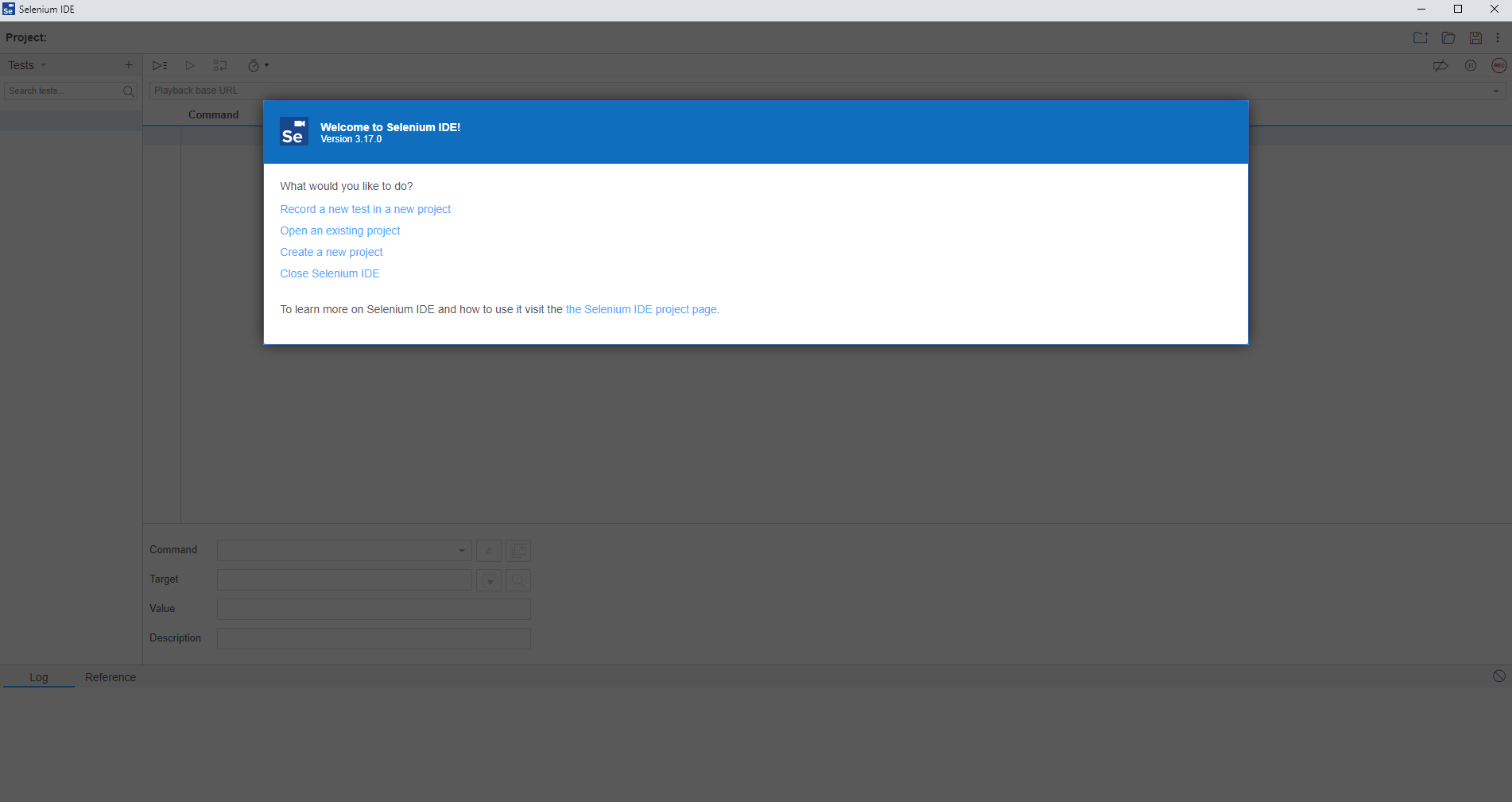

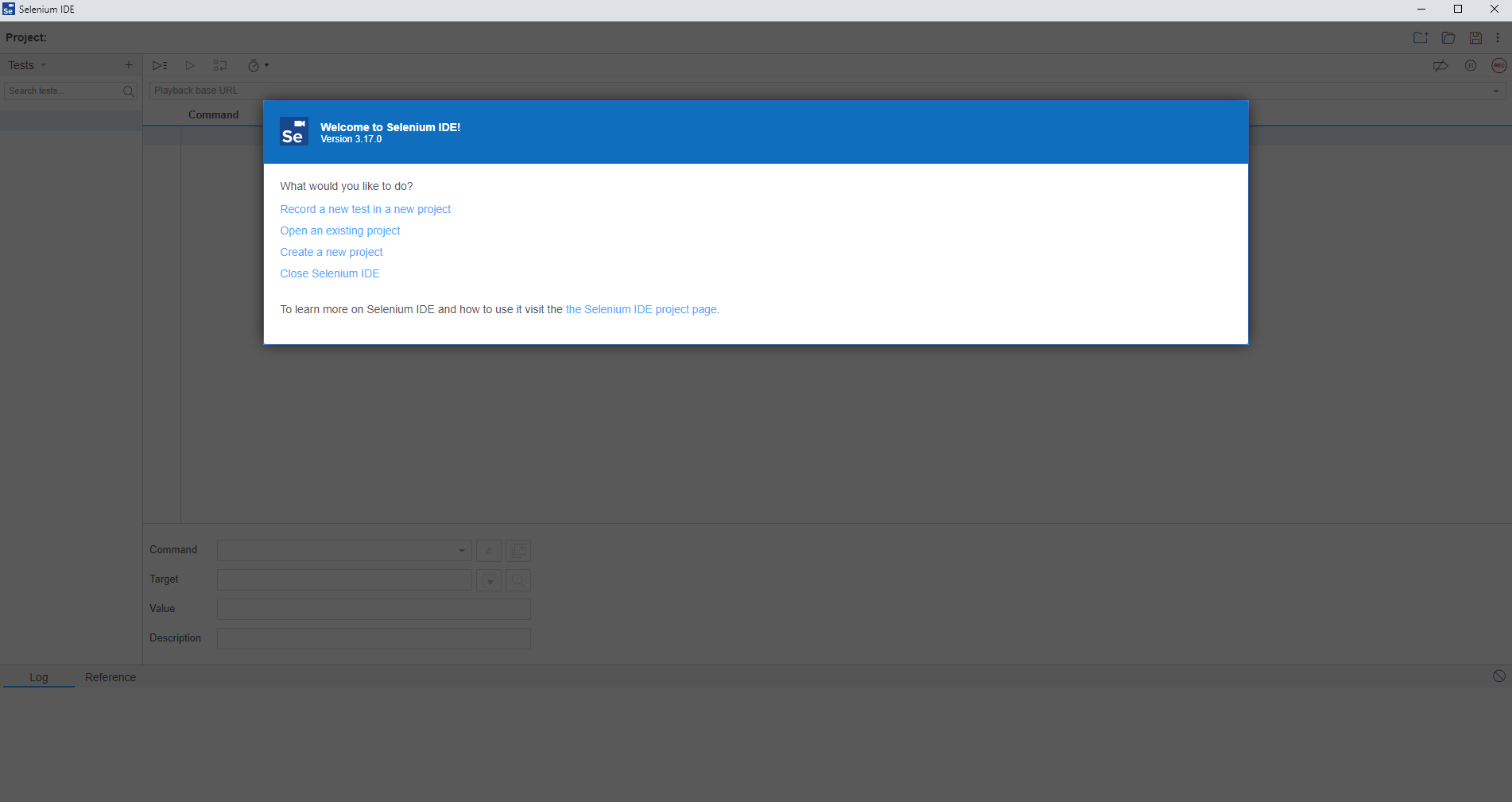

Click the Selenium IDE icon in Chrome to open it up. Once it is open for the first time you will notice a basic welcome screen;

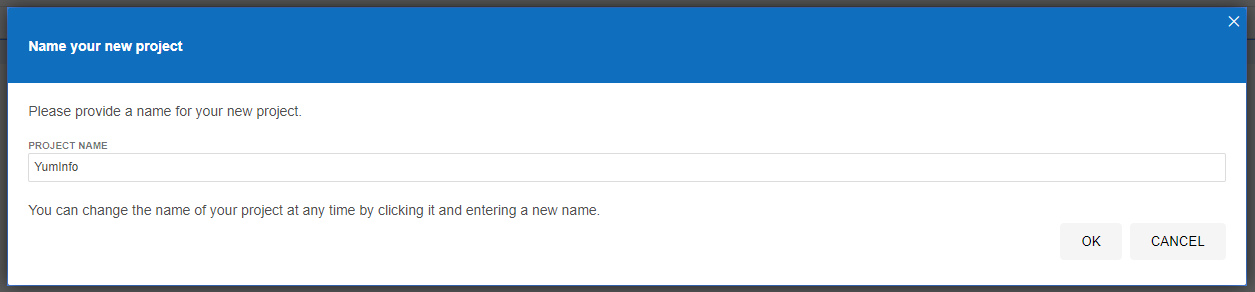

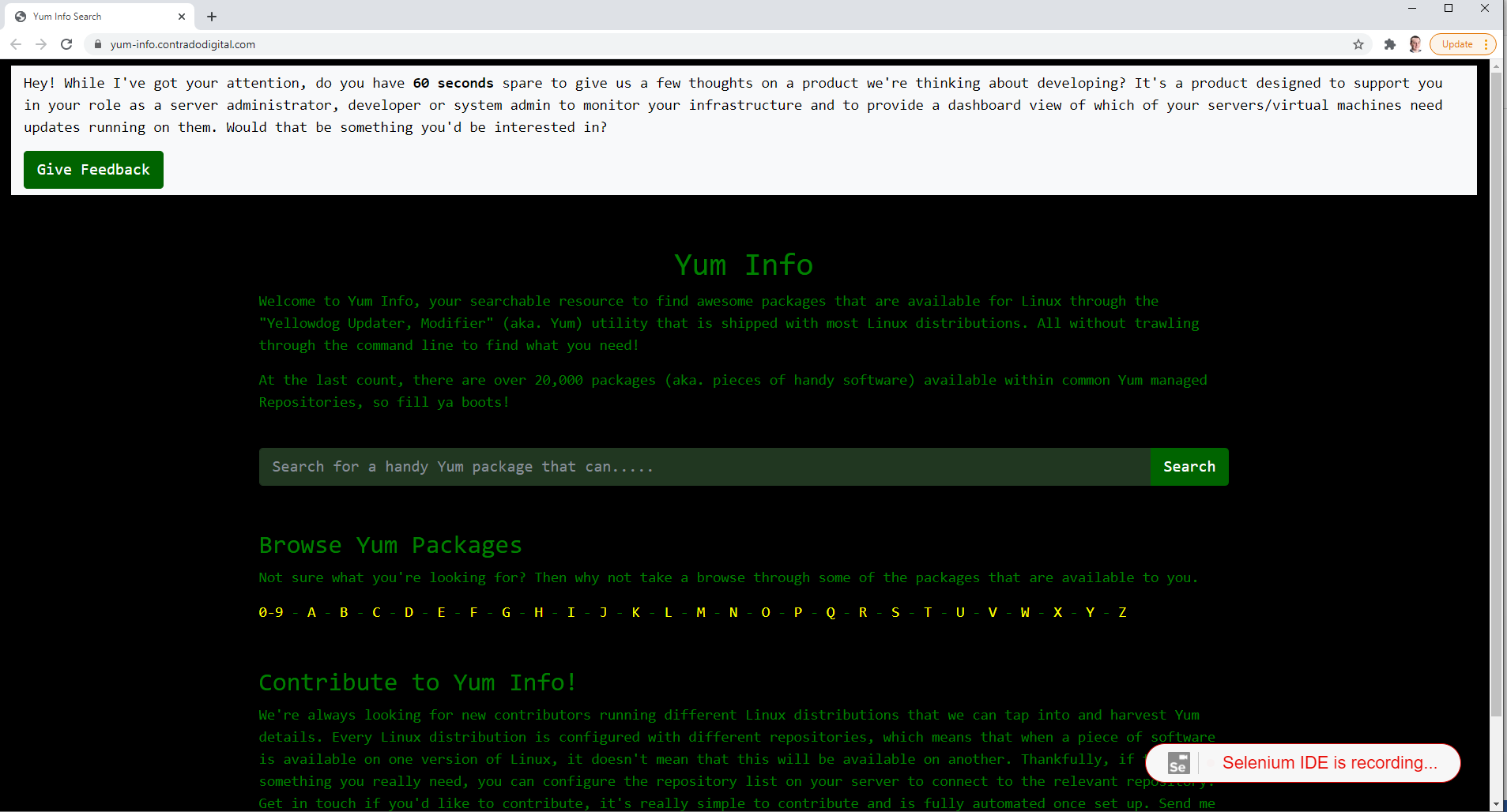

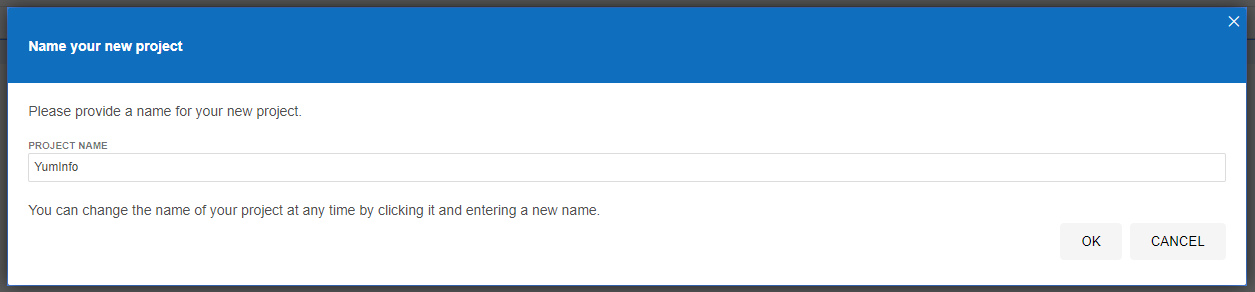

Click on Create a New Project to get started. Give your project a name so it’s clear what you are testing. In this example we’re going to be doing some testing on YumInfo which is an application we created to help software developers and infrastructure engineers easily search through the 20,000 packages that are contained within common Yum Repositories. Exactly like we have the Maven Central Repository for installing Java packages, it’s the same thing, just focused on Linux level software packages instead.

Create Your First Automated Web Browser Test in Selenium IDE

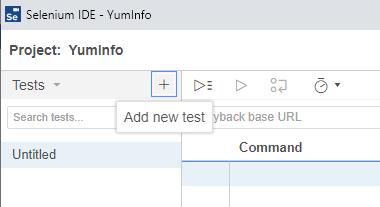

Ok, so now we’ve got Selenium installed and a new project created, let’s get onto creating your first automated browser test so you can get a feel for how all this works. It’s extremely simple to do so.

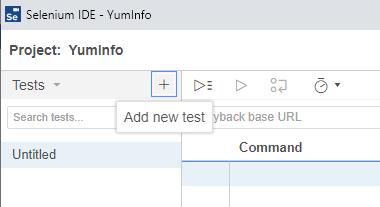

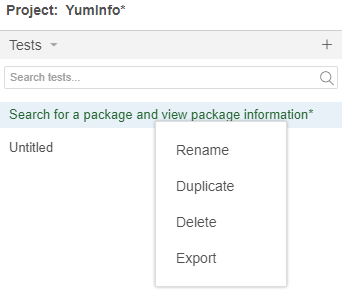

Firstly click on the + button to add a new test;

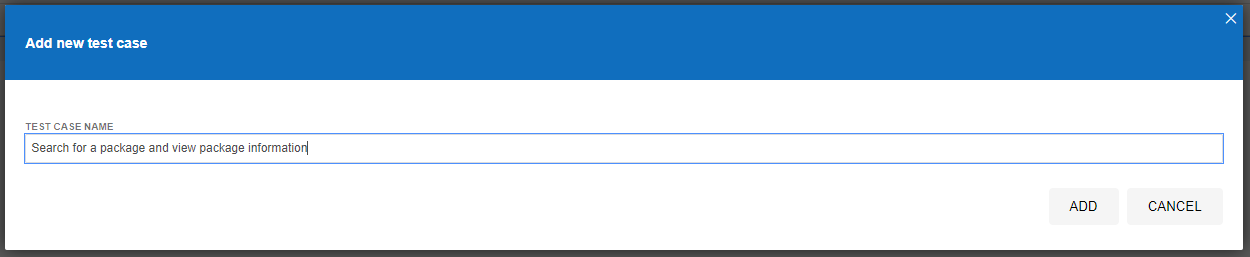

This will then open up the popup which allows you to give your new test a name. In this example, we’re going to test if we can use the search functionality on the YumInfo site to easily find a useful package.

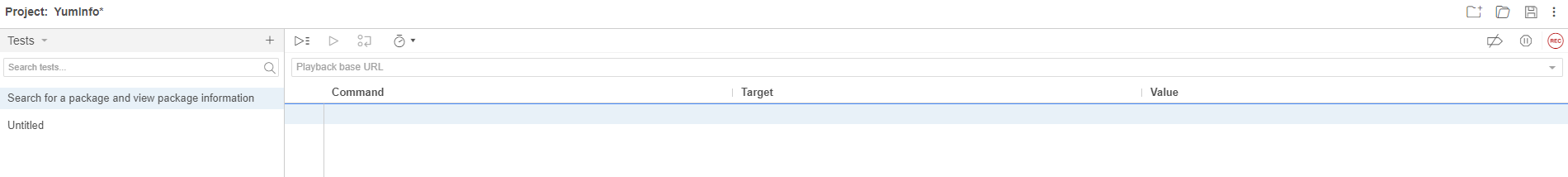

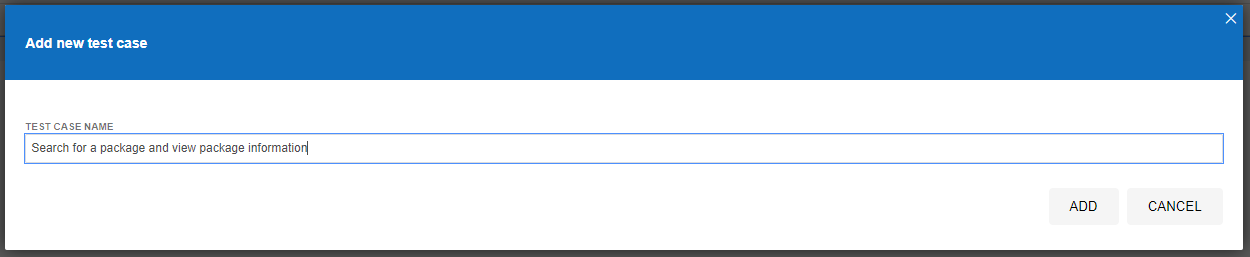

Once you’ve done this, you’ll notice that a new Test Case has been created for you which is in the left section of the screenshot below, but you’ll notice there are no steps that have been created yet which is why the section on the right of the screenshot below is still all blank.

What you will notice in the above screenshot is there are two core sections that we are going to look at next;

- Playback base URL – This is the landing page that you are going to start your tests from. Generally speaking this is so you can test in the same way that your users would use the website.

- Record Button – This is in the top right coloured in red. This allows you to start the process running for recording your automated test scripts within Selenium IDE.

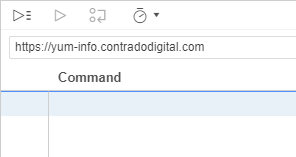

To get started, enter the base URL you want to work with. In our case we’re going to enter https://yum-info.contradodigital.com as that is the website we are doing the automated browser based testing on.

Then once you’ve done that. Click the red Record Button at the top right.

This step will open a brand new Chrome window and it will inform you that recording has started. It’s a very similar concept to recording Macros in Excel if you have ever used those before.

Now all you need to do is to click around your website and use it like a user would. For this specific Test Case we want to search for a package and then view the package information, so we’re going to do just that.

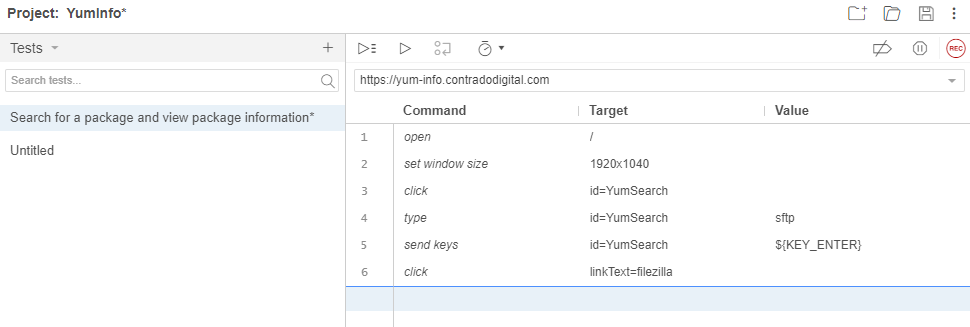

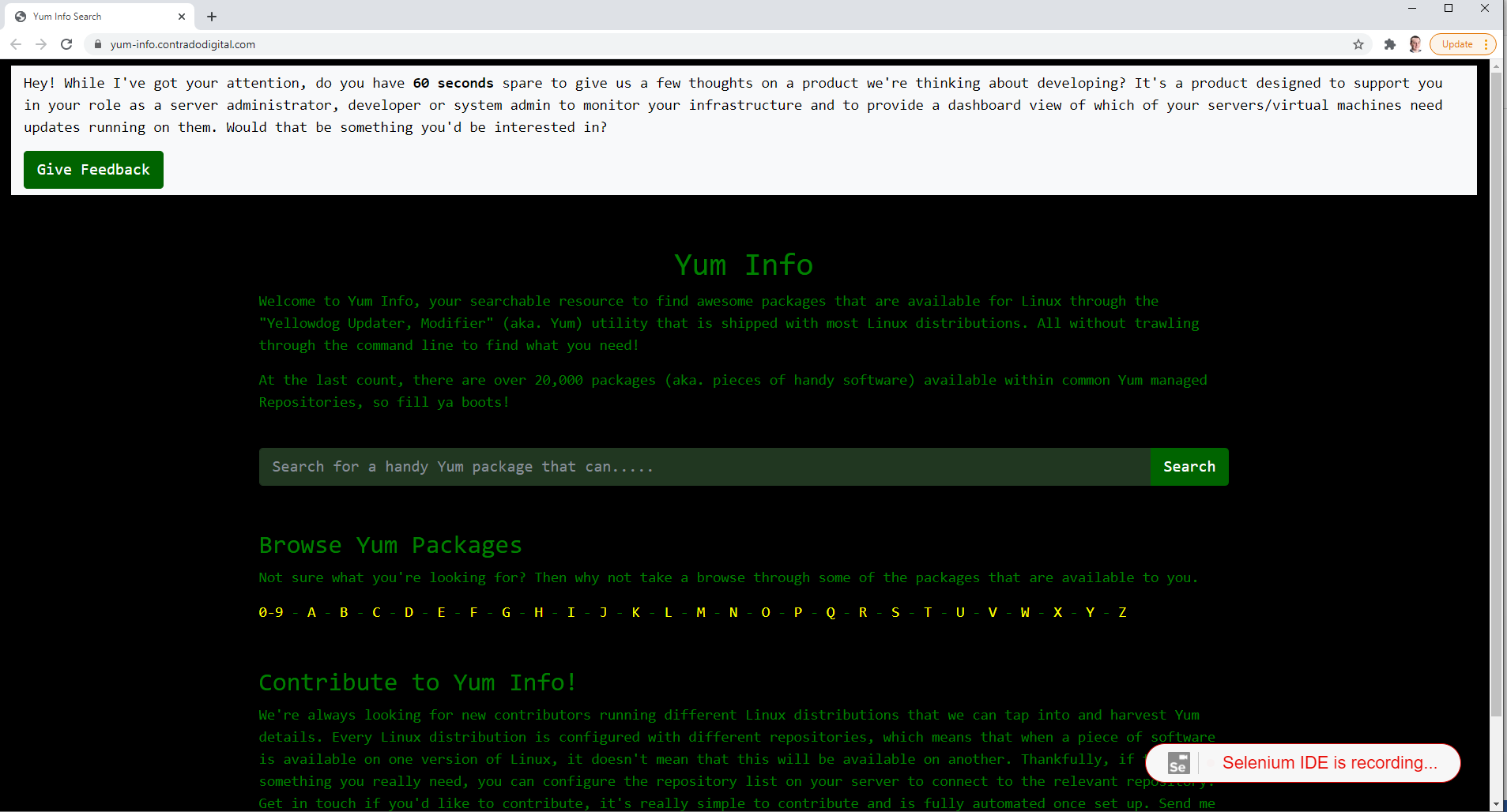

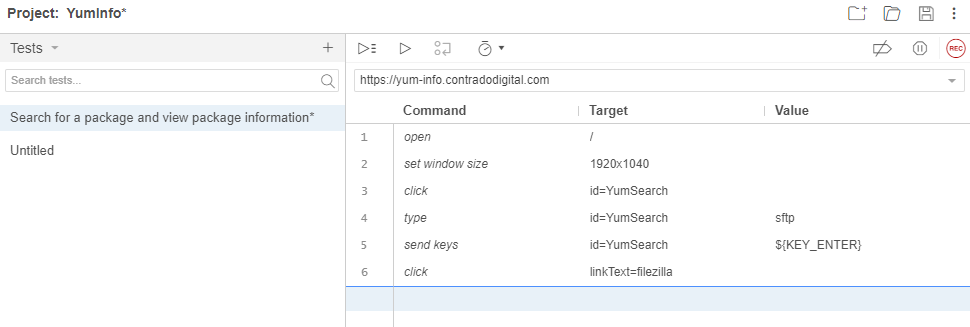

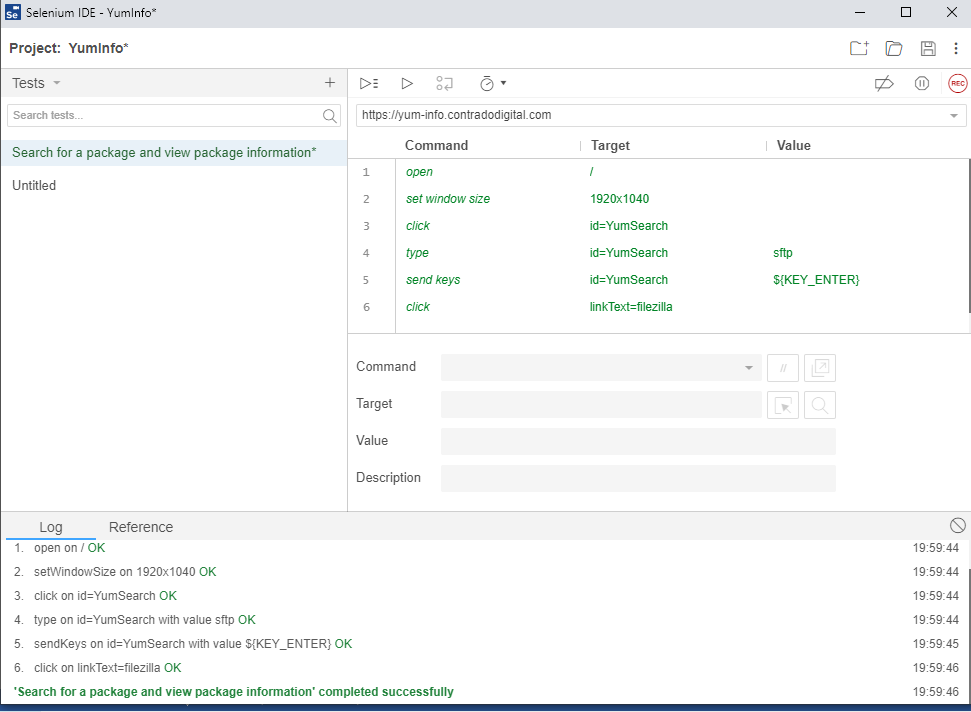

Once you are done clicking around, simply navigate back to your Selenium IDE that is open and click on Stop Recording. Once you have done that you will notice that the specific steps that you have just taken within the web browser have been recorded within Selenium IDE. Awesome!

What the above steps are saying is that I followed these actions;

- Open the Base URL https://yum-info.contradodigital.com

- Set the browser window size to the default of your computer setup

- Click on the HTML Element that has an ID of ‘YumSearch’, which in this case is the search box that allows users to search for packages

- Type into the search box “sftp” without the quotes

- Then press Enter to trigger the search

- And finally, click on the link titled FileZilla which is a relevant package that can handle SFTP based communications

What all this has shown us is that as a user doing these steps, this all works as expected on the website. Hopefully this isn’t an unexpected result that basic functionality on your website is working. But this is just a simple example we are using to get you up and running.

Save this Test Case so you can reference back to it later down the line.

Re-Run Your First Automated Web Browser Test Case

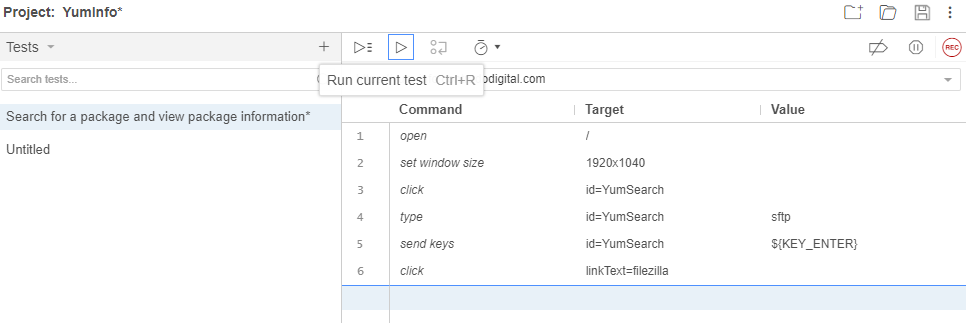

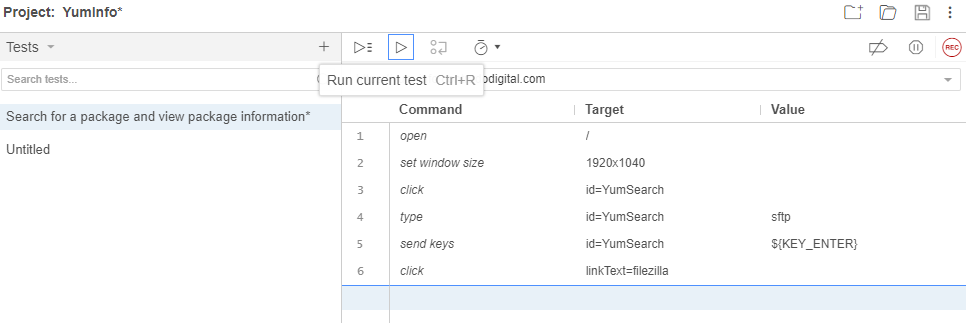

Now that you have recorded your first test, you want to replay it so that you are confident that it has been recorded accurately. For traditionally built websites that use a single Request/Response you’ll find that these tests generally record perfectly first time around. Whereas for websites built as Single Page Applications or with Front End Frameworks that load content dynamically into the page after the initial page load, you’ll find you will likely have a few issues with the default recordings and that the automated recording will need some manual intervention to get it to work properly.
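The usual manual intervention for dynamically loaded content is to wait until the thing you want to interact with actually exists, rather than assuming it is there straight after page load. Selenium has its own WebDriverWait class for this, which appears in the imports of the exported code later in this post. The sketch below is not that class; it is a plain Java illustration of the same polling idea with made-up names, so you can see what is going on under the hood.

```java
import java.util.function.BooleanSupplier;

public class PollingWait {

    /**
     * Re-checks the condition every pollMillis until it is true or the timeout expires.
     * Returns true if the condition was met in time, false otherwise.
     */
    static boolean waitUntil(BooleanSupplier condition, long timeoutMillis, long pollMillis) {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false;
            }
            try {
                Thread.sleep(pollMillis);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();

        // Stand-in for "the element has appeared": becomes true after roughly 200ms.
        boolean appeared = waitUntil(() -> System.currentTimeMillis() - start >= 200, 2000, 50);
        System.out.println("Condition met in time: " + appeared);
    }
}
```

In a real test the condition would be something like “the search results have rendered”, and you would pick a timeout that suits how slow your application can reasonably be.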

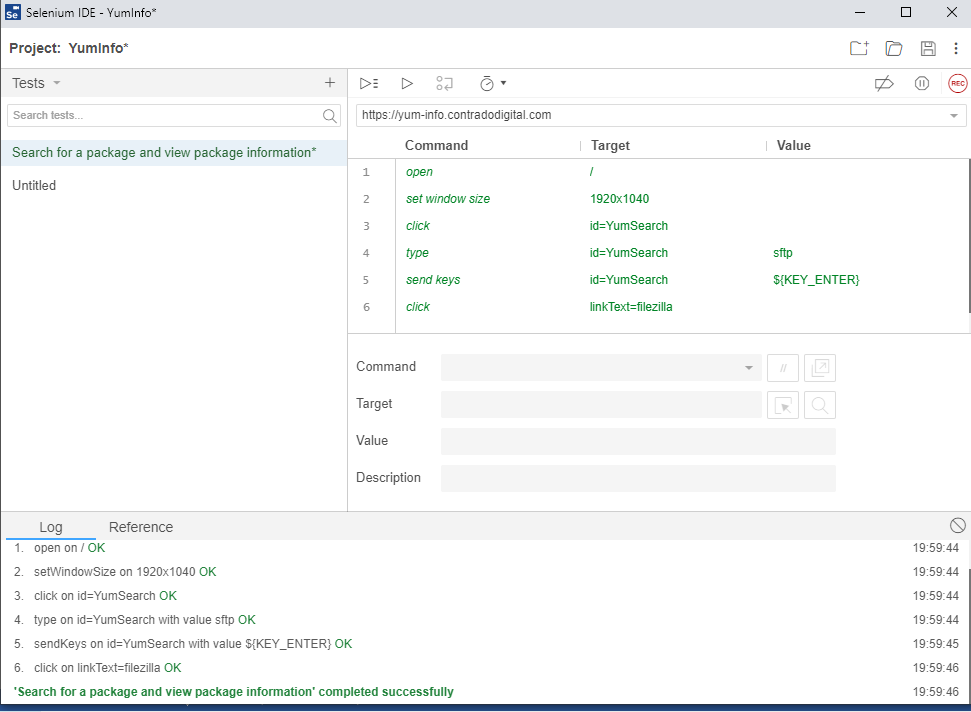

To re-run the test you have just created, simply click on the Play button;

Once you click that button, you will notice that magic starts to happen. Your web browser will open and the exact steps that you just took will be replicated in real time right in front of your eyes. Most importantly, once it is complete, you will see that it has completed successfully.

By doing this you have just proved that the Test Case has been recorded successfully and can run through to the end to confirm this end to end process works correctly. This is important as you build up your Test Cases as you will find many larger websites can have 1000s and even 10,000s of Test Cases created over time that ensure the stability of the platform.

Why Build a Library of Test Cases in Selenium IDE for Automated Web Browser Testing?

Just stepping back a little though, why are we even bothering to do this? Well quite frankly, that is a very good question – and one that you should genuinely be asking for any project that you are working on. Yes, many people say this is best practice to build automated web browser testing for web applications, and there is a very solid argument to this. Then on the other hand, if you are working with solid web application development principles, and you have awesome developers, and you have an extremely slick development process to fix forward, then you may find that Selenium automated web browser testing is just an added burden that adds very little value.

The reality is that for most organisations this isn’t the case, so Selenium comes in extremely handy to mitigate the risk of pushing bad code through to the live environment, and it streamlines regression testing. This means that you can run a significant number of automated tests without ever having to worry about getting users to manually test features and functionality every time you want to do a release.

Download Chrome Web Driver

Now we’ve utilised the power of the Selenium IDE to create our automated web browser test for us, it’s time to take that and move it into a proper software development environment, aka NetBeans. Before we jump into the details we’re going to need to download the Chrome Web Driver. This will allow you to make NetBeans, more specifically the Selenium and JUnit dependencies, interact with your Chrome web browser.

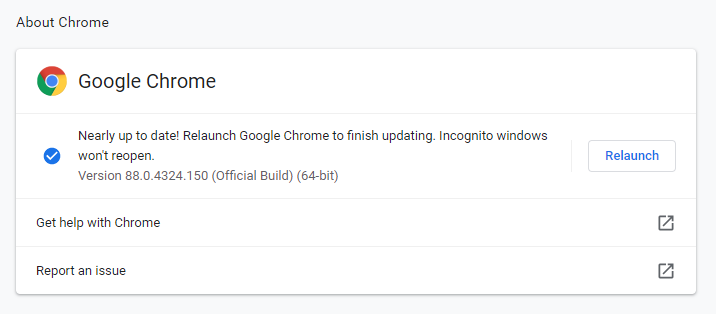

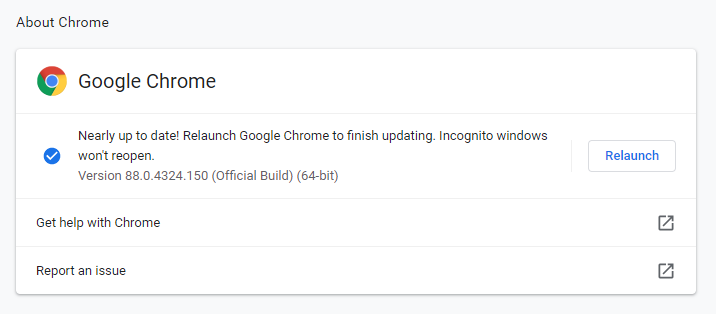

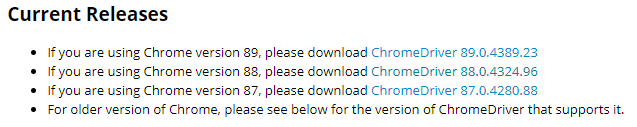

As with anything, versioning is important. So the first thing you need to do is understand what version of Google Chrome you are running. To do this, go into Google Chrome > Settings > About Chrome and you will see your version number there;

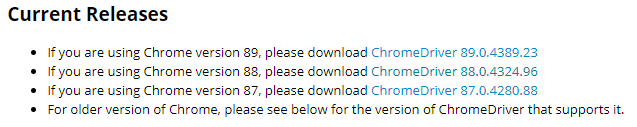

Now you know what version of Google Chrome you are using. Next you need to download the specific Google Chrome Driver that applies to your version of Google Chrome. Head over to the Chromium Chrome Driver Downloads page and find the version that applies to you.
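As a rule of thumb for recent versions, the first number (the major version) of ChromeDriver should match the first number of your Chrome version. Here is a small Java sketch of that comparison. The class name and the version strings in the example are purely illustrative, and you should still double check against the downloads page itself as that is the source of truth.

```java
public class ChromeVersion {

    /** Extracts the major version, i.e. the number before the first dot. */
    static int majorVersion(String fullVersion) {
        String trimmed = fullVersion.trim();
        int dot = trimmed.indexOf('.');
        String major = dot < 0 ? trimmed : trimmed.substring(0, dot);
        return Integer.parseInt(major);
    }

    /** True when the browser and the driver share the same major version. */
    static boolean sameMajorVersion(String chromeVersion, String driverVersion) {
        return majorVersion(chromeVersion) == majorVersion(driverVersion);
    }

    public static void main(String[] args) {
        // Illustrative version strings only; use the ones from your own machine.
        String chrome = "88.0.4324.190";
        String driver = "88.0.4324.96";

        System.out.println("Chrome major version: " + majorVersion(chrome));
        System.out.println("Driver looks compatible: " + sameMajorVersion(chrome, driver));
    }
}
```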

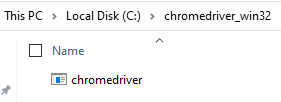

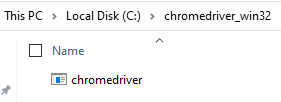

Once you’ve downloaded the ChromeDriver and unzipped it, you will have the ChromeDriver executable on your system, which we’ll reference a little later as we move your Selenium IDE generated Test Case into NetBeans.

You want to put this file into a location that you aren’t going to change next week as your code will break. You need this in a handy reference location that suits how you personally organise your development environments.
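Selenium’s Chrome support looks for the driver location in the Java system property webdriver.chrome.driver. The sketch below is a small, hypothetical helper (the class and method names are mine, not part of Selenium) that checks the file is really where you think it is before setting that property. Failing early with a clear message is a lot nicer than a confusing error half way through a test run after someone has tidied up their Downloads folder.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class ChromeDriverLocation {

    // The system property that Selenium's ChromeDriver reads to find the executable.
    static final String DRIVER_PROPERTY = "webdriver.chrome.driver";

    /** Verifies the driver file exists, then points the system property at it. */
    static void configure(Path driverPath) {
        if (!Files.isRegularFile(driverPath)) {
            throw new IllegalStateException("ChromeDriver not found at " + driverPath.toAbsolutePath());
        }
        System.setProperty(DRIVER_PROPERTY, driverPath.toAbsolutePath().toString());
    }

    public static void main(String[] args) {
        if (args.length != 1) {
            System.out.println("Usage: java ChromeDriverLocation <path-to-chromedriver>");
            return;
        }
        configure(Paths.get(args[0]));
        System.out.println(DRIVER_PROPERTY + "=" + System.getProperty(DRIVER_PROPERTY));
    }
}
```

You would call something like configure(...) before creating the ChromeDriver in your test setup.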

Export Test Case from Selenium IDE to JUnit Format

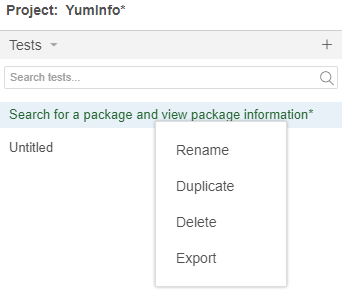

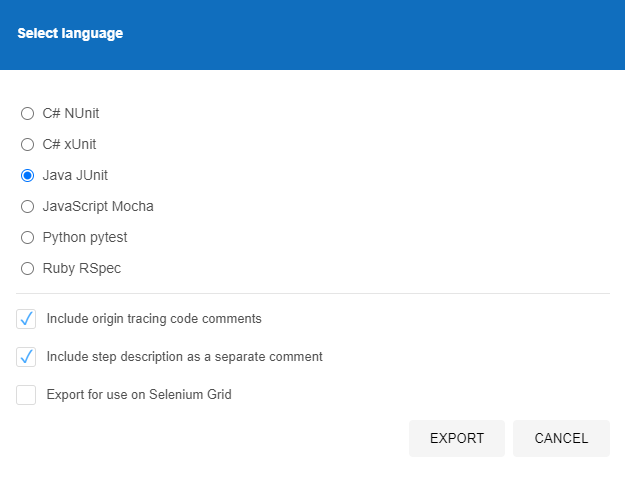

Next we need to export the Test Case that we created in Selenium IDE so that we can then import that into NetBeans. To do this go back to Selenium IDE and right click the Test Case you created then click on Export;

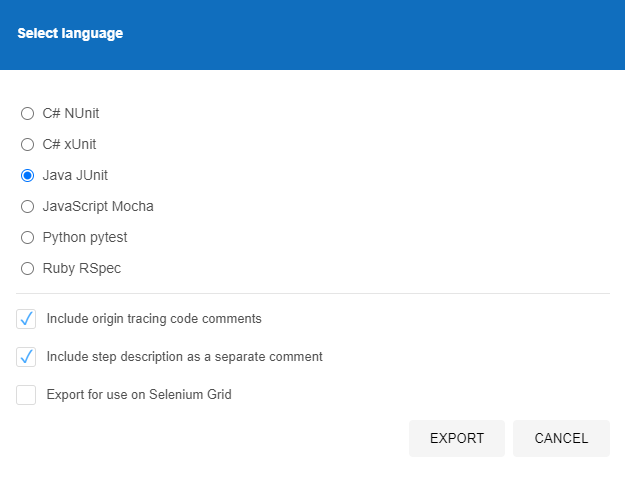

Then select the language we want to export the file to. The beauty of the Selenium IDE is that it is cross language compatible, which means that you can import the Test Case into whichever automated web browser testing setup you prefer. In this case we’re using JUnit in Java, but you could quite easily use either NUnit or xUnit for C#, or Mocha for JavaScript, or pytest for Python, or RSpec for Ruby. The choice is yours.

Once this has been exported, this will save a .java file in our example to your local file system which will look as follows;

```java
// Generated by Selenium IDE
import org.junit.Test;
import org.junit.Before;
import org.junit.After;
import static org.junit.Assert.*;
import static org.hamcrest.CoreMatchers.is;
import static org.hamcrest.core.IsNot.not;
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.firefox.FirefoxDriver;
import org.openqa.selenium.chrome.ChromeDriver;
import org.openqa.selenium.remote.RemoteWebDriver;
import org.openqa.selenium.remote.DesiredCapabilities;
import org.openqa.selenium.Dimension;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.interactions.Actions;
import org.openqa.selenium.support.ui.ExpectedConditions;
import org.openqa.selenium.support.ui.WebDriverWait;
import org.openqa.selenium.JavascriptExecutor;
import org.openqa.selenium.Alert;
import org.openqa.selenium.Keys;
import java.util.*;
import java.net.MalformedURLException;
import java.net.URL;

public class SearchforapackageandviewpackageinformationTest {

    private WebDriver driver;
    private Map<String, Object> vars;
    JavascriptExecutor js;

    @Before
    public void setUp() {
        driver = new ChromeDriver();
        js = (JavascriptExecutor) driver;
        vars = new HashMap<String, Object>();
    }

    @After
    public void tearDown() {
        driver.quit();
    }

    @Test
    public void searchforapackageandviewpackageinformation() {
        // Test name: Search for a package and view package information
        // Step # | name | target | value
        // 1 | open | / |
        driver.get("https://yum-info.contradodigital.com/");
        // 2 | setWindowSize | 1920x1040 |
        driver.manage().window().setSize(new Dimension(1920, 1040));
        // 3 | click | id=YumSearch |
        driver.findElement(By.id("YumSearch")).click();
        // 4 | type | id=YumSearch | sftp
        driver.findElement(By.id("YumSearch")).sendKeys("sftp");
        // 5 | sendKeys | id=YumSearch | ${KEY_ENTER}
        driver.findElement(By.id("YumSearch")).sendKeys(Keys.ENTER);
        // 6 | click | linkText=filezilla |
        driver.findElement(By.linkText("filezilla")).click();
    }
}
```

The core bits of information that are relevant for this are the following;

```java
@Test
public void searchforapackageandviewpackageinformation() {
    // Test name: Search for a package and view package information
    // Step # | name | target | value
    // 1 | open | / |
    driver.get("https://yum-info.contradodigital.com/");
    // 2 | setWindowSize | 1920x1040 |
    driver.manage().window().setSize(new Dimension(1920, 1040));
    // 3 | click | id=YumSearch |
    driver.findElement(By.id("YumSearch")).click();
    // 4 | type | id=YumSearch | sftp
    driver.findElement(By.id("YumSearch")).sendKeys("sftp");
    // 5 | sendKeys | id=YumSearch | ${KEY_ENTER}
    driver.findElement(By.id("YumSearch")).sendKeys(Keys.ENTER);
    // 6 | click | linkText=filezilla |
    driver.findElement(By.linkText("filezilla")).click();
}
```

Here you can see the 6 steps from the original Selenium IDE Test Case expressed as code. This is extremely handy as it gives you full control over every aspect of Selenium through the library itself, plus everything JUnit offers on top. Selenium by itself will only take you so far; you need to integrate it with a proper testing framework such as JUnit to get the most from the technology. Notice, for example, that the exported test contains no assertions, so it passes as long as none of the steps throws an exception. Exporting this code is the first step towards enhancing your automated web browser testing setup.

Create a New JUnit Test File in NetBeans

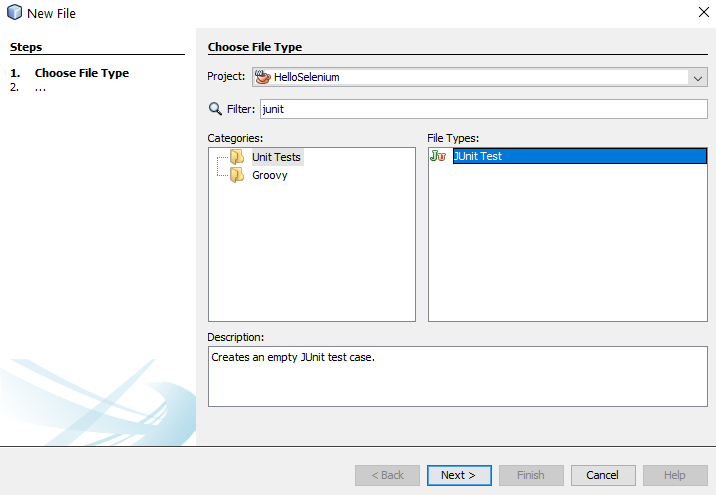

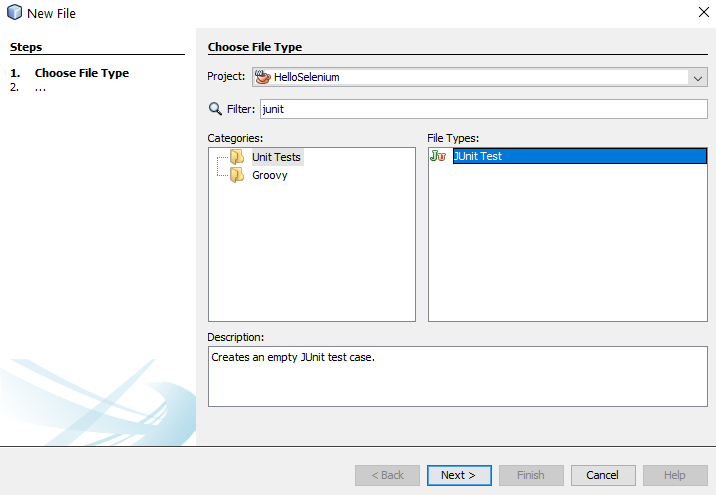

Ok, so back over to NetBeans. We want to import the exported JUnit file from Selenium IDE into NetBeans so that we can manage the lifecycle of this Test Case better and work collaboratively with our colleagues. We’ll cover the team collaboration elements of Selenium a little later. For now, let’s get the JUnit Test added to NetBeans. To do this, right click on a folder in your project in NetBeans and select New File, then search for JUnit, select JUnit Test and click Next.

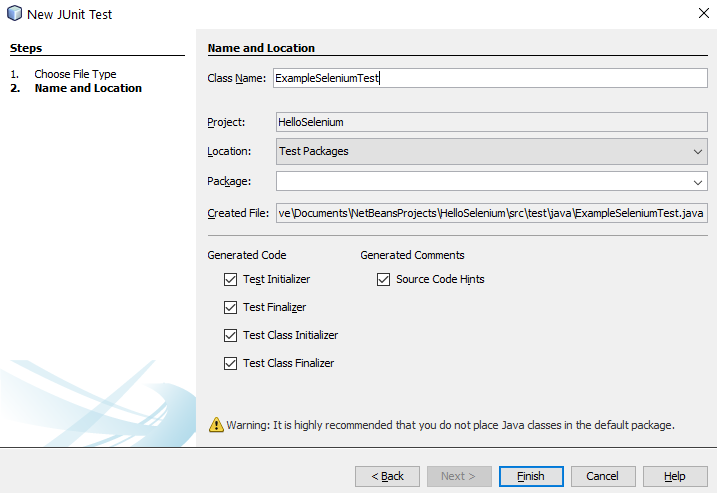

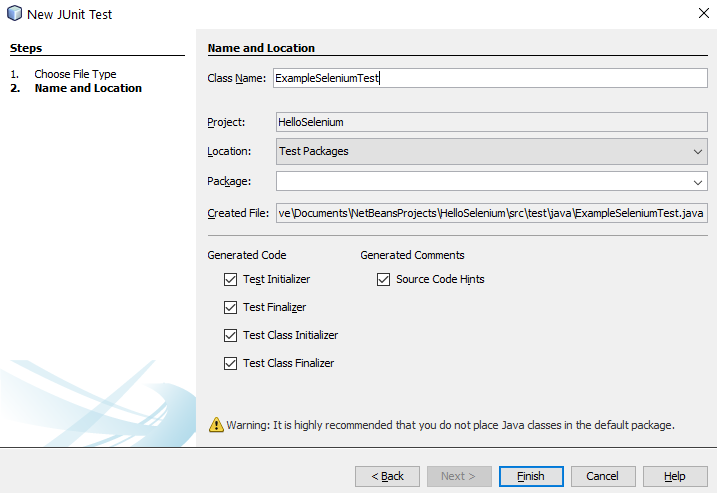

Once you’ve done that, give your new JUnit Test a Class Name and click Finish.

You will notice that this has generated a bunch of code for you automatically, which is quite handy. What you will also notice is that it doesn’t align 100% with the code generated by Selenium IDE. This is because NetBeans has created a generic JUnit Test skeleton based on what it thinks a Test looks like, whereas the code generated by Selenium IDE is a JUnit Test specific to the Test Case you created.

```java
import org.junit.After;
import org.junit.AfterClass;
import org.junit.Before;
import org.junit.BeforeClass;
import org.junit.Test;
import static org.junit.Assert.*;
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

/**
 *
 * @author Michael Cropper
 */
public class ExampleSeleniumTest {

    public ExampleSeleniumTest() {
    }

    @BeforeClass
    public static void setUpClass() {
    }

    @AfterClass
    public static void tearDownClass() {
    }

    @Before
    public void setUp() {
    }

    @After
    public void tearDown() {
    }

    // TODO add test methods here.
    // The methods must be annotated with annotation @Test. For example:
    //
    // @Test
    // public void hello() {}
}
```
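To make sense of the four empty methods in that template, it can help to see the order in which JUnit 4 calls them. The following is a minimal plain-Java sketch that imitates that order without using JUnit at all. The class name LifecycleOrderSketch, the runAll() helper and the two dummy tests are made up for illustration, and in a real run JUnit decides the order of the tests themselves.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java imitation of the order in which JUnit 4 calls the template's hooks.
// Nothing here is JUnit itself; all names are illustrative.
class LifecycleOrderSketch {

    // Every hook appends its name here so the call order can be inspected.
    static final List<String> calls = new ArrayList<>();

    static void setUpClass()    { calls.add("setUpClass"); }    // stands in for @BeforeClass
    static void tearDownClass() { calls.add("tearDownClass"); } // stands in for @AfterClass

    void setUp()    { calls.add("setUp"); }    // stands in for @Before
    void tearDown() { calls.add("tearDown"); } // stands in for @After

    void testOne() { calls.add("testOne"); }   // stands in for a @Test method
    void testTwo() { calls.add("testTwo"); }   // stands in for a second @Test method

    // Mimics what the JUnit 4 runner does for a Test Class containing two Tests.
    static List<String> runAll() {
        calls.clear();
        setUpClass(); // once, before any Test runs
        try {
            // JUnit 4 creates a fresh instance of the Test Class for each Test.
            LifecycleOrderSketch first = new LifecycleOrderSketch();
            try {
                first.setUp();
                first.testOne();
            } finally {
                first.tearDown(); // runs even if the Test fails
            }

            LifecycleOrderSketch second = new LifecycleOrderSketch();
            try {
                second.setUp();
                second.testTwo();
            } finally {
                second.tearDown();
            }
        } finally {
            tearDownClass(); // once, after every Test has finished
        }
        return new ArrayList<>(calls);
    }

    public static void main(String[] args) {
        // Prints: setUpClass -> setUp -> testOne -> tearDown -> setUp -> testTwo -> tearDown -> tearDownClass
        System.out.println(String.join(" -> ", runAll()));
    }
}
```

The point to take away is that setUp() and tearDown() run once per Test, while setUpClass() and tearDownClass() run once per Test Class, which matters when deciding where to open and close the browser.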

Merge Your Exported Selenium IDE Test Case Into Your NetBeans JUnit Test Class

The next step is best done as a careful copy and paste, fitting the automatically generated Selenium IDE Test Case code into the standardised approach you use for your JUnit Test Classes within NetBeans. Don’t just blindly copy and paste the code; while the automatically generated code is handy, you need to manage it to fit your specific needs and use cases.

In this example, here’s what the JUnit Test Class looks like now that I’ve manually merged the code;

```java
import org.junit.After;
import org.junit.AfterClass;
import org.junit.Before;
import org.junit.BeforeClass;
import org.junit.Test;
import static org.junit.Assert.*;
import org.openqa.selenium.By;
import org.openqa.selenium.Dimension;
import org.openqa.selenium.Keys;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

/**
 *
 * @author Michael Cropper
 */
public class ExampleSeleniumTest {

    public ExampleSeleniumTest() {
    }

    @BeforeClass
    public static void setUpClass() {
    }

    @AfterClass
    public static void tearDownClass() {
    }

    private WebDriver driver;

    @Before
    public void setUp() {
        System.setProperty("webdriver.chrome.driver", "C:/chromedriver_win32/chromedriver.exe");
        driver = new ChromeDriver();
    }

    @After
    public void tearDown() {
        driver.quit();
    }

    @Test
    public void searchforapackageandviewpackageinformation() {
        // Test name: Search for a package and view package information
        // Step # | name | target | value
        // 1 | open | / |
        driver.get("https://yum-info.contradodigital.com/");
        // 2 | setWindowSize | 1920x1040 |
        driver.manage().window().setSize(new Dimension(1920, 1040));
        // 3 | click | id=YumSearch |
        driver.findElement(By.id("YumSearch")).click();
        // 4 | type | id=YumSearch | sftp
        driver.findElement(By.id("YumSearch")).sendKeys("sftp");
        // 5 | sendKeys | id=YumSearch | ${KEY_ENTER}
        driver.findElement(By.id("YumSearch")).sendKeys(Keys.ENTER);
        // 6 | click | linkText=filezilla |
        driver.findElement(By.linkText("filezilla")).click();
    }
}
```

A couple of really important parts to be made aware of in the above code snippet are these;

```java
private WebDriver driver;

@Before
public void setUp() {
    System.setProperty("webdriver.chrome.driver", "C:/chromedriver_win32/chromedriver.exe");
    driver = new ChromeDriver();
}

@After
public void tearDown() {
    driver.quit();
}
```

These are the following lines of code / methods;

- private WebDriver driver;

  - Declaring the WebDriver as a field means the setUp() method, your Tests and the tearDown() method all share the same object. Note that because setUp() is annotated with @Before and tearDown() with @After, JUnit opens a new browser before every Test in this Test Class and closes it again afterwards. Over time you may have many Tests contained within a single Test Class, so if you want to re-use one browser for all of them, make the field static and move the open/close code into the @BeforeClass and @AfterClass methods instead.

  - This then aligns with the contents of the setUp() and tearDown() methods.

- System.setProperty("webdriver.chrome.driver", "C:/chromedriver_win32/chromedriver.exe");

  - This tells Selenium where to find the ChromeDriver executable, which is what enables your JUnit code to communicate with your Google Chrome Web Browser and control it.

- public void searchforapackageandviewpackageinformation()

  - Hopefully you recognise the contents of this method, which is the automatically generated code produced by Selenium IDE that we have merged into this JUnit Test Class.
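One thing to watch with the hard-coded ChromeDriver path above is that it only works on a machine that has the driver in exactly that folder. A minimal sketch of one way around this, assuming you are happy to read the location from a JVM system property or an environment variable, is shown here. The class name DriverPathSketch and the CHROMEDRIVER_PATH environment variable are hypothetical names chosen for this example; only the webdriver.chrome.driver property and the fallback path come from the code above.

```java
// Sketch only: works out which ChromeDriver path to use without editing the Test Class.
// DriverPathSketch and CHROMEDRIVER_PATH are hypothetical names used for illustration.
class DriverPathSketch {

    // The system property Selenium reads to locate ChromeDriver (as used in setUp() above).
    static final String PROPERTY_NAME = "webdriver.chrome.driver";

    // Hypothetical environment variable a colleague could set on their own machine.
    static final String ENV_NAME = "CHROMEDRIVER_PATH";

    // The path from this blog post, used when nothing else is configured.
    static final String FALLBACK = "C:/chromedriver_win32/chromedriver.exe";

    // Picks the first non-blank value in order: system property, environment variable, fallback.
    static String resolve(String propertyValue, String envValue, String fallback) {
        if (propertyValue != null && !propertyValue.trim().isEmpty()) {
            return propertyValue.trim();
        }
        if (envValue != null && !envValue.trim().isEmpty()) {
            return envValue.trim();
        }
        return fallback;
    }

    // Reads the real system property and environment variable of the running JVM.
    static String resolveFromEnvironment() {
        return resolve(System.getProperty(PROPERTY_NAME), System.getenv(ENV_NAME), FALLBACK);
    }

    public static void main(String[] args) {
        // Prints whichever path would be used on this machine.
        System.out.println(resolveFromEnvironment());
    }
}
```

In setUp() you could then call System.setProperty("webdriver.chrome.driver", DriverPathSketch.resolveFromEnvironment()); before creating the ChromeDriver, so each colleague can point at their own copy of the driver without editing the Test Class.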

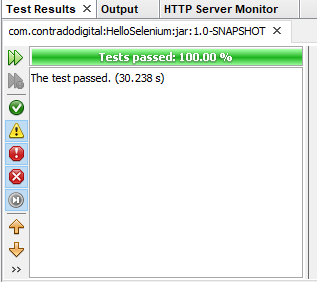

Run Your JUnit Test Class

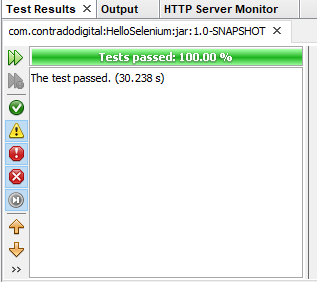

Excellent, now we’re at a point where we can actually run our JUnit Test Class to confirm everything is still working as expected. To do this, simply right click within your JUnit Test Class and select Test File. If everything has merged successfully you should see your Google Chrome Web Browser kick into action and run the test, and the test should pass.

Collaborating with Colleagues

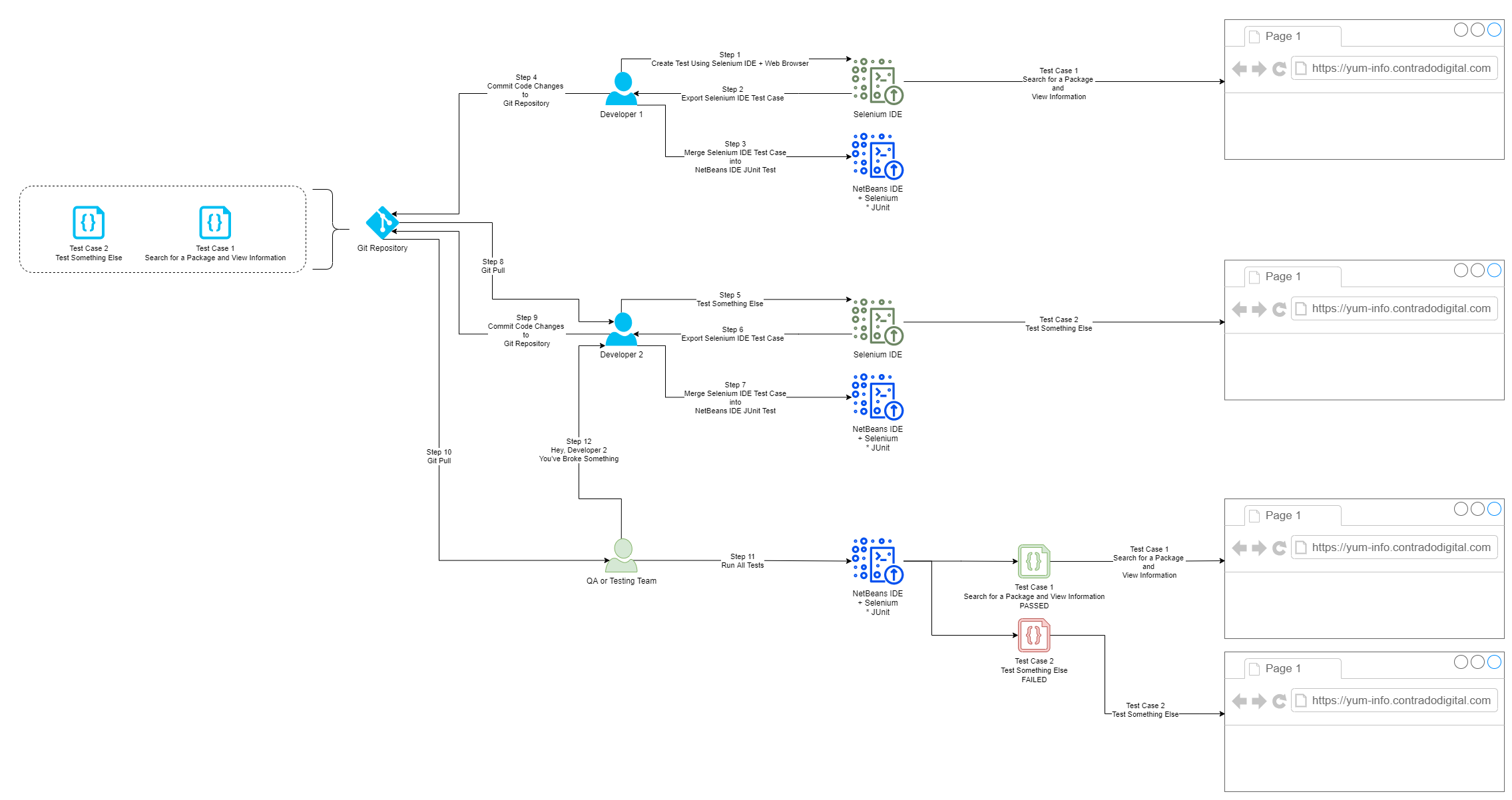

Finally we’re going to briefly touch on how you can collaborate with colleagues using Selenium and JUnit Test Classes. Everything we have done so far is excellent if you are working by yourself and just having a play around. But in any real world environment you are going to be working with a lot of colleagues who span different roles/responsibilities and even departments. This is where the collaboration element really kicks in. For the purpose of this blog post, and to keep things fairly simple, we’re going to highlight how this approach works between Developers and Quality Assurance (QA) or Test people.

One point to note is that this isn’t going to be a tutorial on how to use Git, so if you are unfamiliar with some of the concepts below then you are going to need to do a bit of background reading. To get up to speed with how to use Git and why it is important, then read the Git Book. Once you’ve read that about 10x from a standing start with zero knowledge you’ll probably understand it. We’ll do a write up on Git in a bit more detail at some point as it is a topic that is hugely misunderstood and often implemented incorrectly which can cause a lot of problems.

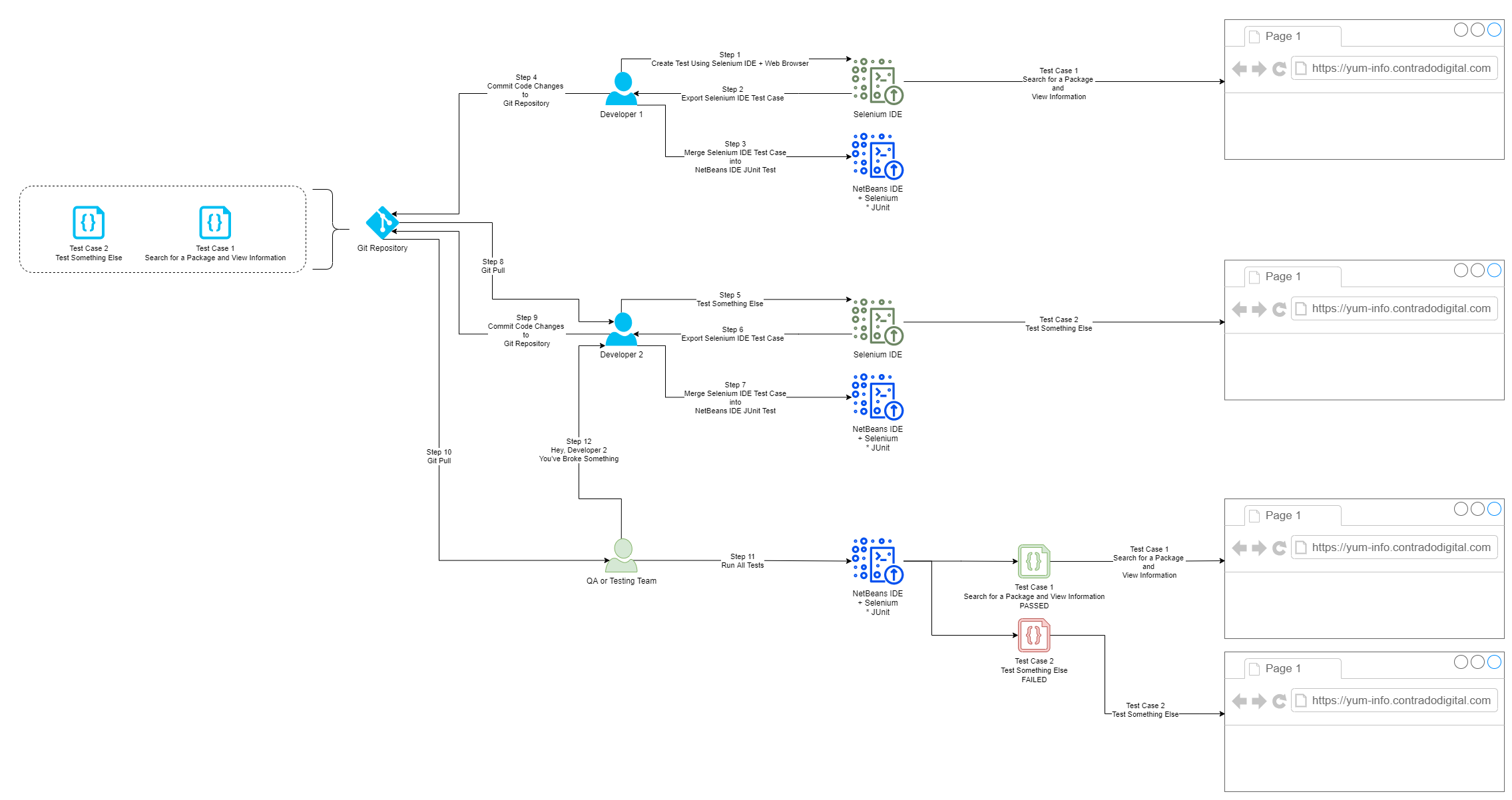

The diagram shows a generic process that can be handy to implement in organisations, illustrating how the different steps fit together along the Software Development Life Cycle, specifically focused around Selenium and team collaboration.

Summary

Hopefully this guide on how to set up Selenium using Java and Apache NetBeans has been helpful in getting you up and running with Selenium in no time at all. This is very much the basics of automated web browser testing using a very specific set of technology, tooling and processes. There is an awful lot more that could be covered on this topic, and that is perhaps one for another day.