Whilst working in the SEO industry, there are times when a certain tool would make your life easier and you just can’t quite find one that does the job you need. This was one such occasion: I was looking for a simple sitemap generator, and all of the tools I could find either limited the number of URLs to a really small number or didn’t allow me to tell the tool what the URLs actually were.

So that is why I built SimpleSitemapGenerator.org over a weekend. I’m sure there are sitemap tools out there which can achieve a similar result that I simply haven’t found, but my patience was wearing thin searching.

Was it difficult? Not really. It was just a case of working through some basic logic to build in exactly what I needed. Below, I explain how I built the tool.

What is it built with?

Simple Sitemap Generator is built on a Java platform running on an Apache Tomcat web server. Why? Because I know Java. The exact same task could be achieved using any programming language you choose. My preferred method of developing websites is using the Integrated Development Environment (IDE) called NetBeans.

Some hardcore programmers prefer not to use these types of tools, as an IDE can sometimes get itself tied in knots that require a deeper understanding to untangle, so if you only ever use these tools you may find it difficult to figure out what is wrong. Personally, I prefer to make my life as simple as possible. Why make things more difficult than they have to be to achieve the task in hand?

How does it work?

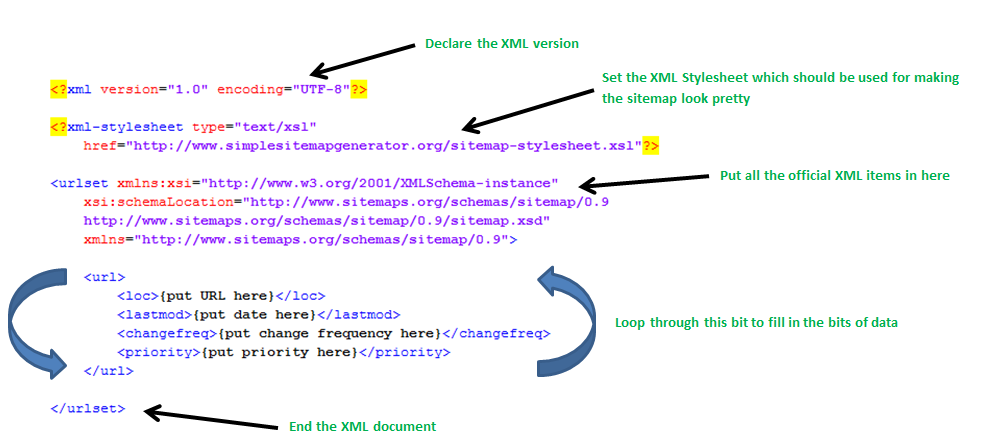

Quite simply really: the list of URLs is parsed by a Java program behind the scenes, which separates the URLs on the newline character. The other items, including the change frequency, last modified date and priority, are also picked up from the main form and then used in the program.
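As a rough illustration of this parsing step, here is a minimal sketch rather than the tool's actual code. The class name `UrlListParser` and the method `parseUrls` are hypothetical, and the sketch assumes the form submits the URL list as a single block of text with one URL per line.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: these names are illustrative and not taken from the real tool.
class UrlListParser {

    // Splits the raw form text into one URL per line,
    // trimming surrounding whitespace and skipping blank lines.
    static List<String> parseUrls(String rawInput) {
        List<String> urls = new ArrayList<>();
        if (rawInput == null) {
            return urls;
        }
        // \R matches any line break (\n, \r\n or \r); browsers commonly submit \r\n.
        for (String line : rawInput.split("\\R")) {
            String url = line.trim();
            if (!url.isEmpty()) {
                urls.add(url);
            }
        }
        return urls;
    }

    public static void main(String[] args) {
        String form = "http://www.example.com/\r\n\r\n  http://www.example.com/about \n";
        // prints [http://www.example.com/, http://www.example.com/about]
        System.out.println(parseUrls(form));
    }
}
```

Splitting on `\R` rather than just `\n` is a design choice in this sketch so that stray carriage returns from the form do not end up inside the URLs.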

The program ultimately just runs through each of the URLs within the list (up to a maximum of 50,000 URLs due to this limitation within XML sitemaps) and wraps the correct tags around each item based on the latest XML sitemap specification.
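The wrapping step could look something like the sketch below. This is an assumption about how such a loop might be written, not the code behind the tool: `SitemapBuilder`, `build` and `escapeXml` are hypothetical names, and the same last modified, change frequency and priority values are applied to every URL, as described above.

```java
import java.util.List;

// Hypothetical sketch: these names are illustrative and not taken from the real tool.
class SitemapBuilder {

    // The sitemap protocol allows at most 50,000 URLs per sitemap file.
    static final int MAX_URLS = 50_000;

    // Entity-escapes the characters the sitemap protocol requires to be escaped.
    static String escapeXml(String value) {
        return value.replace("&", "&amp;")
                    .replace("<", "&lt;")
                    .replace(">", "&gt;")
                    .replace("\"", "&quot;")
                    .replace("'", "&apos;");
    }

    // Wraps each URL in the sitemap tags, using the same form values for every entry.
    static String build(List<String> urls, String lastmod, String changefreq, String priority) {
        StringBuilder xml = new StringBuilder();
        xml.append("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n");
        xml.append("<urlset xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">\n");
        // Anything beyond the 50,000 URL limit is ignored in this sketch.
        int count = Math.min(urls.size(), MAX_URLS);
        for (int i = 0; i < count; i++) {
            xml.append("  <url>\n");
            xml.append("    <loc>").append(escapeXml(urls.get(i))).append("</loc>\n");
            xml.append("    <lastmod>").append(escapeXml(lastmod)).append("</lastmod>\n");
            xml.append("    <changefreq>").append(escapeXml(changefreq)).append("</changefreq>\n");
            xml.append("    <priority>").append(escapeXml(priority)).append("</priority>\n");
            xml.append("  </url>\n");
        }
        xml.append("</urlset>\n");
        return xml.toString();
    }
}
```

A call such as `SitemapBuilder.build(urls, "2013-01-01", "monthly", "0.5")` would return the whole sitemap as a string, ready to be displayed to the user. The example values are placeholders.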

Below is a simple diagram about how the program uses the data which has been entered on the form so you can see how the logic works in the program. I have excluded any of the Java code so it is a little easier to understand for the non-technical people.

Then the sitemap is complete! So it is just about displaying that nicely to the user.

How did you design the logo?

I am not a big fan of designing anything and I am very poor at doing so. My preferred method of developing logos and nice graphics is using Microsoft Word combined with Paint.Net to achieve a few nicer effects if needed.

Why do I use these tools? Because using the more advanced tools is way beyond my skill set and I don’t have the time or desire to try to master them. The basics serve my purposes for the time being. That is not to say that I won’t learn in the future, just not in the near future.

Why didn’t you build in a website scraper?

A lot of other sitemap generator tools have built-in website scrapers and can identify all URLs on your website easily, although these are always limited by the number of URLs they can crawl. There are several reasons why I didn’t build a web scraper into the tool:

The first reason is that a tool which crawls the whole of a website is open to abuse by people wanting to attack certain websites by making the tool send thousands of requests towards them. This is more commonly known as a Denial of Service (DoS) attack. This volume of requests can bring websites to their knees or take them totally offline.

If I built a scraper function into the tool then it would be very simple for someone to enter “www.website.com” into the scraper tool, press ‘go’ and continue doing the same in endless tabs in their browser. The result would be thousands of requests going to www.website.com. There are always ways to guard against this type of abuse, but this requires more time to build into the tool.
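To give an idea of what guarding against this might involve, below is a minimal sketch of one possible approach: refusing to start a new crawl of a host that was crawled very recently. Nothing like this exists in the tool, and `CrawlThrottle` and `tryStart` are hypothetical names. A real defence would need more than this, which is part of why it takes time to build.

```java
import java.util.Locale;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of a per-host cooldown; this is not part of the real tool.
class CrawlThrottle {

    private final long cooldownMillis;
    private final Map<String, Long> lastCrawlByHost = new ConcurrentHashMap<>();

    CrawlThrottle(long cooldownMillis) {
        this.cooldownMillis = cooldownMillis;
    }

    // Returns true if a crawl of this host may start now, or false if one was
    // already started within the cooldown window. The current time is passed
    // in by the caller so the behaviour is easy to test.
    boolean tryStart(String host, long nowMillis) {
        String key = host.toLowerCase(Locale.ROOT);
        boolean[] allowed = {false};
        // compute() runs atomically per key, so many browser tabs hitting
        // the same host at once cannot all slip through.
        lastCrawlByHost.compute(key, (k, last) -> {
            if (last == null || nowMillis - last >= cooldownMillis) {
                allowed[0] = true;
                return nowMillis;
            }
            return last;
        });
        return allowed[0];
    }
}
```

With `new CrawlThrottle(60_000)`, a second request to crawl www.website.com within a minute of the first would be rejected, no matter how many tabs it came from.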

The second reason why I didn’t build a web scraper into the tool is because there are already really good tools out there that can do this for you, namely Xenu Link Sleuth. Why re-invent the wheel?

I primarily built this tool for myself as I will find it useful as I work on a lot of different websites, so it makes my life simpler. I can quickly identify all URLs on a website using Xenu so I didn’t need to go re-designing this as I can simply use a combination of tools to achieve the task which works out quicker.

The third reason is the server overhead. Crawling an awful lot of URLs to scrape a website, then parsing all of that information to use in the sitemap, takes a lot of resources. Since there will likely be very little income from the tool (advertising makes pennies!), this would purely be a loss-making exercise for me, and that doesn’t sound like too much fun.

Why can’t you have different priorities for different URLs?

Because I didn’t build this in, as (in my opinion – I’m sure there will be people with other opinions on this!) there is very little value in varying the priority between values such as 0.1, 0.4 or 1.0. The aim of the tool is to quickly build an XML sitemap from a list of URLs so you can tell search engines about content they may not already be aware of. If you want to quickly tell them about content then why would you set a lower priority for that content?

While it may be interesting to build into the tool a way to prioritise URLs based on their importance, there are no plans to do this in the near future. If you want to begin doing things like this then I suggest you build a custom XML sitemap generator which is more integrated into your content management system / database so that it can be continually upgraded.

How did you choose the font and colour scheme?

As you already know, I created the logo in Microsoft Word. You may recognise the font from another post I did a while ago about the 200 signals in Google’s ranking algorithm (and yes, that image was also created in Word). Why the font? Because I like it. Simple as that.

Why the colour scheme? For the same reason, I like that basic green colour in Word for colouring sections of text in (I usually use this for ticking off items on a to-do list or similar) so it seemed like a nice choice and I think it works quite well.

How about the main navigation colour scheme? Well I actually just pulled this whole navigation from another website I have developed as I wanted to quickly create a navigation menu and there was little value in creating one from scratch. So this was more of a quick and dirty approach which achieves the aim of being a navigation menu.

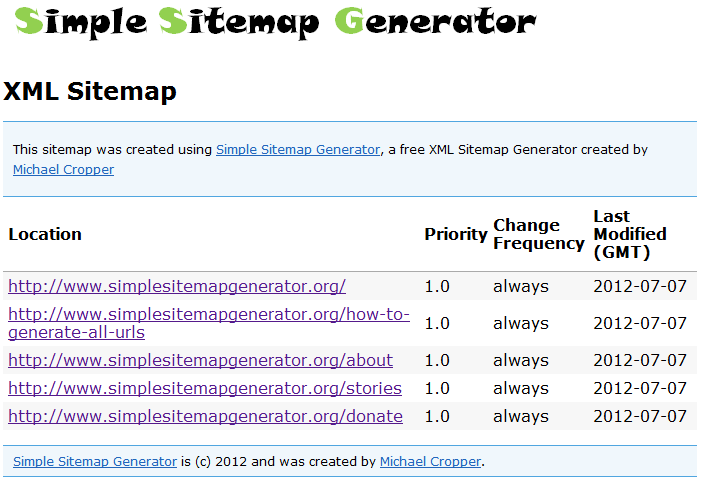

How did you get the XML sitemap to look pretty?

If you view the sitemap for the actual website, http://www.simplesitemapgenerator.org/sitemap.xml then you can see the sitemap is styled all nicely as is seen below;

Isn’t an XML sitemap supposed to look like a normal XML document though? Well, usually yes, but it is possible to style XML sitemaps so they look nice. This is done using an XML stylesheet, which is applied by adding a line of code to the top of the XML sitemap as follows:

<?xml-stylesheet type="text/xsl" href="http://www.simplesitemapgenerator.org/sitemap-stylesheet.xsl"?>

This line of code pulls in the styling information from a separate stylesheet file, which makes the XML document look a little nicer. I will be doing another post about how to create these, as they are reasonably straightforward to implement and can make your XML sitemap a little more user friendly. They also have other SEO benefits, such as being able to easily ping all of the URLs to ensure they are working.
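If the sitemap is being generated in Java, the stylesheet line could be added programmatically along the lines of the sketch below. The class `SitemapStyler` and its methods are hypothetical, and the sketch assumes the stylesheet URL contains no quote characters.

```java
// Hypothetical sketch: these names are illustrative and not taken from the real tool.
class SitemapStyler {

    // Builds the xml-stylesheet processing instruction for the given XSL file.
    static String stylesheetInstruction(String stylesheetUrl) {
        return "<?xml-stylesheet type=\"text/xsl\" href=\"" + stylesheetUrl + "\"?>";
    }

    // Places the instruction directly after the XML declaration if there is one,
    // otherwise at the very top of the document.
    static String addStylesheet(String sitemapXml, String stylesheetUrl) {
        String instruction = stylesheetInstruction(stylesheetUrl);
        int declEnd = sitemapXml.indexOf("?>");
        if (sitemapXml.startsWith("<?xml ") && declEnd >= 0) {
            int insertAt = declEnd + 2;
            return sitemapXml.substring(0, insertAt) + "\n" + instruction
                    + sitemapXml.substring(insertAt);
        }
        return instruction + "\n" + sitemapXml;
    }
}
```

The instruction has to appear before the root `<urlset>` element, which is why the sketch inserts it straight after the XML declaration.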

Summary

So there is a bit of information about how I built SimpleSitemapGenerator.org in a weekend. Quite simple really: it was just about allowing basic data to be entered into a form, then parsing the results and outputting them in a nice format which is in line with the latest XML sitemap specification.

I always encourage people to give something a go and try to solve a problem themselves, as it really isn’t that difficult. The added bonus is that it is fun doing so too!

This tool has certainly made my life easier and will continue to do so. I hope it can be of some use to you as well. If you do find it useful then please share.

Michael Cropper
