As an SEO working in-house or agency-side, there will come a time when you have to assess a website after it has been redesigned to ensure the rankings remain steady. I have put this SEO checklist together to help with this process and to ensure some of the obvious checks aren’t overlooked.

Throughout my career as an SEO I have been through a lot of full website redesigns, and each has gone smoothly without impacting the rankings. Some of them included a full change of URL structure across hundreds of thousands of pages. This basic SEO checklist has helped the rankings remain steady throughout the various website redesigns, so hopefully it will be of some use to you as well.

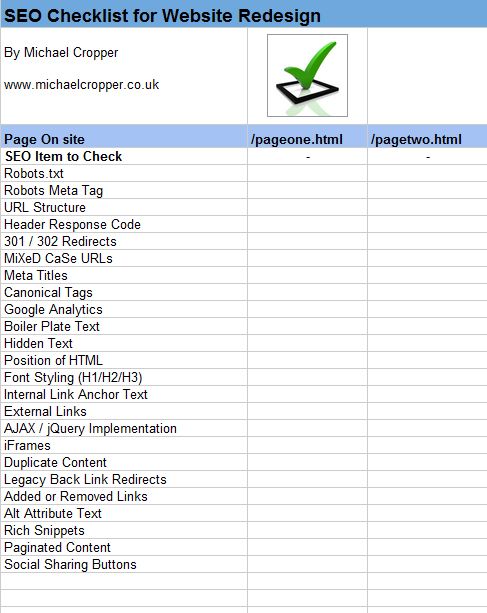

I have created a Google Doc listing all of these checks so that you can go through them one by one and tick off each page when checking through the new website. I find this systematic process extremely valuable for ensuring that none of the key SEO areas of the site have been accidentally damaged during a redesign.

Google Doc: SEO Checklist for Website Redesign (Please save a copy for yourself)

Here is a quick screenshot of the SEO checklist, but I do recommend reading the comments below as they explain what each section on the spreadsheet means.

SEO Checklist

Robots.txt

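Ensure the robots.txt file hasn’t accidentally blocked the entire site from being crawled, for example with a stray ‘Disallow: /’ rule. Strictly speaking robots.txt controls crawling rather than indexing, but the effect on your rankings can be just as damaging. If the site was using a robots.txt file prior to the redesign, then check it is still the same as it was previously (unless it was wrong in the first place!). It is also possible that certain pages or directories have been accidentally blocked, so keep an eye out for this too.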
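
As a rough sketch of how this check could be automated, the snippet below uses Python’s standard `urllib.robotparser` to list which of your key URLs a given robots.txt would block. The function name `blocked_urls`, the sample rules and the sample URLs are all made up for illustration, and Python’s parser may not match Googlebot’s behaviour in every edge case.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text forbids user_agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks everything (illustrative only).
block_all = "User-agent: *\nDisallow: /\n"
key_pages = [
    "http://www.website.com/",
    "http://www.website.com/catalogue/something",
]
print(blocked_urls(block_all, key_pages))
```

Running this against the robots.txt from both the old and the new site, with a list of your most important pages, should make any difference obvious.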

Meta Robots Tag

Following on from the above, it is also important to check all of the main pages throughout the site to ensure an “index, follow” tag hasn’t accidentally changed to “noindex, follow”, as this would drop those pages out of the search results. Two characters can bring your site to its knees: ‘no’.
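
A minimal sketch of this check, assuming you already have the HTML of each page to hand, is shown below. It uses Python’s built-in `html.parser`; the class and function names are my own. It also treats the `none` value as a noindex, since that is shorthand for “noindex, nofollow”.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def is_noindexed(html):
    """True if the page carries a meta robots tag that stops it being indexed."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))
```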

URL Structure

It is very important to check that the URL structure throughout the website hasn’t changed for some reason. When developing code for large scale websites it can be very useful to append certain identifiers to URLs so that they map to particular scripts, which then parse the URL and fetch the information from a database. For example, with www.website.com/pageone-identifier.html, any URL containing ‘identifier.html’ would be passed through to a certain script which would get the content for ‘pageone’.

So keep a close eye out for any changes that could have been made here. Even adding a single character to a URL or making one of the characters uppercase will impact your rankings, since as far as Google is concerned those are brand new URLs which have no relation to the old ones.

If the URLs are required to change as part of the site redesign, and this can happen, then it is important that the old URLs are 301 redirected to their new URLs so that the site still benefits from any back links that have been generated to those pages. I have worked on websites where hundreds of thousands of URLs needed changing as part of the redesign and can confirm that as long as all of the 301 redirects are in place then everything will go smoothly. Just make sure these aren’t accidentally 302 redirects though!

On this note, it is also possible that a chain of redirects could emerge during the redesign, meaning the old URL would 301 to an intermediate URL which would then 301 to the new URL. This is to be avoided and there is generally very little need to do something like this. Use a tool such as Fiddler to see what is happening.
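
If you would rather script this than click through it by hand, the sketch below follows a URL one hop at a time and reports chains and non-301 redirects. To keep it runnable offline, the HTTP call is passed in as a `fetch` function that returns a status code and a Location header; here it is faked with a dictionary of made-up URLs. In practice you would swap in a function that makes a real request with automatic redirect following switched off.

```python
from typing import Callable, Optional
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}

# A fetcher takes a URL and returns (status code, Location header or None).
Fetcher = Callable[[str], tuple[int, Optional[str]]]


def trace_redirects(url: str, fetch: Fetcher, max_hops: int = 10) -> list[tuple[str, int]]:
    """Follow redirects one hop at a time, recording each URL and its status code."""
    hops = []
    for _ in range(max_hops):
        status, location = fetch(url)
        hops.append((url, status))
        if status not in REDIRECT_CODES or not location:
            break
        url = urljoin(url, location)  # the Location header may be relative
    return hops


def redirect_problems(hops: list[tuple[str, int]]) -> list[str]:
    """Describe anything in a traced route that this checklist warns about."""
    problems = []
    redirects = [(url, status) for url, status in hops if status in REDIRECT_CODES]
    if len(redirects) > 1:
        problems.append(f"chain of {len(redirects)} redirects")
    for url, status in redirects:
        if status != 301:
            problems.append(f"{url} returns {status}, not 301")
    final_url, final_status = hops[-1]
    if final_status != 200:
        problems.append(f"{final_url} ends with status {final_status}")
    return problems


# Fake responses standing in for a real site (hypothetical URLs).
fake_site = {
    "http://www.website.com/old.html": (301, "/interim.html"),
    "http://www.website.com/interim.html": (302, "/new.html"),
    "http://www.website.com/new.html": (200, None),
}
hops = trace_redirects("http://www.website.com/old.html", fake_site.__getitem__)
for problem in redirect_problems(hops):
    print(problem)
```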

Header Response Codes

This one is very unlikely to crop up during a website redesign, but it is worth checking to be safe. Since header codes can be set to say whatever you like, it is quite possible that a 404, 500 or 503 header code could be returned whilst the page content is still being displayed.

If something like this did happen I would find it difficult to believe it was introduced accidentally, since someone would have had to actually program the altered header into the code.

As I say, very unlikely to happen, but worth checking since things may look ok on the surface but underneath the site is actually telling Google to go away.

301 and 302 Redirects

This check partially follows on from checking the URL structure, since if something has been altered it is important to be using 301 redirects instead of 302 redirects. 301 redirects pass all of the Google Juice to your new pages, whereas 302 redirects don’t.

It is also possible that redirects could have been randomly introduced into the site during a website redesign. In Microsoft ASP and ASP.NET, the command ‘Response.Redirect()’ uses a 302 redirect by default which can cause a few issues. So if your site is being built in either of those technologies then this may be a thing to be aware of.

Mixed Case URLs

Generally, mIxeD cAse URLs work on database driven websites, which isn’t great for SEO since it creates an awful lot of duplicate content that you then have to hope Google handles correctly.

It can be useful to check all of the URLs on the website to see if they also work when replacing a lower case character with an upper case character. Personally I prefer to 301 all upper case URLs to their lower case versions to avoid any potential duplicate content, although showing a 404 page would also suffice.
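
The lower-casing rule can be sketched in a few lines. The helper below (the name is my own) returns the URL a mixed case request should be 301 redirected to, or `None` if the URL is already lower case. It deliberately leaves the query string alone, on the assumption that parameter values may be case sensitive on your site.

```python
from urllib.parse import urlsplit, urlunsplit


def lowercase_redirect_target(url):
    """Return the lower case URL to 301 to, or None if no redirect is needed."""
    parts = urlsplit(url)
    lowered = parts._replace(netloc=parts.netloc.lower(), path=parts.path.lower())
    target = urlunsplit(lowered)
    return target if target != url else None


print(lowercase_redirect_target("http://www.website.com/Catalogue/Something?ref=ABC"))
print(lowercase_redirect_target("http://www.website.com/catalogue/something"))
```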

Unique Meta Titles

It is quite possible during a website redesign that all of those fully optimised meta titles that you worked so hard to create have suddenly disappeared. Luckily you have kept a record of what they were (haven’t you?) so you don’t need to worry too much there. Best to check over all of the pages on the site to make sure some of your top performing pages haven’t accidentally lost their meta titles.
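
If you did keep that record, comparing it against the new site is easy to script. The sketch below assumes you have two dictionaries mapping each URL to its title, one from before the redesign and one from after; how you collect them (a crawl export, for instance) is up to you, and the sample data is invented.

```python
from collections import defaultdict


def title_problems(old_titles, new_titles):
    """Compare recorded titles with the new site's titles, keyed by URL."""
    problems = defaultdict(list)
    for url, old in old_titles.items():
        new = new_titles.get(url, "").strip()
        if not new:
            problems["missing"].append(url)
        elif new != old.strip():
            problems["changed"].append(url)

    # Titles should be unique, so also flag any title shared by several URLs.
    by_title = defaultdict(list)
    for url, title in new_titles.items():
        if title.strip():
            by_title[title.strip()].append(url)
    for urls in by_title.values():
        if len(urls) > 1:
            problems["duplicate"].extend(urls)
    return dict(problems)


# Invented example data.
before = {"/": "Home | Website", "/a": "Page A | Website", "/b": "Page B | Website"}
after = {"/": "Home | Website", "/a": "", "/b": "Home | Website"}
print(title_problems(before, after))
```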

Canonical Tags

This is one of the areas where I have seen the most issues during a website redesign and an area I would pay most attention to when doing your SEO checks. One area where the canonical tag implementation can fail drastically is when a script in the background can be accessed via multiple URLs, for example www.website.com/search?product=something and www.website.com/catalogue/something.

It is extremely important that this doesn’t get messed up since it can mean you are telling Google that the correct page is actually a copy of a page that is on a sloppy URL (such as a search URL) or worse, that it is a copy of a page that doesn’t actually exist.

Also keep an eye on what the correct version of the URL should be. Should it have a trailing slash or not? Whilst Google is pretty good at realising a URL with/without a trailing slash is ultimately the same content, I would still prefer to explicitly tell it so (or even 301 to the correct version).

I have seen it happen where the canonical was set incorrectly to a search URL. The page still displayed the same content, and luckily Google just began showing the search URL in the search results instead of the friendly URL. Whilst this isn’t great, it is good to know that Google handled it without any negative impact. I would still prefer to get things right first time though.

One final thing to test with canonical tags is how the tag behaves when adding a parameter to the end of the URL which could be a page number such as ?page=2, although I will cover pagination in more detail later.
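
Here is a small sketch of a canonical check, again assuming you have the page HTML available. It pulls out every `rel="canonical"` link and confirms there is exactly one and that it points where you expect; the function names are mine. Feeding it the HTML returned for the friendly URL, the search URL and a `?page=2` variation is a quick way to see what each one declares.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_urls(html):
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonicals


def canonical_ok(html, expected):
    """True only if the page declares exactly one canonical and it is the expected URL."""
    return canonical_urls(html) == [expected]


page = '<head><link rel="canonical" href="http://www.website.com/search?product=something"></head>'
print(canonical_ok(page, "http://www.website.com/catalogue/something"))
```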

Google Analytics Implementation

Quite a simple check is this one, but if Google Analytics has been missed off during the website redesign then you aren’t going to be aware of other mistakes that may have happened either.

Boiler Plate Text

Another quick check, but it is important that large chunks of boiler plate text haven’t been randomly added throughout the website. I haven’t come across any times where this has happened but worth keeping an eye out for.

Hidden Text

It is a common technique to use ‘display:none’ and then expand the section of text when someone clicks on a ‘view more’ link or similar. Try to limit the use of this where possible. Google is reasonably good at identifying when the technique is used for spam and when it is used for genuine purposes, but personally I would aim to have things visible on the page from both an SEO point of view and a user experience point of view.

In addition hidden text can be found during a website redesign if there has been Multivariate Testing installed at the time. Since some MVT packages actually hide sections of the page and display them only to certain users then this is something to watch out for if using MVT.

Order of HTML

This is a bit of an old skool obvious thing to watch out for, but make sure that all of your important content is as high up in the HTML as possible. Don’t go filling the top of your HTML with crap, instead get all of your main content up there. It is possible to do clever CSS trickery by floating columns in a table left or right so that within the HTML the important items are at the top of the page but for the user the item is visually in a different area.

Font Styling

Since heading tags are designed for headings (no surprise there!), you will find that it is quite common for developers to use them a lot, which is great for the user. SEO wise though we want to be making sure that odd titles aren’t being wrapped in heading tags, such as “Look Here” in an H1. Instead we need to be making sure that areas which merely need to look like an H1 are styled using CSS and not heading tags.

Internal Link Anchor Text

Quick run through the main pages on the site to ensure the internal anchor text hasn’t been drastically altered. We wouldn’t want to be telling Google that every page on the site is about ‘click here’.

External Links

Quick check for external links, to make sure the ‘nofollow’ attribute is on any links that you don’t want to pass any Google Juice.
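
A rough way to list the external links on a page that are missing ‘nofollow’ is sketched below. It treats any link whose host differs from `site_host` as external, which means subdomains count as external too; adjust that assumption to suit your site. The names and sample HTML are illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class FollowedExternalLinks(HTMLParser):
    """Collects external links that do not carry rel="nofollow"."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host.lower()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href") or ""
        host = (urlsplit(href).hostname or "").lower()  # empty for relative links
        rel = (attrs.get("rel") or "").lower().split()
        if host and host != self.site_host and "nofollow" not in rel:
            self.links.append(href)


def followed_external_links(html, site_host):
    parser = FollowedExternalLinks(site_host)
    parser.feed(html)
    return parser.links


sample = (
    '<a href="/about">About</a>'
    '<a href="http://partner.example/">Partner</a>'
    '<a rel="nofollow" href="http://ads.example/">Ad</a>'
)
print(followed_external_links(sample, "www.website.com"))
```

You can then decide, link by link, whether each one is a link you are happy to vouch for.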

AJAX and jQuery Implementations

Google has been indexing pages controlled by JavaScript for a while now, although I always prefer to make sure there is a clickable link in place so that Google can definitely find all the pages on the website. It is possible to mark up links so that a user with JavaScript turned on will still get the cool user experience and at the same time have a clickable link for when a user (or a search engine) doesn’t have JavaScript turned on.

IFrames

I have never come across a website that has found a good reason to be using iFrames so I don’t think this will happen much. But best to double check nothing has been put into iFrames (or normal frames for that matter) since Google finds it more difficult to stitch things together.

Duplicate Content

It is possible that duplicate content has been added as part of a website redesign so that more content is easily accessible for the user. SEO wise though we all know that duplicate content is a bad thing for Google. So always have a quick check for any possible pieces of content that have been duplicated across several pages.
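
One simple way to spot duplicated passages is to compare pages by their overlapping runs of words (often called shingles). The sketch below is only a rough heuristic and certainly not how Google does it; the five word shingle size is an arbitrary choice, as is whatever similarity threshold you decide should trigger a manual look.

```python
import re


def shingles(text, size=5):
    """Return the set of overlapping word runs of the given size."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def similarity(text_a, text_b, size=5):
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    return len(a & b) / len(a | b)


page_one = "the quick brown fox jumps over the lazy dog"
page_two = "completely different words appear in this other sentence today"
print(similarity(page_one, page_one))
print(similarity(page_one, page_two))
```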

Legacy Back Link Redirects

This is one of the areas that is often forgotten about during a website redesign since it is not something that is visible on the front end and often the redirects were put in place a long time ago and have been forgotten about.

Legacy back links can be a great source of getting additional Google Juice instead of funneling it all to a 404 page. So when the website is redesigned then it is important to be able to check that these redirects are still in place.

An example of where this is extremely important is if the whole website’s URL structure changed at some point in the past, as in the example earlier. If those redirects were not carried across then you could potentially be losing all of your Google Juice from links that were built up to your old URLs over the past X years.

Added Or Removed Links

Check to make sure no large chunks of links have either been added or removed from your key pages. It is possible that the new logic in the website cannot put all of the links in the places where they used to go. If this happened then you may find that a lot of your site is no longer crawlable. Whilst Google may still know about those pages, removing the links to them is like telling Google that they are not important any more.
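
Comparing the links on the old and new versions of a key page can be done with a simple set difference. The sketch below assumes you saved a copy of the old page’s HTML before the redesign; the names and sample markup are illustrative.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.add(href)


def link_changes(old_html, new_html):
    """Return the links that disappeared from, or appeared on, a page."""
    old, new = LinkCollector(), LinkCollector()
    old.feed(old_html)
    new.feed(new_html)
    return {
        "removed": sorted(old.hrefs - new.hrefs),
        "added": sorted(new.hrefs - old.hrefs),
    }


old_page = '<a href="/catalogue/a">A</a><a href="/catalogue/b">B</a>'
new_page = '<a href="/catalogue/a">A</a><a href="/offers">Offers</a>'
print(link_changes(old_page, new_page))
```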

Alt Attribute Text

This is a basic one really. Some SEOs say that it is important and some say it isn’t. Personally I would rather put something in and have it ignored by Google than leave something out that might have helped. Check to see if all important images have relevant ALT attribute text, so not something like ‘Picture’.
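
A quick sketch for flagging images with missing or throwaway ALT text follows. The list of generic words is my own guess at what counts as unhelpful, so extend it as you see fit. Note that purely decorative images can legitimately have an empty ALT attribute, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser

# Hypothetical list of ALT values that say nothing about the image.
GENERIC_ALT = {"picture", "image", "photo", "img"}


class AltChecker(HTMLParser):
    """Collects the src of images whose ALT text is missing or generic."""

    def __init__(self):
        super().__init__()
        self.flagged = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        alt = (attrs.get("alt") or "").strip()
        if not alt or alt.lower() in GENERIC_ALT:
            self.flagged.append(attrs.get("src") or "")


def images_needing_alt(html):
    checker = AltChecker()
    checker.feed(html)
    return checker.flagged


sample_html = (
    '<img src="/a.png" alt="Picture">'
    '<img src="/b.png">'
    '<img src="/c.png" alt="Red widget, side view">'
)
print(images_needing_alt(sample_html))
```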

Rich Snippets Markup

If you are using any of the Rich Snippets markup that Google offer then you will already know how long it can take before Google decide to pull their finger out and show them in the search results. So if you have got them in there already then please make sure you check they are correct before launching a new redesign of the page. If you lose them now then it may be several months until they reappear, if at all.

The same goes for the new authorship markup.

Paginated Content

A lot of larger websites have paginated content for product listings so check that all of the paginated pages are crawlable via a static link, check they are all indexable (ie, they aren’t ‘noindexed’) and check that their canonical tag has been implemented correctly. Too often the paginated pages can end up messing up the canonical tag implementation which will ultimately chop off a lot of pages from your site for Google.

In addition, the new “rel=next” and “rel=prev” markup from Google should be there. If it hasn’t been marked up yet, then what are you waiting for? If you have already marked this up then best to make sure it is still there.

Social Sharing Buttons

Ensure any social sharing buttons that were on the old site are still on the new website. Social is extremely important for SEO these days so it is important to make these buttons as prominent as possible.

Summary

I am sure there are a lot more site specific things to check when undergoing a site redesign, but above are some of the key areas I tend to focus on. I hope they are of some use to people going through the process and make sure to check any other items that you have developed previously.

Once you have put your brand new site live, keep a close eye on Google Webmaster Tools to spot errors that may have been missed. Google Webmaster Tools can be a great source of fast information to identify problems. For example, if you suddenly see a massive number of URLs that are ‘restricted by robots.txt’ then the site may have been accidentally blocked.
