by Michael Cropper | Aug 1, 2022 | Developer |

This is one that comes up fairly infrequently, yet it always seems to take far more Googling than it should to get a straight answer. In fact, the answer to this question is an extremely simple one. Well, usually.

If you are getting regular or intermittent errors with your scripts timing out, count the seconds. Most people are used to a default timeout of 30 seconds with something like Apache Httpd. Well, Apache Tomcat likes to play by its own rules here and doesn’t quite follow that convention, which may leave you scratching your head for a while until you get to the bottom of it.

Here’s what the official Tomcat 8.5 documentation says on the topic;

“The number of milliseconds this Connector will wait, after accepting a connection, for the request URI line to be presented. Use a value of -1 to indicate no (i.e. infinite) timeout. The default value is 60000 (i.e. 60 seconds) but note that the standard server.xml that ships with Tomcat sets this to 20000 (i.e. 20 seconds). Unless disableUploadTimeout is set to false, this timeout will also be used when reading the request body (if any).”

Simple, right?

Basically, just check what you’ve got in your server.xml file and adjust accordingly, as it’s not as straightforward as it should be.

But where is that file, you may be wondering? Well, by default you’ll find the file at /{TOMCAT_HOME}/conf/server.xml so check there first.

This home directory is going to be different depending on operating system and how Tomcat was installed, so you may have to hunt around for this if you’ve not edited these settings before. Make a note of this file location as it will come in handy over time.

The line you are looking for is;

<!--60 Seconds-->

<Connector URIEncoding="utf-8" connectionTimeout="60000" port="8084" protocol="HTTP/1.1" redirectPort="8443"/>

Simply change the value of the connectionTimeout attribute to what you need (the value is in milliseconds), restart Apache Tomcat 8 and re-run the script that was timing out. Things should work now.
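If you would rather confirm the value than hunt for it by eye, a short script can list every Connector and its timeout. This is a minimal sketch in Python, assuming a standard server.xml layout. The function name list_connector_timeouts and the sample XML are made up for illustration, and a Connector with no connectionTimeout attribute is reported as None, meaning Tomcat will fall back to its documented default.

```python
import xml.etree.ElementTree as ET


def list_connector_timeouts(server_xml_text):
    """Return (port, connectionTimeout in ms or None) for every <Connector>."""
    root = ET.fromstring(server_xml_text)
    results = []
    for connector in root.iter("Connector"):
        timeout = connector.get("connectionTimeout")
        # None means the attribute is absent and the default applies
        results.append(
            (connector.get("port"), int(timeout) if timeout is not None else None)
        )
    return results


# Hypothetical sample that mirrors the Connector line shown above
SAMPLE = """<Server><Service name="Catalina">
  <Connector URIEncoding="utf-8" connectionTimeout="60000" port="8084"
             protocol="HTTP/1.1" redirectPort="8443"/>
  <Connector port="8080" protocol="HTTP/1.1" redirectPort="8443"/>
</Service></Server>"""

for port, timeout_ms in list_connector_timeouts(SAMPLE):
    print(port, timeout_ms)
```

To run it against a real installation, pass in the contents of the conf/server.xml file from your own Tomcat home directory instead of the sample.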

Happy config!

by Michael Cropper | Jul 25, 2022 | Developer, IT, Networking |

First of all, networking is a challenge in itself, both to understand what you want to achieve and to configure things to make them work. It’s made even harder when basic things that should just work don’t, for one reason or another. This blog post is off the back of one of those niggles that was driving me crazy trying to understand why something wasn’t working when it should have been. It turned out the answer was pretty simple when I finally got to the bottom of it.

Before we jump into the details, let’s make sure we’re all on the same page.

What is Ping?

Ping is a command line utility that is designed to test the reachability of a hostname or IP address. In simple terms, it’s a way of your computer saying “hello” to another computer and waiting to see if the other computer responds and says “hello” back – or just ignores you.

To use the ping command, simply run any of the following commands via your command line on either Windows or Linux. On Linux systems ping is often packaged through the iputils package from Yum etc.

ping google.com

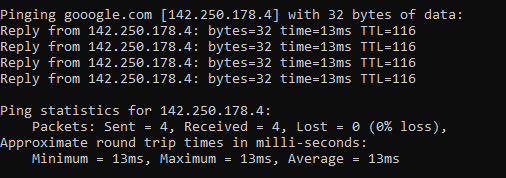

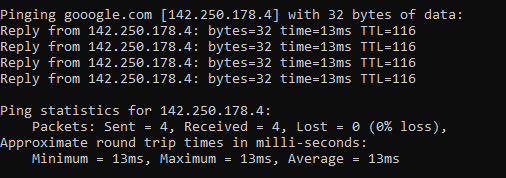

Which will respond as follows if a successful connection is made

ping 8.8.8.8

8.8.8.8 is the primary IP address of Google’s public DNS system if you’re not aware, with 8.8.4.4 being the secondary IP address. When pinged this will respond as follows if a successful connection is made

ping a-website-that-doesnt-exist.com

Here’s what happens when you don’t get a successful response such as when in this example, there is no DNS A Record against that hostname so the hostname cannot be successfully translated into an IP address.

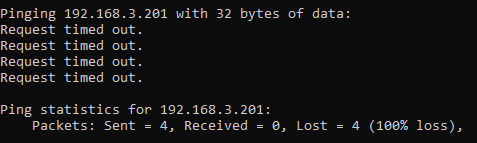

ping 192.168.3.201

Now let’s say that you have a computer on your network that you know exists and you know it is turned on. When you try to ping that IP address, you should receive a successful message as shown above. But in some situations you may get errors such as “request timed out”.

This is where things get a little fun trying to debug these things, which we’ll cover off in this blog post.

Ping Summary

The examples above show what both success and failure messages look like when using the ping command line utility, so you know what to look out for when debugging why ping isn’t working.
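The three outcomes above can be told apart by looking at the text that ping prints. Here is a rough sketch in Python, assuming the English-language messages commonly printed by the Windows and Linux versions of ping. The exact wording varies by platform, version and locale, so treat the patterns and the function name classify_ping_output as assumptions rather than a definitive list.

```python
def classify_ping_output(output):
    """Roughly classify ping's printed text; the patterns are assumptions."""
    text = output.lower()
    # Any echo reply line normally carries a TTL value
    if "ttl=" in text:
        return "reply_received"
    # Hostname could not be translated into an IP address
    if "could not find host" in text or "name or service not known" in text:
        return "dns_failure"
    # Packets were sent but nothing came back
    if "request timed out" in text or "100% packet loss" in text or "100% loss" in text:
        return "timeout"
    return "unknown"


print(classify_ping_output("Reply from 8.8.8.8: bytes=32 time=14ms TTL=117"))
print(classify_ping_output("Request timed out."))
```

A "timeout" result is the interesting one for this blog post, as it means the name resolved but something along the route did not answer.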

What is ICMP aka. Internet Control Message Protocol?

The ping command line utility utilises ICMP, the Internet Control Message Protocol. I’m not going to go into too much detail with this one as we could go down a fairly deep rabbit hole around the Internet Protocol Suite, the IETF and RFCs (Request for Comments) such as RFC 1918, which is the document that sets out which IP address ranges are reserved for private usage, leaving the rest for public use. We did a blog post covering what RFC1918 is a few years back to help people understand this in a simple way.

Anyhow, to keep things simple ICMP is essentially a supporting protocol as part of the Internet Protocol Suite that is designed to capture success/failure type messages when one host is trying to communicate with another host. Just like in the examples we gave above using the ping command line utility. The reality is that there are a whole host of protocols that the average technical user has never even heard of unless you’re a network specialist such as: TCP, UDP, ICMP, ESP, AH, GRE, EoIP, IPV6, IGMP, PIM, OSPF, SCTP, CARP, PFSYNC. Most people have heard of TCP, some know of UDP, but the rest most people have never heard of and will never need to know anything about.

In reality, ICMP is used by things that are primarily under-the-hood technologies that most people haven’t ever heard of and/or don’t really care about. The exception being command line tools like ping and tracert/traceroute commands which are designed for humans to be using to help with debugging.

Why all of this is important is because ‘a’ firewall along the journey from source to destination could be blocking ICMP traffic which would cause your ping command to fail, when you would expect it to be working.

Understanding the Route of the Network Packets

Now comes the fun part. While it often seems relatively straightforward that Computer 1 wants to talk to Computer 2, the reality is that things under the hood are 100x more complex than this and have so many nuances and company specific configurations that it’s never as straightforward as it should be to debug basic things like this.

Hence why it’s essential that you understand how things are configured under the hood. Unfortunately, in my experience in most enterprise organisations, no-one really has a clue how all this actually works and why it works. It just kind of does for the average non-technical user, so organisations accept that because it just works, let’s not go prodding it. Ultimately this is an extremely bad thought process to have. When leadership doesn’t question these things, it causes endless headaches for IT staff doing their daily work, because things that should just work often don’t, and it can take weeks or even months of meetings/emails/conversations to understand what should really have been a 5 minute fix to a problem.

It is even worse in some organisations, where it soon becomes a case of networking/firewall/configuration whack-a-mole: people start fiddling with the settings without understanding things fully, which solves one problem but creates 10x more that get reported days/weeks later when other things start to break. This stuff is hard; it requires extremely knowledgeable individuals to make informed decisions to configure things well.

Anyhow, back to the main point. You need to understand how things work. How do packets get from Computer 1 to Computer 2 when you are pinging the IP address or hostname?

Let’s look at an extremely basic setup and the hops along the way;

- Source Computer sends ping command to Destination Computer

- Source Computer outbound firewall – Does it allow outbound ICMP traffic?

- Network Router/Firewall/Gateway – Does it allow ICMP traffic through from the Source Computer to the Destination Computer?

- Destination Computer inbound firewall – Does it allow inbound ICMP traffic?
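The hops above can be treated as an ordered checklist where you stop at the first one that blocks ICMP. Below is a small Python sketch of that idea. The Hop class and the True/False answers are hypothetical, and you would fill them in by hand after checking each device; nothing here probes a real firewall.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    name: str
    allows_icmp: bool  # filled in manually after checking that hop's rules


def first_blocking_hop(hops):
    """Return the name of the first hop that blocks ICMP, or None if all pass."""
    for hop in hops:
        if not hop.allows_icmp:
            return hop.name
    return None


# Hypothetical findings for the basic setup described above
path = [
    Hop("Source Computer outbound firewall", True),
    Hop("Network Router/Firewall/Gateway", True),
    Hop("Destination Computer inbound firewall", False),
]
print(first_blocking_hop(path))
```

Working through the list in order keeps the debugging methodical, because a block at an earlier hop makes any finding at a later hop meaningless.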

And this is where things get even more challenging, since there can be configurations on Windows such as the Public and Private networks that you connect to. Let’s be honest, the average user configuring this on their own machine hasn’t got a clue what to select and just randomly clicks one of the two options. In reality though, what a user clicks determines how Windows behaves and ultimately, in this example, whether Windows responds to ping requests or completely ignores them.

Windows Firewall Public and Private Networks

To keep this section focused on the topic at hand, debugging ping requests not working as they should, we’re going to simply take a look at the two common Windows network settings – Public Network and Private Network.

Your device will either have one or both of these options;

- WiFi Network Interface Card (aka. No cable plugged into device)

- Ethernet Network Interface Card (aka. Cable plugged into device)

And each of these within your Windows Network and Sharing Centre will either be configured as a Public Network or a Private Network. By default on Windows, if a NIC (Network Interface Card) is configured to be used as a Public Network, then inbound ping requests will be ignored, whereas if the NIC is configured to be used as a Private Network, then inbound ping requests will be responded to.

Rather annoyingly on Windows, once this initial configuration is done (in most cases incorrectly…) then changing a NIC from Public to Private or vice-versa is not as simple as clicking a button – you have to use Windows Powershell. There should be no reason to do this in 2022, but hey, send your complaints (aka. “feature requests”) into Microsoft to solve this one.

For anyone needing to do this, here’s how to change a Windows network from private to public or how to change a Windows network from public to private;

- Open Windows PowerShell as an Administrator

- Run command: Get-NetConnectionProfile

- This will show you the network type, i.e. either Public or Private

- Run command: Set-NetConnectionProfile -Name "{NAME OF YOUR NETWORK}" -NetworkCategory Private

- This will set the category of the network to either Private or Public depending on what you type in the above command

- Then when you open your Network and Sharing Centre, you should see that the network has changed from Public/Private to the opposite of what it was previously.

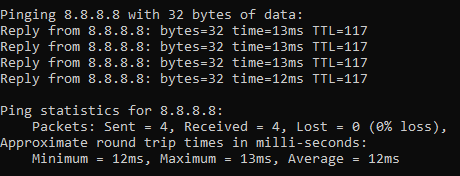

Norton 360 Smart Firewall Blocking ICMP Ping Requests

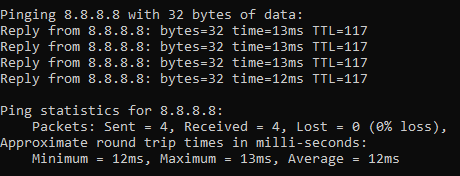

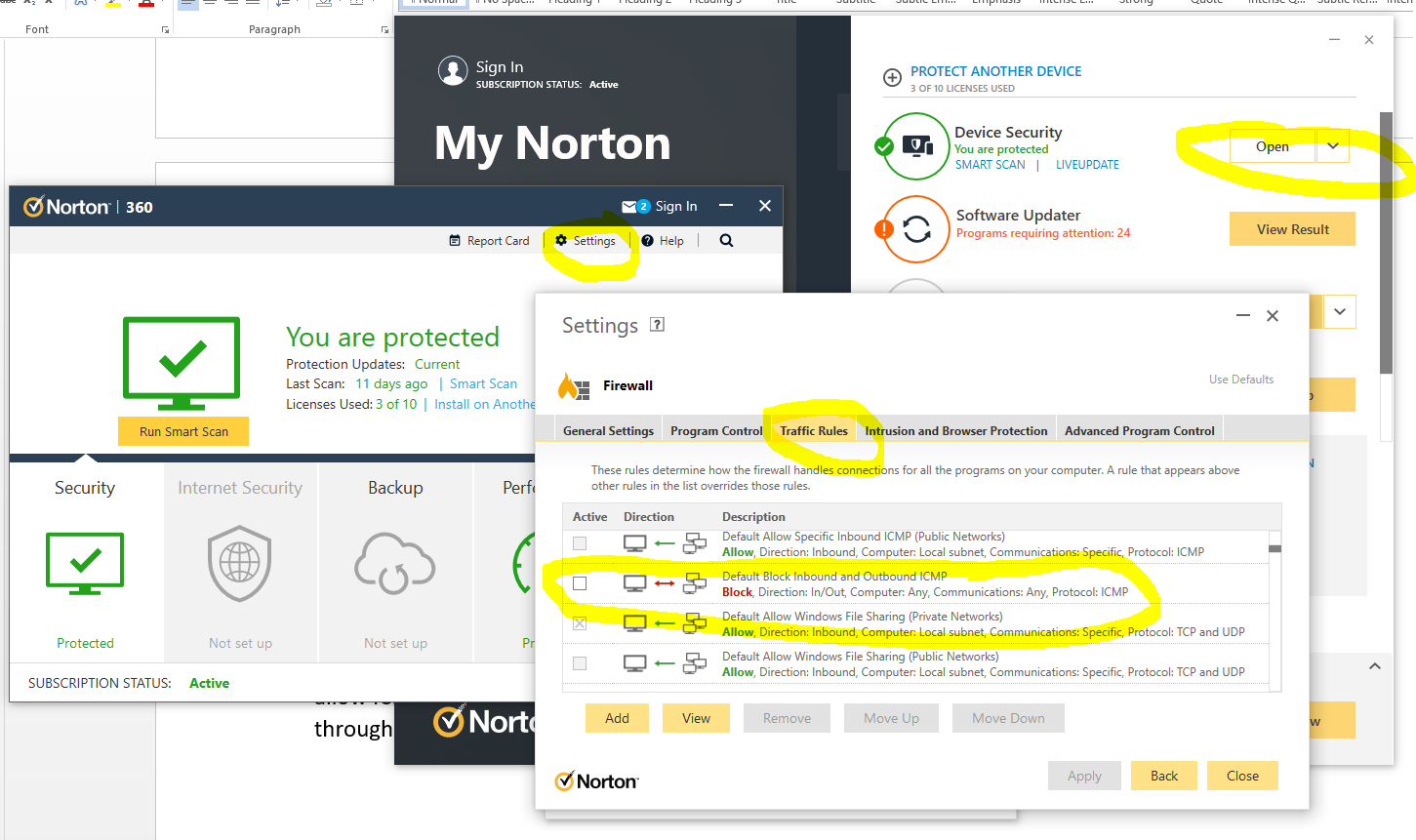

Another one of those annoying “features” is when Windows based firewall software overrides the default Windows firewall, adding another layer of debugging to a problem. In this case, software such as Norton 360 Smart Firewall essentially takes control of the firewall management rather than Windows out of the box. This is where things can get messy.

End user firewall software, regardless of brand, tends to dumb things down to such a level that it often hides the complexity of the details, which are then hard to find in the system. Norton 360 is a prime example of this. By default it blocks inbound ICMP traffic, meaning that if you are trying to ping Computer 2 (with Norton 360 on) from Computer 1, then by default pings will fail. You need to allow ICMP traffic within the Norton 360 Smart Firewall settings to ensure that this traffic can pass through to Windows to respond successfully.

You can see here how deep the settings for this are buried within Norton 360 Smart Firewall, it’s almost impossible to find this without knowing exactly what you are looking for, which 99.9% of users simply haven’t got a clue about.

The irony is that there is nothing “smart” about this setup; it’s simply full of dumb assumptions being made in the guise of security. These kinds of configurations are an absolute pain to deal with as the default configuration is never well documented out of the box, which ultimately results in people digging and digging and digging to get to the root cause of why something that should be working isn’t working. Hey, the joys of IT I guess. It does annoy me though, all these assumptions that are made by software vendors to try and “help”. The best help they could give is to write better documentation and/or build their software in a way that caters for different types of users from basic to power users, with documentation that helps even the most computer illiterate individual understand what they need to configure. Most companies don’t seem to get this basic concept though, unfortunately.

Summary

I hope that this blog post has given you some guidelines and thoughts about how to debug and troubleshoot issues when commands like ping and tracert/traceroute aren’t quite working as expected. What you’ve hopefully picked up is that these things aren’t straightforward to debug.

The best advice I can possibly give is to be methodical at every step along the way. Aim to understand every hop along the journey, and confirm for every hop that traffic is successfully leaving the hop and successfully arriving at the next hop along the way.

It’s not straightforward and requires an exceptional understanding of the underlying infrastructure, which often isn’t easily accessible, particularly within enterprise organisations. It’s not easy debugging these kinds of things with every hand/arm/limb/sense tied behind your back.

by Michael Cropper | Jul 3, 2022 | Developer |

Webhooks are a very interesting topic, but they are often misunderstood by both technical and non-technical people alike. The reason they are misunderstood is often because depending on who you ask or what system you are looking at, each often has their own slight variance on what they actually are and how they are used.

I often hear phrases such as “a Webhook is just an API isn’t it?” Well, no, not quite. And “Why do we need Webhooks when we can just poll a system for changes?” Webhooks are a complex topic to understand well, but fundamentally they are actually really basic things.

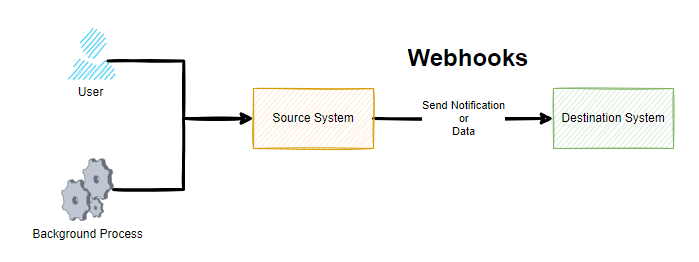

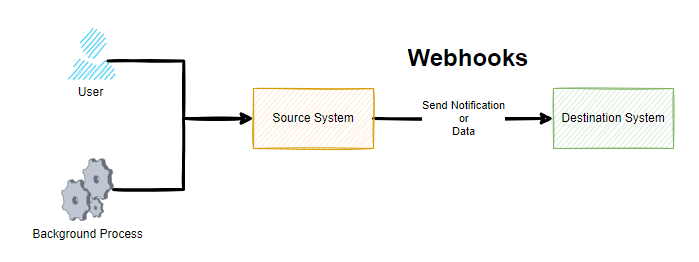

A Webhook is simply the term for a Source System sending a notification or data to a Destination System when something happens in it. Conceptually, there is nothing much more to it than that.

Sounds simple when you put it like this, right? Well, yes, and it is that simple at least at the conceptual level. As always, the devil is in the detail. Webhooks are commonly described as user-defined callbacks.

As you can see in the above diagram, whenever either a User action or a Background Process / System Event happens, the Source System sends a notification or data to a Destination System. But how, you may be thinking? Well, we’ll come onto that in a little while. For now, just keep this basic concept in your mind for what Webhooks are.

When Should I Look at Webhooks?

There are many reasons you may want to look at Webhooks and this type of technology. Often it is when you want something to happen in one system off the back of something happening in another system.

For example, a common use case of Webhook technology is when a customer on an ecommerce website makes a purchase using a payment gateway. When this happens, the payment gateway needs to inform your website whether the payment was successful or failed, as you want to make sure the customer has paid before you go sending the goods out to them. Another common use case is when you have a master system of record for certain types of data and you want to ensure this data is replicated in another system, which is quite common in enterprise organizations to ensure a user doesn’t have to log in to 10x different systems to do their day job.

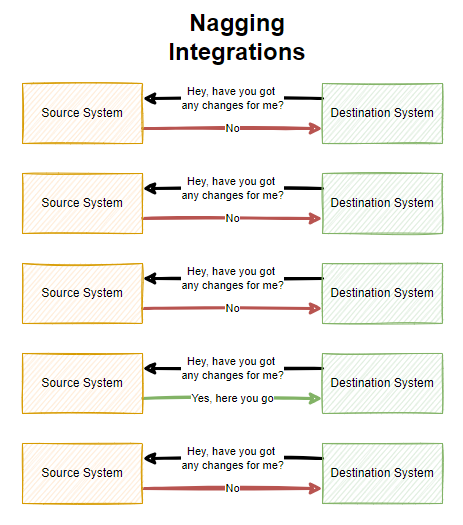

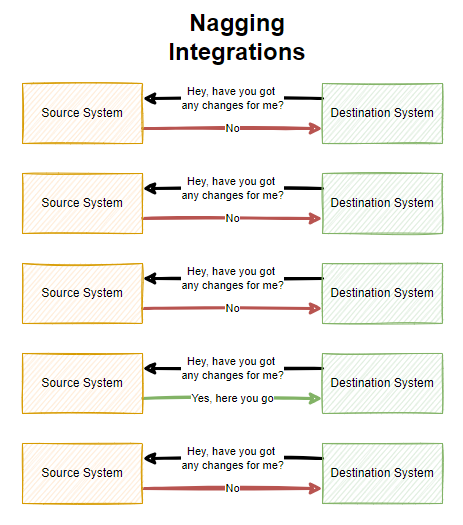

You may also want to start to look at Webhook technology when you are integrating systems together, or when you have a legacy integration in place with either CSV files flying around and/or what I like to call Nagging Integrations, whereby System A will continually poll System B asking if there have been any changes that it needs to be aware of. These types of integrations are extremely inefficient and wasteful, particularly if data only changes say once per hour and your integration is asking if anything has changed once per minute.

As you can see above, there is a lot of waste with Nagging Integrations which poll a system on a regular frequency. All of this needless additional load can result in your applications being less performant and require more infrastructure resource to power because of this inefficiency which can have a cost implication.
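To put a rough number on that waste, here is a back-of-the-envelope calculation in Python using the figures from the example earlier: polling once per minute when the data only changes once per hour. The function name wasted_polls is made up for illustration, and it assumes at most one poll per change can ever be useful.

```python
def wasted_polls(poll_interval_minutes, change_interval_minutes, hours):
    """Count total, useful and wasted polls over a period of whole hours."""
    total_minutes = hours * 60
    polls = total_minutes // poll_interval_minutes
    changes = total_minutes // change_interval_minutes
    useful = min(polls, changes)  # at most one poll per change finds new data
    return polls, useful, polls - useful


polls, useful, wasted = wasted_polls(1, 60, 24)
print(polls, useful, wasted)  # 1440 24 1416
```

Over a single day that is 1,440 requests to pick up 24 changes, whereas a Webhook would have sent just the 24 messages.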

Why Webhooks are Awesome

Many legacy integrations are simply either too slow, too dumb or a mixture of both. This is why Webhook technologies are so awesome. Webhooks are real time data and notification transfers to get data moving throughout an organization to where it needs to be. That means, no more batch jobs or overnight data loads slowing an organization down.

With technology first systems, you will often find that Webhook technologies come out of the box and are configurable to what you need so you can design your data flows efficiently throughout your enterprise organization. The reality is though, many systems aren’t built in this modern way to enable this capability, so don’t expect everything to support this type of technology.

I should also clarify, that Webhooks are a concept, it’s not something you can purchase and plug in to your existing systems as they need to be built into systems from the core. We’ll come on to this bit a little later in this blog post.

Who Uses Webhooks?

I’ve already mentioned a few examples earlier of common use cases for Webhooks, being either ecommerce and payment gateway related, or getting data moving around an enterprise organization in real time. You’ll find that a lot of SaaS (Software-as-a-Service) companies will have some form of Webhook technology baked into them.

In some cases, there are even entire business models and SaaS companies that have been built to piggy back off this technology. Companies such as IFTTT (If This Then That) and Zapier have a suite of tools in their systems which are powered through Webhook technologies under the hood to get notifications and data moving to where it should be.

Where Can I Use Webhooks?

As for where you can use Webhooks, well, this all depends on the software and systems that you are working with. In my experience, the majority of historical legacy systems simply won’t have any kind of Webhook type technology to use for integrations. You’ll often be lucky if they have APIs available in some way or another.

You tend to find that Webhook technology will sit in the big companies, the SaaS tech first companies. The bigger the company, the more configurable the Webhook technology tends to be within their system, whereas some systems only have basic levels of customization in place.

It all depends on your use case. When you want to look at getting data moving from one system to another, Webhooks are often the first choice due to how efficient they are. So do your research on the systems you are using to understand their capabilities.

If you look at modern SaaS applications that technology first organisations use on a daily basis, all of these come with Webhook technology built in;

- Stripe

- Paypal

- GitHub

- Jira

- Salesforce

- Microsoft Business Central (aka. Microsoft Navision in older terminology)

- SAP

- Microsoft Teams

To name just a very small handful of examples that you are probably familiar with. What is interesting to note is that even between the above systems there are often slight differences in terminology and capabilities depending on your individual use case.

How do Webhooks Work?

So now, onto the good bit for how Webhooks work. All of the above has been explained on a fairly high level of what Webhooks are and how they work. Now, we’re going to take a deeper dive into Webhooks so it’s clear how they work, as they are nowhere near as simple under the hood.

To help keep this bit as simple as possible, let’s break this down into a couple of core sections;

- Source System

- Destination System

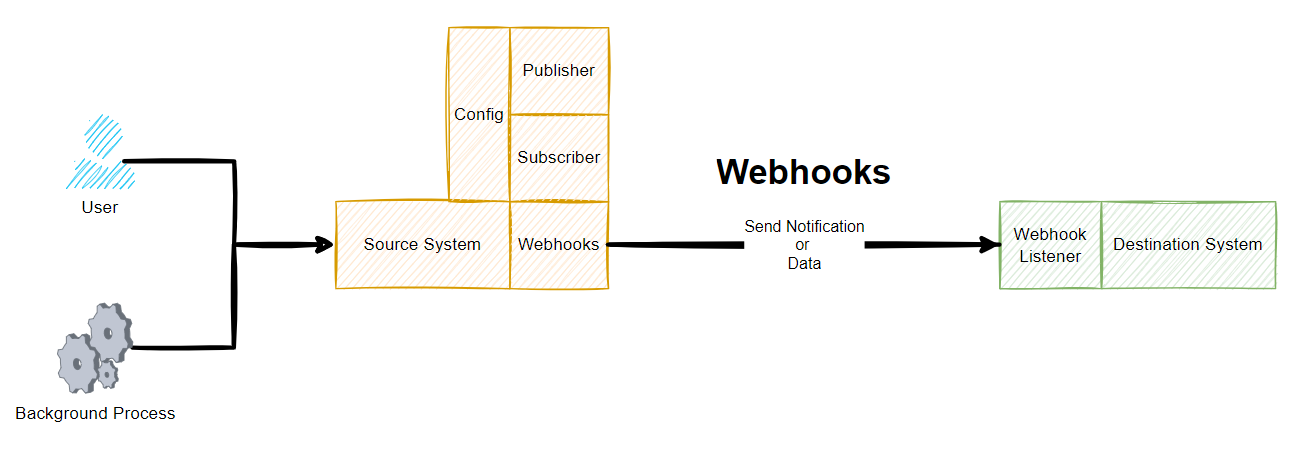

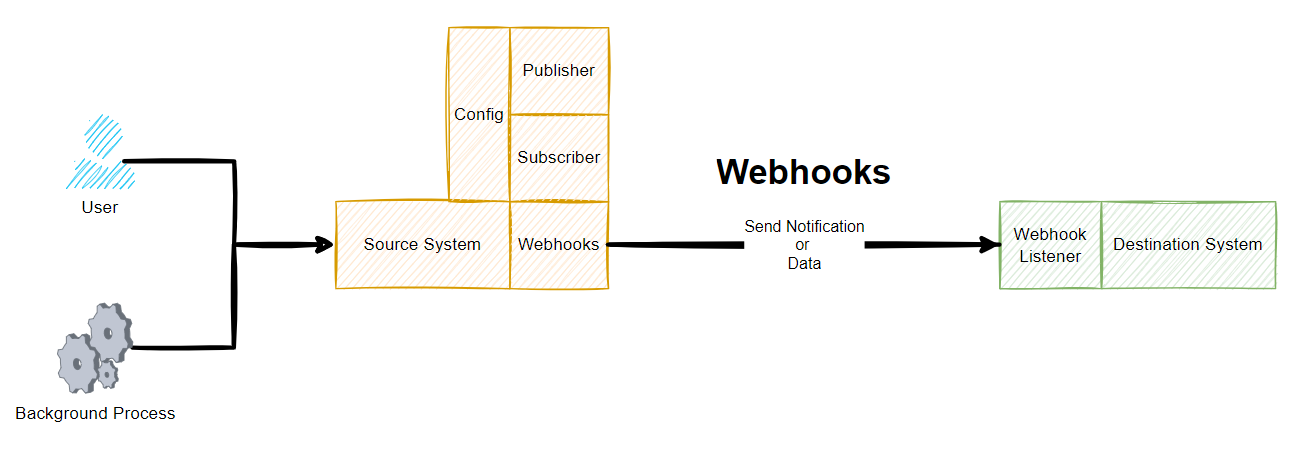

Fundamentally Webhook technology uses the Publish-Subscribe architectural design pattern, whereby one system publishes something and one or more other systems subscribe to that event so they get notified when something happens that they care about.
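The Publish-Subscribe pattern is easier to see in code than in words. Below is a minimal in-process sketch in Python. The EventBus class and the event names such as company.updated are hypothetical, and a real Webhook would deliver the payload over HTTP to another system rather than call a local function.

```python
from collections import defaultdict


class EventBus:
    """Tiny in-memory publish-subscribe hub, for illustration only."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, callback):
        self._subscribers[event_type].append(callback)

    def publish(self, event_type, payload):
        callbacks = self._subscribers.get(event_type, [])
        for callback in callbacks:
            callback(payload)
        return len(callbacks)  # how many subscribers were notified


received = []
bus = EventBus()
bus.subscribe("company.updated", received.append)
bus.publish("company.updated", {"id": 1, "name": "Example Ltd"})
bus.publish("contact.updated", {"id": 7})  # nobody subscribed, nothing delivered
print(received)
```

The publisher never needs to know who is listening, which is what lets one event fan out to several Destination Systems.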

Google actually created PubSubHubbub (Best name ever for a platform!) quite a few years ago with the aim to synchronize content from many different RSS feeds into a handy platform for content. It never really took off unfortunately, likely due to the demise in the use of RSS feeds generally as social media platforms pretty much got the attention of users. The protocol was renamed WebSub in 2017 and taken through the W3C as an open standard for communication, as a way to standardize how these things work.

What is important to note though is that Webhooks are a concept of sending data from one system to another in a real time and event-driven way. PubSubHubbub and WebSub are just one implementation of this, and in my experience, most software vendors create their own implementations of Webhook technology based on the specific details of what they want to achieve and how they want to go about doing it. In practice, there is no one size fits all so companies often build these things how they see fit, some of which are configurable and others aren’t etc.

To extend the original diagram from earlier, this is more like what a Webhook really is when you look at it end to end;

Source System Webhooks

Ultimately a source system is just a bunch of code under the hood that teams of software engineers have been crafting for many years to build the product that you see and use today. Fundamentally these systems have a core data model behind the scenes too. To keep things simple as an example, imagine the source system stores basic data about a company in different related data objects;

- Company

- Company Stores

- Company Contacts

The next step is to ensure the system is coded in a way that can ensure that when data changes for these different data objects that an event is captured, aka. published, which can then be subscribed to by another system.

Depending on the system in use, this may be a hard coded approach to sending data to another system and this can be absolutely fine in many scenarios despite the negative connotations with the word hard coded. In more advanced technology first SaaS platforms, this kind of thing generally has a user interface designed to allow a user of the system to configure what they want to do and when etc. These two different approaches involve vastly different amounts of effort to build, so in many cases this is an evolution of time to get the system more configurable and less hard coded, but this is all dependent on use cases.

Then when it comes to the implementation, there are additional nuances to consider. Do we queue the messages, or do we send and forget in real time? What about retries if a message doesn’t get through? Do we care if something fails, or do we implement a FIFO (First In First Out) message queue to ensure we have the visibility that something has been successfully sent?

What about logging? When things go wrong we need to understand why. Even with some of the big players mentioned earlier, not all of these concepts are built into their systems yet in a way that can be accessed by a user.

What about the order of execution, is that important? Well, yes in a lot of cases it is to ensure that the updates are processed in the correct manner and the latest update is an accurate reflection of the state of the data. In other cases, the order of execution may not be important at all as it is a simple notification that something happened and when you click through to see the details that has the most up to date information. All of these things need to be considered.
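To make the queueing, retry and ordering questions concrete, here is one possible approach sketched in Python: a FIFO queue that retries each message a few times before giving up and moving on, so order is preserved. The names drain_queue and flaky_send are hypothetical, the send function is a stand-in for a real HTTP call, and a production system would normally add delays between retries and proper logging.

```python
from collections import deque


def drain_queue(messages, send, max_attempts=3):
    """Deliver messages in FIFO order, trying each up to max_attempts times."""
    queue = deque(messages)
    delivered, failed = [], []
    while queue:
        message = queue.popleft()
        for _attempt in range(max_attempts):
            if send(message):
                delivered.append(message)
                break
        else:
            # Every attempt failed, so record it for someone to investigate
            failed.append(message)
    return delivered, failed


attempts = {}


def flaky_send(message):
    """Stand-in for an HTTP POST: 'b' works on the 2nd try, 'c' never works."""
    attempts[message] = attempts.get(message, 0) + 1
    if message == "b":
        return attempts[message] >= 2
    return message != "c"


delivered, failed = drain_queue(["a", "b", "c"], flaky_send)
print(delivered, failed)  # ['a', 'b'] ['c']
```

Retrying the message at the head of the queue before touching the next one is what keeps the updates in order; the trade-off is that one stubborn message delays everything behind it.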

Destination System Webhooks

This is the part that is often forgotten when it comes to Webhook technologies. Just because a Source System can send out a message to a Destination System this isn’t the end of it. What does the Destination System do with the message? Does it even understand the message? This is where things can get complex.

Fundamentally a Webhook is essentially built up of the basic building blocks;

- URL – Where the message should be sent

- Payload – What the message is, usually sent in JSON

Then it is up to the Destination System what to do with this message. And this is where the other half of the complexities lie. This is what is generally referred to as a Webhook Listener. There is no real difference between this terminology and that of a standard API which is basically doing the same thing when data is sent into it using a POST Request.

What the Destination System chooses to do with this message is up to itself. What HTTP Status Code should be sent?

- 200 OK

- 400 Bad Request (aka. I’ve no idea how to interpret your message)

- 401 Unauthorized (aka. you are not authenticated so you cannot access the system)

- 403 Forbidden (aka. you are authenticated but you don’t have permissions to do what you are trying to do)

Ultimately how the Destination System behaves determines if the Source System is happy that the message has got through or not.
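As a rough illustration of a Webhook Listener, the Python sketch below maps an incoming POST request onto the four status codes listed above using only the standard library. The tokens, the Bearer header scheme and the rule that a payload must contain an "event" field are all assumptions made up for this example; every real system defines its own rules.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

SHARED_TOKEN = "example-token"       # hypothetical token allowed to send events
READ_ONLY_TOKEN = "read-only-token"  # hypothetical token without write permission


def decide_status(auth_header, body):
    """Pick the HTTP status code for an incoming webhook message."""
    known = (f"Bearer {SHARED_TOKEN}", f"Bearer {READ_ONLY_TOKEN}")
    if auth_header not in known:
        return 401  # not authenticated
    if auth_header == f"Bearer {READ_ONLY_TOKEN}":
        return 403  # authenticated but not permitted
    try:
        payload = json.loads(body)
    except ValueError:
        return 400  # body is not valid JSON
    if not isinstance(payload, dict) or "event" not in payload:
        return 400  # JSON, but not a message we understand
    return 200


class WebhookListener(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        self.send_response(decide_status(self.headers.get("Authorization"), body))
        self.end_headers()


def run(port=8000):
    """Start the listener on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), WebhookListener).serve_forever()
```

Calling run() starts the listener locally. Keeping the decision logic in decide_status, separate from the HTTP plumbing, makes it easy to check the behaviour without starting a server.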

Summary

Overall, I’m sure you can see that while Webhooks seem fairly straightforward conceptually, when you get under the hood they can be extremely complex beasts. This complexity from many of the big SaaS providers is often abstracted through the use of marketplaces and plugins where apps or connectors or whatever they like to call them do the translation between the systems to ensure the right message gets through when it should. And likewise there are often user configurable features within modern systems that allow you to easily add/edit/remove configured Webhooks to suit your needs at that point in time. Some systems are more/less configurable than others, so keep this in mind.

Webhook technologies are seriously powerful tools when implemented well. The reality is that they are difficult to build when you are designing a platform that requires this technology embedded into it. As a user of Webhook technologies, you’ll find this technology extremely beneficial in a wide range of use cases. As a software engineer involved with designing and building the system architecture for Webhook technologies, well, this needs a lot of thought to get right and to continually evolve to meet the ever growing expectations from users.

by Michael Cropper | Aug 31, 2021 | IT |

BIOS boot modes are so long standing in the IT industry that they seemed never to change: for years there was a single option, the BIOS boot mode. The usual blue screen that only works with a keyboard and not a mouse, the blue screen that you have to access from the black screen on boot, usually by repetitively tapping on either the F10, F2, F12, F1 or DEL key, depending on your motherboard’s manufacturer. Simple right? No. It’s an absolute nightmare.

But hey, we’ve got a newer and upgraded version of BIOS, and it’s called UEFI. UEFI was actually launched officially as a standard back in 2006 believe it or not. The time from when a standard is launched vs how quickly this moves throughout the IT hardware manufacturers is often measured in years, not months or days. So actually this is a fairly ‘new’ thing and many consumer hardware manufacturers only started to phase this technology into their systems around 2017.

The whole point of UEFI is the concept of Firmware-as-a-Service which is focused around extensibility rather than fixed approaches. What this means is that UEFI is designed to allow the utilisation of large disk partitions of over 2TB in size along with a modular design which enables both backwards and forwards compatibility.
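The 2TB figure is not arbitrary. It falls out of the partition table format usually paired with each boot mode: MBR for Legacy BIOS and GPT for UEFI. A quick calculation in Python shows where the limits come from, assuming 512 byte sectors with 32-bit sector addresses in MBR and 64-bit sector addresses in GPT; drives with larger sectors change the numbers.

```python
SECTOR_BYTES = 512  # assumed logical sector size

mbr_limit = (2 ** 32) * SECTOR_BYTES  # MBR stores sector addresses in 32 bits
gpt_limit = (2 ** 64) * SECTOR_BYTES  # GPT stores sector addresses in 64 bits

print(mbr_limit / 2 ** 40)   # 2.0, i.e. 2 TiB
print(gpt_limit / 10 ** 21)  # roughly 9.44 zettabytes
```

So the jump from 32-bit to 64-bit addressing is what takes the ceiling from about 2TB to a size that no current drive comes anywhere near.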

Fundamentally the difference between BIOS and UEFI comes down to the link between the hardware and boot loader layer on your drives and bootable devices connected to your system. Think of this kind of like the ‘Construct Program’ in The Matrix – you can load whatever you want, given the constraints of the system.

In the real world what this means is that traditional BIOS systems are based on simpler computer chips with limited memory and capacity, whereas UEFI is capable of operating on systems that have more advanced hardware chips. Hardware and software are intricately linked. UEFI stands for Unified Extensible Firmware Interface.

One of the huge benefits from a user perspective is that within UEFI you can actually use your mouse to control the system rather than everything being keyboard driven as in the Legacy BIOS system. Beyond that, you are probably never going to need to worry about these differences, that is until something doesn’t work as you expect in relation to a piece of hardware not booting correctly. This is where things are a bit messy and it’s likely going to take another decade until things fully iron themselves out.

As a general rule of thumb, for modern hardware, just use UEFI to Boot. For older hardware or older operating systems, you may have to use Legacy Boot to get the machine to boot up successfully.

Rather than re-invent the wheel, here is a handy source of the core differences between Legacy Boot mode and UEFI Boot mode;

What is the difference between UEFI and Legacy Boot settings:

- Legacy is traditional and very easy method which had worked absolutely fine so far.

- UEFI is critical; Windows 10 version 1709 was the first OS release which worked really well with it.

- Legacy has best in performance had minimum boot issues and easiest to install.

- UEFI is now stable, but most IT techs still do not know how to use this feature and correctly install the OS.

- Legacy was safe and secure and very user friendly, it is just that you can select the boot device which you want to boot from and it search for Master Boot Record MBR and used to pick it up.

- UEFI is marketed as more secure but there is no more security, just that it supports TPM which enables Bitlocker, and it has the extra headache of having to mention the EFI boot manager, which is not secure if you have access to BIOS and have done it a few times earlier, just like Legacy.

- Legacy has maximum partitioning size of 2 Terabyte.

- UEFI has a maximum partitioning size of 9 Zettabytes, which is huge.

- Legacy can have 4 Primary Partition.

- UEFI can have 128 Primary Partitions.

- Legacy is good for loading 2 OS on same system.

- UEFI is Great for loading more than 2 OS on the single system.

- There are more differences and UEFI is way beyond the Legacy BIOS technology but it has not yet revealed its true power.

- Legacy uses Master Boot Record.

- UEFI uses GUID Partition Table.

- Legacy is traditional Firmware which interacts with Motherboard and OS.

- UEFI is also just a Firmware with advanced options.

- Microsoft New OS will no more support Legacy they have transformed now.

- Microsoft stopped support for Legacy OS after the launch of the 1709 Windows update.

- The last best Hard Drive supported by Legacy BIOS is SATA SSD.

- The last best Hard Drive supported by UEFI is the M.2 PCIE SSD Hard Drive, which does not work on Legacy BIOS.

- Legacy supports SCCM in the traditional way, e.g. you can enable PXE boot and select the option to Boot From Network through LAN.

UEFI also supports and enables options to boot from a traditional network, with IPV4 as well as IPV6. UEFI firmware is keeping open all possibilities for future options. To enable it you need to enable the UEFI Network Stack, which will give options for IPV4 and IPV6.

by Michael Cropper | May 6, 2021 | Client Friendly |

Imagine….. a situation where modern Agile Delivery Methodologies were applied to a very entrenched ‘way of working’ such as local elections that are happening right now throughout the UK. Well, let’s take a look at how this plays out so it’s easy to see how this can apply to your business and deliver value (aka. more efficiencies, sales, profitability etc. aka. £££) to your business faster. Who doesn’t want that, right?

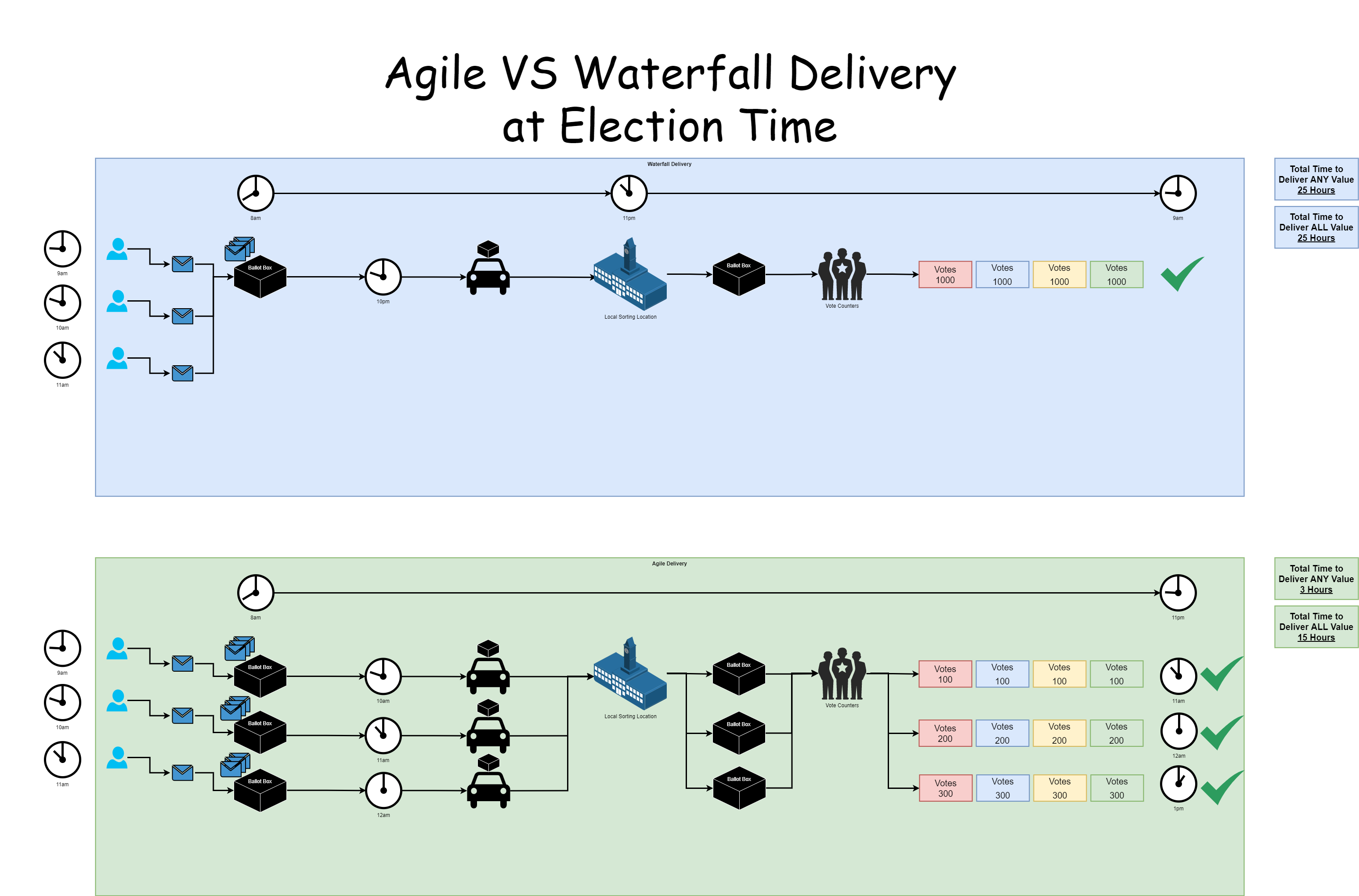

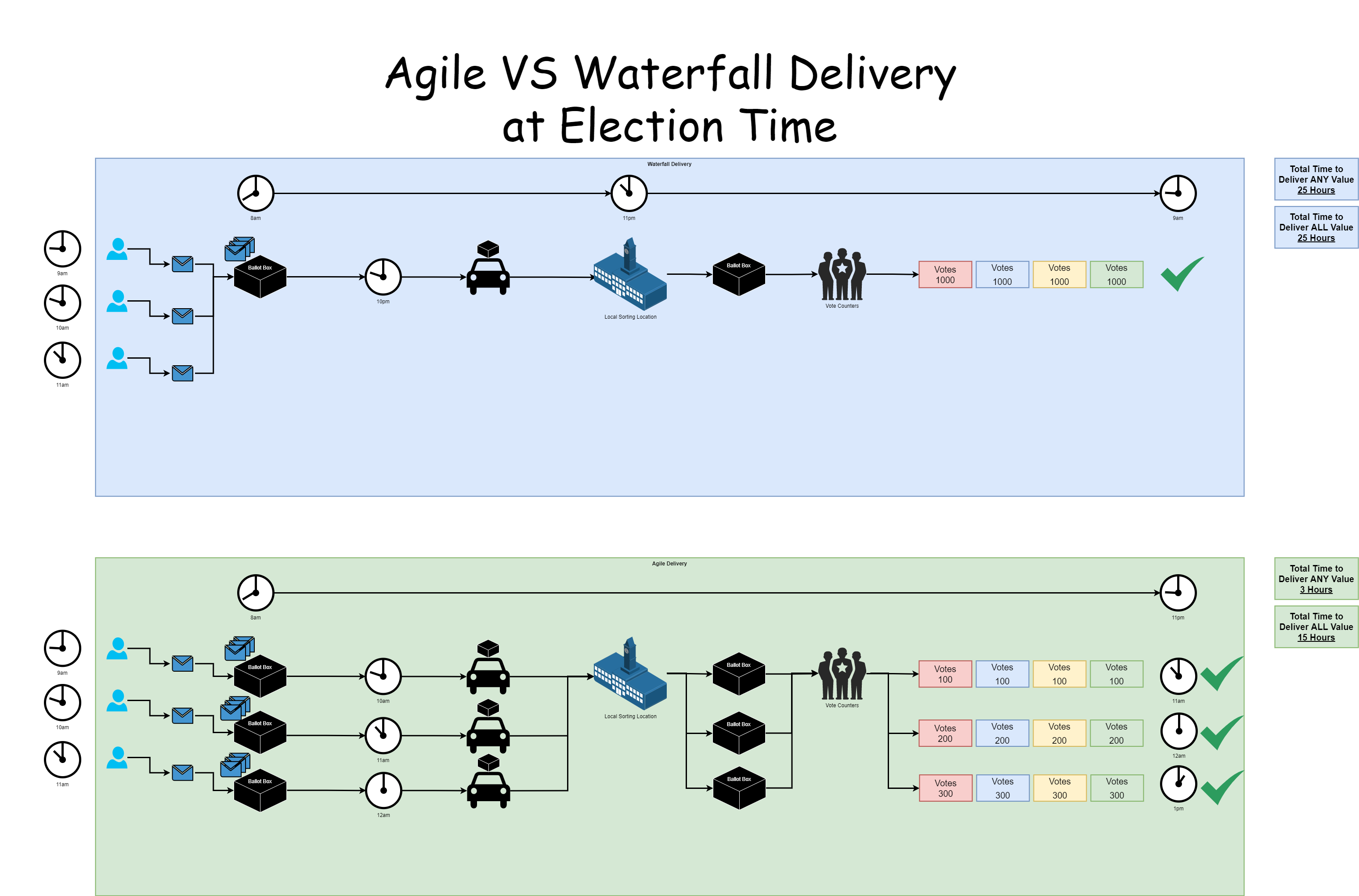

There isn’t going to be a lot of talking in this blog post, more studying the image. Note the word value used below, think £££ ultimately. The core things to point out;

- With Waterfall;

- You only get to deliver any value right at the end, taking almost double the amount of time compared with the Agile Delivery Methodology

- The ‘Big Bang’ approach is heavily reliant on that single delivery method (aka. the car) working and not breaking down. If the car breaks down, the AA/RAC ( #ManyOtherProvidersAvailable 😉 ) have to be called out to save the day, wasting hours of time in the process

- The focus from the delivery team, aka. the car driver and the Vote Counters, is high pressure, high speed, high quality, which naturally results in many errors (aka. Trump and his x+ days of recount nonsense that happened in the US recently). Why put your team under such pressure when you can adjust the way of working to deliver continually?

- With Agile;

- You get to deliver value within as little as 3 hours, and complete value is delivered in only 15 hours, almost 50% more efficient than waterfall

- The ‘Phased Delivery’ approach is able to cope with issues with deliverability. Assuming different transport vehicles for the delivery of each ballot box, a break down in one doesn’t cause issues for the whole

- The focus from the delivery team, aka. the car driver and the Vote Counters, is a low pressure, moderate speed, high quality, which naturally results in low errors. A low pressure environment leads to a happy workforce.

The main benefit is that we don’t need to wait up all night (seriously…. who does that?) to get the election results. Or in the case of recent US election results, several days.

There are so many analogies that I could go into with this blog post. But seriously, while this is a bit of fun at election time, start to think how you can apply Agile Delivery Methodologies to your organisation. The more you can chop down deliverables into tiny tiny pieces, not even large phases, the more you will start to see productivity rise within your organisation when it comes to delivering value to your customers. We did a blog post a while back titled IT Project Delivery Achieving More by Doing Less which goes into a lot more detail about this topic. If in doubt, get in touch to see how you could supercharge the delivery of your organisation from an IT perspective with no more ‘Go Live Dates’.